Facebook wants to build a ‘hateful meme’ AI to clean up its site, WhatsApp and Instagram

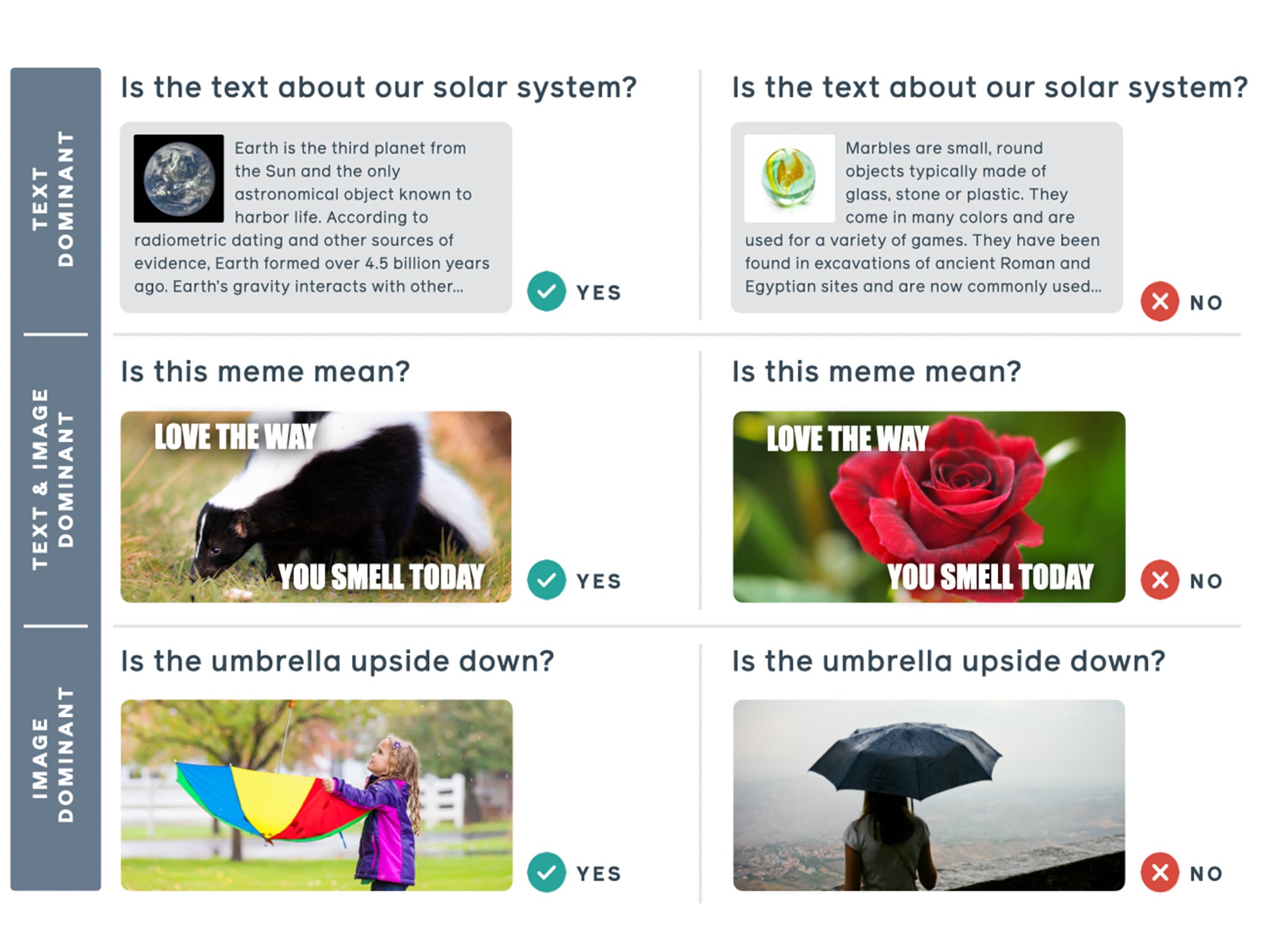

Computers find it difficult to read text and images simultaneously as humans do, so the social media giant is hoping to develop an algorithm that can manage the feat

Social media giant Facebook has launched a competition with a $100,000 prize pool in order to develop an artificial intelligence system that can detect “hateful” memes.

The company will provide a data set on which researchers can train their algorithms. An algorithm is a finite sequence of instructions that a computer can follow in order to solve a problem.

While humans are able to understand that the words and images in a meme are supposed to be read together, with contextual information from one informing the other, Facebook says that memes are difficult for computers to analyse because they cannot simply analyse the text and image separately.

Instead, they must “combine these different modalities and understand how the meaning changes when they are presented together,” the company wrote in its announcement.

In order to create a dataset that could be used by researchers, Facebook created new memes based on those that had been shared on social media sites, but replaced the original images with licensed pictures from Getty Images that still preserved the original message of the meme.

“If the original meme had a photo of a desert, we picked a similar desert photo from Getty. If no suitable replacement image could be found, the meme was discarded,” Facebook said.

The hope is to develop a tool that would take the different modalities – the image and the text – and “fuse” them early in the classification process. For a computer algorithm, this would mean that it is looking at both the image and the text in the same way a human would before detecting whether the meme is “hateful” or “not hateful”.

This is more difficult to build than other models which have been used, where the image and the text were separated, analysed by the computer, and then recombined to detect the emotional message behind the meme.
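The difference between the two approaches can be illustrated with a minimal sketch. This is not Facebook's model: the function names, the toy feature vectors and the random, untrained weights are all assumptions made for illustration. The "late" version scores the image and the text separately and only then combines the results, while the "early" version joins the two into a single vector before any classification takes place.

```python
import math
import random


def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    """Dot product of two equal-length sequences of numbers."""
    return sum(x * y for x, y in zip(a, b))


def late_fusion_score(image_vec, text_vec, w_image, w_text):
    """Hypothetical late fusion: score each modality alone, then average."""
    p_image = sigmoid(dot(image_vec, w_image))
    p_text = sigmoid(dot(text_vec, w_text))
    return 0.5 * (p_image + p_text)


def early_fusion_score(image_vec, text_vec, w_hidden, w_out):
    """Hypothetical early fusion: join the modalities, then classify once."""
    joint = list(image_vec) + list(text_vec)
    # Each hidden unit sees image and text features together, so it can
    # respond to combinations of the two rather than to either in isolation.
    hidden = [math.tanh(dot(row, joint)) for row in w_hidden]
    return sigmoid(dot(hidden, w_out))


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy stand-ins for features extracted from a meme (assumed sizes).
    image_vec = [rng.gauss(0, 1) for _ in range(4)]
    text_vec = [rng.gauss(0, 1) for _ in range(3)]
    # Random, untrained weights: the scores printed below are meaningless
    # as predictions and only show how the data flows in each design.
    w_image = [rng.gauss(0, 1) for _ in range(4)]
    w_text = [rng.gauss(0, 1) for _ in range(3)]
    w_hidden = [[rng.gauss(0, 1) for _ in range(7)] for _ in range(5)]
    w_out = [rng.gauss(0, 1) for _ in range(5)]

    print("late fusion score: ", late_fusion_score(image_vec, text_vec, w_image, w_text))
    print("early fusion score:", early_fusion_score(image_vec, text_vec, w_hidden, w_out))
```

In the late version, nothing about the text can change how the image is scored. In the early version, the hidden layer receives both at once, which is what would allow a trained model to pick up on a meaning that only emerges from the pairing.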

Facebook has provided further information for researchers, an accompanying paper and the official rules of the competition, which is being held in conjunction with DrivenData, an organisation that hosts challenges for data scientists.

The company’s definition of “hateful”, as explained in the research paper, is: “A direct or indirect attack on people based on characteristics, including ethnicity, race, nationality, immigration status, religion, caste, sex, gender identity, sexual orientation, and disability or disease. We define attack as violent or dehumanizing (comparing people to non-human things, e.g. animals) speech, statements of inferiority, and calls for exclusion or segregation. Mocking hate crime is also considered hate speech.”

This is not the only instance where Facebook has attempted to develop an artificial intelligence capable of detecting offensive memes. In 2018, the company announced “Rosetta”, which it uses to identify “inappropriate or harmful content” on its platform. The system would also be used to detect text on street signs or storefronts.

Should an effective algorithm be developed, it would be used on Instagram or WhatsApp, the company confirmed. Both platforms are owned by Facebook and have been criticised for their use in cyberbullying and in spreading misinformation and disinformation.

“The challenge is designed to be universal rather than focused on a specific app”, a Facebook spokesperson said.

Enforcing takedowns of “hateful” or offensive content is a task that many social media companies have found difficult, and they have also struggled to define what counts as such content. Recently, Facebook revealed members of its new oversight board – an organisation that will oversee the “most challenging content issues” for the social network – in order to combat that.

Twitter is also experimenting with ways to contend with offensive content on its platform, by sending prompts to users asking them to “revise [their] reply” when tweeting something “harmful”.
