Invasion of the algorithms: The modern-day equations which can rule our lives

These are equations which, by processing huge amounts of micro-data, can predict our behaviour – but are they for better or worse?

“This is a miracle of modern technology,” says dating-agency proprietor Sid Bliss, played by Sid James, in the 1970 comedy film Carry On Loving. “All we do is feed the information into the computer here, and after a few minutes the lady suitable comes out there,” he continues, pointing to a slot.

There’s the predictable joke about the slot being too small, but Sid’s client is mightily impressed by this nascent display of computer power. He has faith in the process, and is willing to surrender meekly to whatever choices the machine makes. The payoff is that the computer is merely a facade; on the other side of the wall, Sid’s wife (played by Hattie Jacques) is processing the information using her own, very human methods, and bunging a vaguely suitable match back through the slot. The clients, however, don’t know this. They think it’s brilliant.

Technology has come a long way since Sid James delivered filthy laughs into a camera lens, but our capacity to be impressed by computer processes we know next to nothing about remains enormous. All that’s changed is the language: it’s now the word “algorithm” that makes us raise our eyebrows appreciatively and go “oooh”. It’s a guaranteed way of grabbing our attention: generate some findings, attribute them to an algorithm, and watch the media and the public lap them up.

“Apothic Red Wine creates a unique algorithm to reveal the ‘dark side’ of the nation’s personas,” read a typical press release that plopped into hundreds of email inboxes recently; Yahoo, the Daily Mirror, Daily Mail and others pounced upon it and uncritically passed on the findings. The level of scientific rigour behind Apothic’s study was anyone’s guess – but that didn’t matter because the study was powered by an algorithm, so it must be true.

The next time we’re about to be superficially impressed by the unveiling of a “special algorithm”, it’s worth remembering that our lives have been ruled by them since the year dot and we generate plenty ourselves every day. Named after the eminent Persian mathematician Muhammad ibn Musa Al-Khwarizmi, algorithms are merely sets of instructions for how to achieve something; your gran’s chocolate-cake recipe could fall just as much into the algorithm category as any computer program. And while they’re meant to define sequences of operations very precisely and solve problems very efficiently, they come with no guarantees. There are brilliant algorithms and there are appalling algorithms; they could easily be riddled with flawed reasoning and churn out results that raise as many questions as they claim to answer.

This matters, of course, because we live in an information age. Data is terrifyingly plentiful; it’s piling up at an alarming rate and we have to outsource the handling of that data to algorithms if we want to avoid a descent into chaos. We trust sat-nav applications to pull together information such as length of road, time of day, weight of traffic, speed limits and road blocks to generate an estimate of our arrival time; but their accuracy is only as good as the algorithm. Our romantic lives are, hilariously, often dictated by online-dating algorithms that claim to generate a “percentage match” with other human beings.
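The article doesn't say how any particular sat-nav works, so what follows is only a toy sketch under stated assumptions. A route is split into segments, each with a hypothetical length, speed limit, traffic factor and "blocked" flag, and an invented rush-hour multiplier stands in for "time of day". It illustrates the point above: the arrival estimate can only be as good as the rules and numbers fed into it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Segment:
    """One stretch of road on a route (hypothetical fields, for illustration)."""
    length_km: float
    speed_limit_kmh: float
    traffic_factor: float  # must be > 0; 1.0 = free-flowing, 0.5 = half the limit
    blocked: bool = False


def rush_hour_penalty(departure: datetime) -> float:
    """Assumed extra slowdown during weekday rush hours (made-up numbers)."""
    if departure.weekday() < 5 and departure.hour in (7, 8, 17, 18):
        return 1.3
    return 1.0


def estimate_arrival(route: List[Segment], departure: datetime) -> Optional[datetime]:
    """Add up travel time segment by segment; return None if any road is blocked."""
    hours = 0.0
    for seg in route:
        if seg.blocked:
            return None  # a real system would look for another route instead
        effective_speed = seg.speed_limit_kmh * seg.traffic_factor
        hours += seg.length_km / effective_speed
    hours *= rush_hour_penalty(departure)
    return departure + timedelta(hours=hours)
```

For example, a route of 10km at 50km/h in clear traffic plus 60km of motorway where traffic moves at half of a 120km/h limit takes 12 + 60 = 72 minutes on a Sunday, so `estimate_arrival([Segment(10, 50, 1.0), Segment(60, 120, 0.5)], datetime(2015, 3, 1, 12, 0))` gives 13:12. Change any one assumption (the traffic factor, the rush-hour penalty) and the answer changes with it.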

Our online purchases of everything from vacuum cleaners to music downloads are affected by algorithms. If you’re reading this piece online, an algorithm will have probably brought it to your attention. We’re marching into a future where our surroundings are increasingly shaped, in real time, by mathematics. Mentally, we’re having to adjust to this; we know that it’s not a human being at Netflix or Apple suggesting films for us to watch, but perhaps the algorithm does a better job. Google’s adverts can seem jarring – trying to flog us products that we have just searched for – precisely because algorithms tailor them to our interests far better than a human ever could.

With data being generated by everything from England’s one-day cricket team to your central heating system, the truth is that algorithms beat us hands down at extrapolating meaning.

“This has been shown to be the case on many occasions,” says data scientist Duncan Ross, “and that’s for obvious reasons. The sad reality is that humans are a basket of biases which we build up over our lives. Some of them are sensible; many of them aren’t. But by using data and learning from it, we can reduce those biases.”

In the financial markets, where poor human judgement can lead to eye-watering losses, the vast majority of transactions are now outsourced to algorithms which can react within microseconds to the actions of, well, other algorithms. They’ve had a place in the markets ever since Thomas Peterffy made a killing in the 1980s by using them to detect mispriced stock options (a story told in fascinating detail in the book Automate This by Christopher Steiner), but today data science drives trade. Millions of dollars’ worth of stocks change hands, multiple times, before one trader can shout “sell!”.

We humans have to accept that algorithms can make us look comparatively useless (except when they cause episodes like Wall Street’s “flash crash” of May 2010, when the Dow Jones Industrial Average briefly fell almost 1,000 points in a single afternoon before recovering).

But that doesn’t necessarily feel like a good place to be. The increasing amount of donkey work undertaken by algorithms represents a significant shift in responsibility, and by association a loss of control. Data is power, and when you start to consider all the ways in which our lives are affected by the processing of that data, it can feel like a dehumanising step. Edward Snowden revealed the existence of an algorithm used to determine whether or not you were a US citizen; if you weren’t, you could be monitored without a warrant. But even aside from the plentiful security and privacy concerns, other effects are slipping under our radar, such as the homogenisation of culture; for many years, companies working in the film and music industries have used algorithms to process scripts and compositions to determine whether they’re worth investing in. Creative ventures that don’t fit the pattern are less likely to come to fruition. The algorithms forged by data scientists, by speeding up processes and saving money, have a powerful, direct impact on all of us.

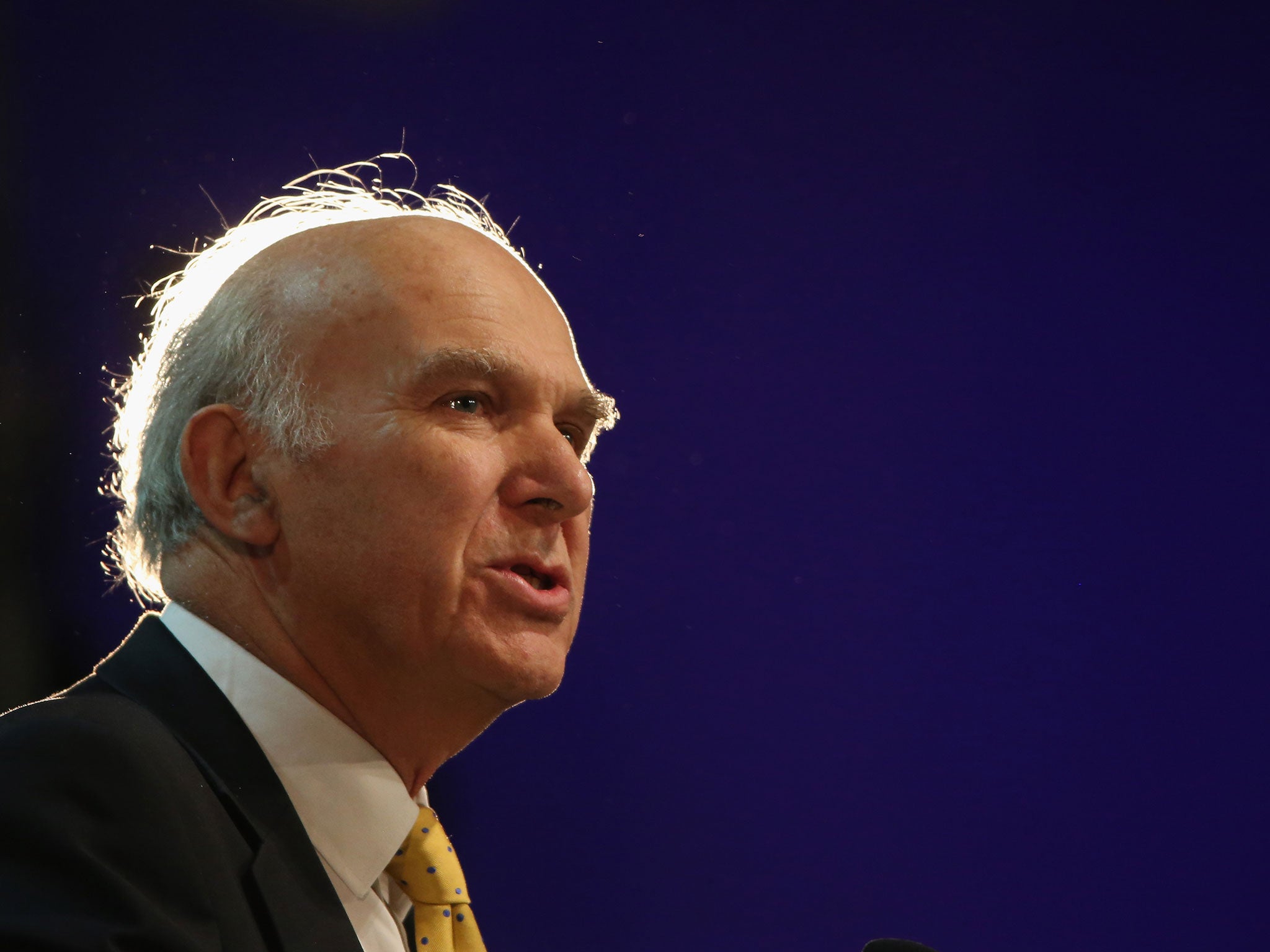

Little wonder that the Government is taking a slightly belated interest. Last year Vince Cable, the Business Secretary, announced £42m of funding for a new body, the Alan Turing Institute, which is intended to position the UK as a world leader in algorithm research.

The five universities selected to lead that institute (Cambridge, Edinburgh, Oxford, Warwick and UCL) were announced last month; they will lead the efforts to tame and use what’s often referred to as Big Data.

“So many disciplines are becoming dependent upon it, including engineering, science, commerce and medicine,” says Professor Philip Nelson, chief executive of the Engineering and Physical Sciences Research Council, the body co-ordinating the institute’s output. “It was felt very important that we put together a national capability to help in the analysis and interpretation of that data. The idea is to pull together the very best scientists to do the fundamental work in maths and data science to underpin all these activities.”

But is this an attempt to reassert control over a sector that’s wielding an increasing amount of power?

“Not at all,” says Nelson. “More than anything else, it’s about making computers more beneficial to society by using the data better.”

On the one hand we see algorithms used to do pointless work (“the most-depressing day of the year” simply does not exist); on the other we’re told to fear subjugation to our computer overlords. But it’s easy to forget the power of the algorithm to do good.

Duncan Ross is one of the founder directors of DataKind UK, a charity that helps other charities make the best use of the data at their disposal.

“We’re in this world of constrained resources,” he says, “and we can ill afford for charities to be doing things that are ineffective.”

From weekend “datathons” to longer-term, six-month projects, volunteers help charities to solve a range of problems.

“For example,” says Ross, “we did some recent work with Citizens Advice, who have a lot of data coming in from their bureaux.

“They’re keen to know what the next big issue is and how they can spot it quickly; during the payday-loans scandal they felt that they were pretty late to the game, because even though they were giving advice, they were slow to take corporate action. So we worked with them on algorithms that analyse the long-form text reports written by local teams in order to spot new issues more quickly.

“We’re not going to solve all the charities’ problems; they’re the experts working on the ground. What we can do is take their data and help them arrive at better decisions.”
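Ross doesn't describe the method, so this is not DataKind's algorithm. It is a minimal sketch of one common way to spot a new issue in free text, assuming the reports arrive as plain strings: count how many recent reports mention each word, and flag words that turn up far more often than they did in an earlier baseline period. The stopword list, thresholds and sample reports are all invented for illustration.

```python
import re
from collections import Counter
from typing import Iterable, List, Set, Tuple

# Hypothetical list of words too common to be interesting.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "for", "is", "was",
             "with", "on", "about", "their", "client", "clients", "they", "by"}


def doc_terms(report: str) -> Set[str]:
    """Lower-case words in one report, minus stopwords, each counted once."""
    words = re.findall(r"[a-z]+", report.lower())
    return {w for w in words if w not in STOPWORDS}


def document_frequency(reports: Iterable[str]) -> Tuple[Counter, int]:
    """How many reports mention each word, plus the number of reports."""
    counts: Counter = Counter()
    n = 0
    for report in reports:
        counts.update(doc_terms(report))
        n += 1
    return counts, n


def emerging_terms(baseline: List[str], recent: List[str],
                   min_ratio: float = 3.0, min_count: int = 2) -> List[Tuple[str, float]]:
    """Words whose share of recent reports far exceeds their baseline share."""
    base_counts, n_base = document_frequency(baseline)
    new_counts, n_new = document_frequency(recent)
    flagged = []
    for term, count in new_counts.items():
        if count < min_count:
            continue  # ignore one-off mentions
        recent_share = count / n_new
        # Add-one smoothing so a never-seen word doesn't divide by zero.
        baseline_share = (base_counts[term] + 1) / (n_base + 1)
        ratio = recent_share / baseline_share
        if ratio >= min_ratio:
            flagged.append((term, ratio))
    return sorted(flagged, key=lambda t: t[1], reverse=True)
```

With a baseline of reports about housing benefit, council tax and landlords, and a recent batch in which two of three reports mention payday loans, the words "payday" and "loan" are flagged. A real system would also need to cope with phrases, misspellings and the sheer variety of ways people describe the same problem.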

Data sets can be investigated in unexpected ways to yield powerful results. For example, Google has developed a way of aggregating users’ search data to spot flu outbreaks.

“That flu algorithm [Google Flu Trends] picked up on people searching for flu remedies or symptoms,” says Ross, “and by itself it seemed to be performing about as well as the US Centers for Disease Control. If you take the output of that algorithm and use it as part of the decision-making process for doctors, then we really get somewhere.”
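The article gives no detail of how Flu Trends turned searches into estimates. As an illustration only, assume a straight-line relationship, learned from past weeks, between the share of searches that mention flu and the officially reported rate of flu-like illness; that line can then estimate the current week before official figures arrive. All the figures below are invented, and this plain least-squares fit is a simplification, not Google's model.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Hypothetical weekly history: percentage of searches that were flu-related,
# alongside the officially reported percentage of flu-like illness.
search_share = [0.5, 1.0, 1.5, 2.0, 2.5]
official_rate = [1.1, 2.0, 3.1, 3.9, 5.0]

a, b = fit_line(search_share, official_rate)


def nowcast(current_search_share: float) -> float:
    """Estimate this week's illness rate from this week's search share."""
    return a + b * current_search_share
```

Here the fitted line is roughly rate = 0.11 + 1.94 × search share, so a week in which 3% of searches mention flu gives an estimate of about 5.9%. Used as Ross suggests, a figure like this would be one input to doctors' decisions rather than a replacement for official surveillance; a relationship learned from past seasons can also drift if people's search habits change.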

But Google, of course, is a private company with its own profit motives, and this provokes another algorithmic fear: that Big Data is being processed by algorithms that might not be working in our best interests. We have no way of knowing; we feel far removed from these processes that affect us day to day.

Ross argues that it’s perfectly normal for us to have little grasp of the work done by scientists.

“How much understanding is there of what they actually do at Cern?” he asks. “The answer is almost none. Sometimes, with things like the Higgs boson, you can turn it into a story where, with a huge amount of anecdote, you can just about make it exciting and interesting – but it’s still a challenge.

“As far as data is concerned, the cutting-edge stuff is a long way from where many organisations are; what they need to be doing is much, much more basic. But there are areas where there are clearly huge opportunities.”

That’s an understatement. As the so-called “internet of things” expands, billions of sensors will surround us, each of them a data point, each of them with algorithmic potential. The future requires us to place enormous trust in data scientists; just like the hopeful romantic in Carry On Loving, we’ll be keeping our fingers crossed that the results emerging from the slot are the ones we’re after.

We’ll also be keeping our fingers crossed that the processes going on out of sight, behind that wall, aren’t overseen by the algorithmic equivalent of Sid James and Hattie Jacques.

Here’s hoping.

The visualization at the top of this article was supplied by curalytics.com
