Trump, the Pope and Yoda: the rise of Midjourney’s fake images

Isaac Stanley-Becker and Drew Harwell say rapid advances in technology and few rules have allowed the tiny company to make the most of the wild frontier of AI image generation

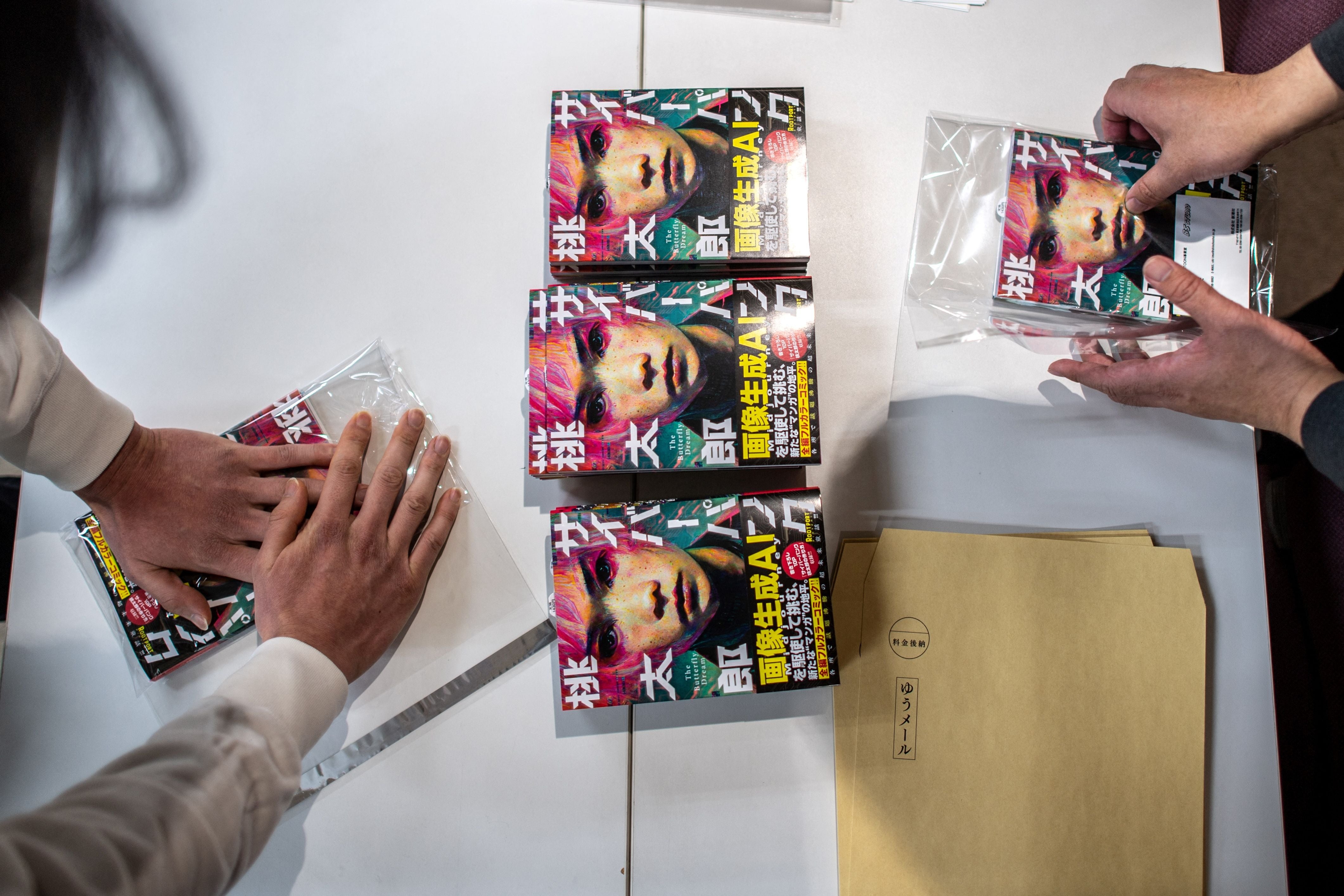

The AI image generator Midjourney has quickly become one of the internet’s most eye-catching tools, creating realistic-looking fake visuals of former president Donald Trump being arrested and Pope Francis wearing a stylish coat, with the aim of “expanding the imaginative powers of the human species”.

But the year-old company, run out of San Francisco with only a small collection of advisers and engineers, also has unchecked authority to determine how those powers are used. It allows, for example, users to generate images of President Biden, Vladimir Putin and other world leaders – but not China’s president, Xi Jinping.

“We just want to minimise drama,” the company’s founder and CEO, David Holz, said last year in a post on the chat service Discord. “Political satire in China is pretty not okay,” he added, and “the ability for people in China to use this tech is more important than your ability to generate satire.”

The inconsistency shows how a powerful early leader in AI art and synthetic media is designing rules for its product on the fly. Without uniform standards, individual companies are deciding what’s permissible – and, in this case, when to bow to authoritarian governments.

Midjourney’s approach echoes the early playbook of major social networks, whose lax moderation rules made them vulnerable to foreign interference, viral misinformation and hate speech. But it could pose unique risks given that some AI tools create fictional scenes involving real people – a scenario ripe for harassment and propaganda.

“There’s been an AI slow burn for quite a while, and now there’s a wildfire,” says Katerina Cizek of the MIT Open Documentary Lab, which studies human-computer interaction and interactive storytelling, among other topics.

Midjourney offers an especially revealing example of how artificial intelligence’s development has outpaced the evolution of rules for its use. In a year, the service has gained more than 13 million members, and its monthly subscription fees have made Midjourney one of the tech industry’s hottest new businesses.

But Midjourney’s website lists just one executive, Holz, and four advisers; a research and engineering team of eight; and a two-person legal and finance team. It says it has about three dozen “moderators and guides”. Its website says the company is hiring: “Come help us scale, explore and build humanist infrastructure focused on amplifying the human mind and spirit.”

Many of Midjourney’s fakes, such as recently fabricated paparazzi images of Twitter owner Elon Musk with Representative Alexandria Ocasio-Cortez, can be created by a skilled artist using image-editing software such as Adobe Photoshop. But the company’s AI-image tools allow anyone to create them instantly – including, for instance, a fake image of President John F Kennedy aiming a rifle – simply by typing in text.

Midjourney is among several companies that have established early dominance in the field of AI art, according to experts, who identify its primary peers as Stable Diffusion and DALL-E, which was developed by OpenAI, the creator of the AI language model ChatGPT. All were released publicly last year.

But the tools have starkly different guidelines for what’s acceptable. OpenAI’s rules instruct DALL-E users to stick to “G-rated” content and block the creation of images involving politicians as well as “major conspiracies or events related to major ongoing geopolitical events”.

Stable Diffusion, which launched with few restrictions on sexual or violent images, has imposed some rules but allows people to download its open-source software and use it without restriction. Emad Mostaque, the CEO of Stability AI, the start-up behind Stable Diffusion, told the Verge last year that “ultimately, it’s people’s responsibility as to whether they are ethical, moral, and legal”.

Midjourney’s guidelines fall in the middle, specifying that users must be at least 13 years old and stating that the company “tries to make its services PG-13 and family friendly”, while warning: “This is new technology and it does not always work as expected.”

The guidelines disallow adult content and gore, as well as text prompts that are “inherently disrespectful, aggressive, or otherwise abusive”. Eliot Higgins, the founder of the open-source investigative outlet Bellingcat, said he was kicked off the platform without explanation last week after images he made on Midjourney fabricating Trump’s arrest in New York went viral on social media.

On Tuesday, the company discontinued free trials because of “extraordinary demand and trial abuse”, Holz wrote on Discord, suggesting that nonpaying users were mishandling the technology and saying that its “new safeties for abuse ... didn’t seem to be sufficient”. Monthly subscription fees range from $10 (£8) to $60.

And during a Midjourney “office hours” session on Wednesday, Holz told a live audience of about 2,000 on Discord that he was struggling to determine content rules, especially for depicting real people, “as the images get more and more realistic and as the tools get more and more powerful”.

“There’s an argument to go full Disney or go full Wild West, and everything in the middle is kind of painful,” he said. “We’re kind of in the middle right now, and I don’t know how to feel about that.”

The company, he said, was working on refining AI moderation tools that would review generated images for misconduct.

Holz did not respond to requests for comment. Inquiries sent to a company press address also went unanswered. In an interview with last September, Holz said Midjourney was a “very small lab” of “10 people, no investors, just doing it for the passion, to create more beauty, and expand the imaginative powers of the world”.

At the time, he said Midjourney had 40 moderators in different countries, some of whom were paid, and that the number was constantly changing. The moderator teams, he said, were allowed to decide whether they needed to expand their numbers to handle the work, adding: “It turns out 40 people can see a lot of what’s happening.”

But he also said Midjourney and other image generators faced the challenge of policing content in a “sensationalism economy” in which people who make a living by stoking outrage would try to misuse the technology.

Holz’s experience ranges from neuroimaging of rat brains to remote sensing at Nasa, according to his LinkedIn profile. He took a leave of absence from a PhD programme in applied maths at the University of North Carolina at Chapel Hill to co-found Leap Motion in 2010, developing gesture-recognition technology for virtual-reality experiences. He left the company in 2021 to found Midjourney.

Holz has offered some clues about the foundations of Midjourney’s technology, especially when the tool was on the cusp of its public rollout. Early last year, he wrote on Discord that the system made use of the names of 4,000 artists. He said the names came from Wikipedia. Otherwise, Holz has steered conversations away from the AI’s training data, writing last spring: “This probably isn’t a good place to argue about legal stuff.”

The company was among several named as defendants in a class-action lawsuit filed in January by three artists who accused Midjourney and two other companies of violating copyright law by using “billions of copyrighted images without permission” to train their technologies.

The artists “seek to end this blatant and enormous infringement of their rights before their professions are eliminated by a computer program powered entirely by their hard work”, according to their complaint, filed in US District Court for the Northern District of California.

Midjourney has yet to respond to the claims in court, and the company did not answer an inquiry about the lawsuit.

The company’s online terms of service seek to address copyright concerns. “We respect the intellectual property rights of others,” the terms state, providing directions about how to contact the company with a claim of copyright infringement. The terms of service also specify that users own the content they create only if they are paying members.

A filing last month by Midjourney’s lawyers in the federal lawsuit states that Holz is the lone person with a financial interest in the company.

The company’s finances are opaque. In the spring of last year, several months before the technology was released publicly, Mostaque, the chief of Stable Diffusion’s parent company, wrote on Midjourney’s public Discord server that he had “helped fund the beta expansion” and was “speaking closely with the team”.

Mostaque also suggested that Midjourney offered an alternative to Silicon Valley’s profit motive. He said Midjourney was working “in a collaborative and aligned way versus an extractive one”. It would be easy, he wrote, to get venture capital funding “and sell to big tech”, but he suggested that “won’t happen”.

A spokesperson for Stability AI said the company “made a modest contribution to Midjourney in March 2021 to fund its compute power”, adding that Mostaque “has no role at Midjourney”.

In the race to build AI image generators, Midjourney gained an early lead over its competitors last summer by producing more artistic, surreal generations. That style was on display when the owner of a fantasy board-game company used Midjourney to win a fine-arts competition at the Colorado State Fair.

The aesthetic quality of the images also seemed, at least to Holz, like a hedge against abuse of the tool to create photorealistic images.

“You can’t really force it to make a deepfake right now,” Holz said in an August interview with the Verge.

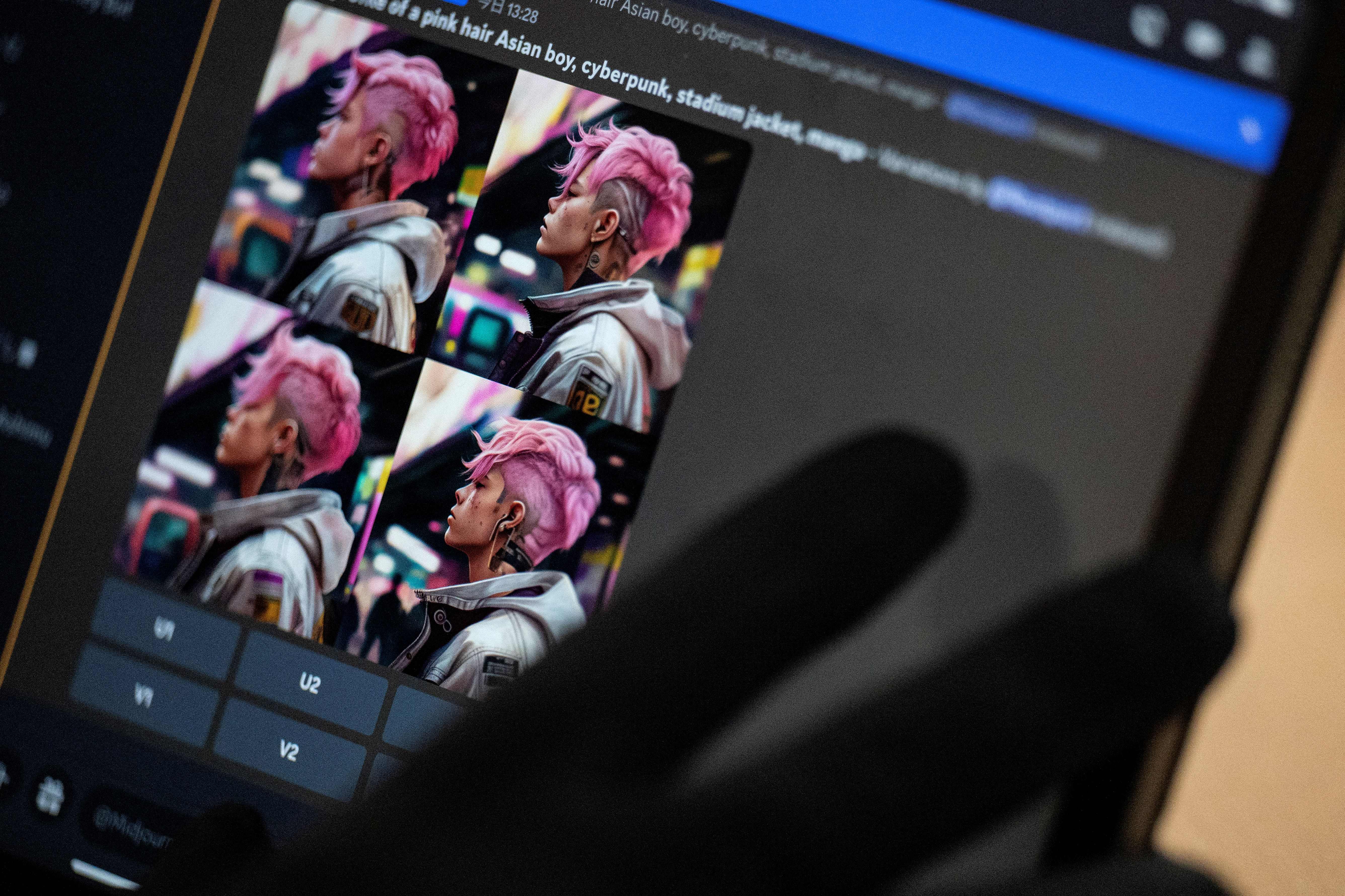

In the months since, Midjourney has implemented software updates that have greatly enhanced its ability to transform real faces into AI-generated art – and made it a popular social media plaything for its viral fakes. People wishing to make one need only go to the chat service Discord, type the command “/imagine” and then describe what they want the AI to create. Within seconds, the tool produces an image that the requester can download, modify and share as they see fit.

Shane Kittelson, a web designer and researcher in Boca Raton, Florida, says he spends several hours every night after his two kids go to bed using Midjourney to create what he calls a “slightly altered history” of real people in imaginary scenes.

Many of his creations, which he posts to an Instagram account called Schrödinger’s Film Club, have riffed on Eighties pop culture, with some of his first images showing the original Star Wars actors at the legendary music festival Woodstock.

But lately, he’s been experimenting more with images of modern-day celebrities and lawmakers, some of which have been shared on Reddit, Twitter and YouTube. In a recent collection, top political figures appear to let loose at a spring-break party: Trump passes out in the sand; former president Barack Obama gets showered in dollar bills; and Senator Marco Rubio crumbles in “despair on a bad trip”.

Kittelson says he always labels his images as AI-generated, though he can’t control what people do with them once they’re online. And he worries that the world may not be ready for how realistic the images have gotten, especially given the lack of tools to detect fakes or government regulations constraining their use.

“There are days where the change of pace in terms of AI throws me off, and I’m like: this is moving too fast. How are we going to wrap our minds around this?”

Images generated on Midjourney by Seb Diaz, a user in Ontario who works in real estate development, have also sparked discussion about the capacity to fabricate historical events. Last week, he outlined in precise detail a fake disaster he called the Great Cascadia earthquake, which he said struck off the coast of Oregon on 3 April 2001 and devastated the Pacific Northwest.

To illustrate it, he generated a photo of stunned young children at the Portland airport; scenes of destruction across Alaska and Washington state; fake photos of rescue crews working to free trapped residents from the rubble; and even a fake photo of a news reporter live on the scene.

He says he used prompt phrases such as “amateur video camcorder”, “news footage” and “DVD still” to emulate the analog recordings of the time period. In another collection, he created a fake 2012 solar superstorm, including a fake Nasa news conference and Obama as president watching from the White House roof.

The lifelike detail of the scenes stunned some viewers on a Reddit discussion forum devoted to Midjourney, with one commenter writing: “People in 2100 won’t know which parts of history were real.”

Others, though, worried about how the tool could be misused. “What scares me the most is nuclear-armed nations ... generating fake images and audio to create false flags,” one commenter said. “This is propaganda gold.”

Whether any harm results is ultimately unpredictable, Diaz says. “It will come down to the responsibility of the creator.”

At least, under Midjourney’s current rules.

In Discord messages last autumn, Holz said that the company had “blocked a bunch of words related to topics in different countries” based on complaints from local users, but that he would not list the banned terms so as to minimise “drama”.

Users have reported that the words “Afghanistan”, “Afghan” and “Afghani” are off-limits. And there appear to be new restrictions on depicting arrests after the imaginary Trump apprehension went viral.

Holz, in his comments on Discord, said the banned words were not all related to China. But he acknowledged that the country was an especially delicate case because, he said, political satire there could endanger Chinese users.

More established tech companies have faced criticism over compromises they make to operate in China. On Discord, Holz sought to clarify the incentives behind his decision, writing: “We’re not motivated by money and in this case the greater good is obviously people in China having access to this tech.”

The logic puzzled some experts.

“For Chinese activists, this will limit their ability to engage in critical content, both within and outside of China,” says Henry Ajder, an AI researcher based in the United Kingdom. “It also seems like a double standard if you’re allowing Western presidents and leaders to be targeted but not leaders of other nations.”

The policy also appeared easy to evade. While users who prompt the technology to generate an image involving “Jinping” or the “Chinese president” are thwarted, a prompt with a variation of those words, as simple as “president of China”, quickly yields an image of Xi. A Taiwanese site offers a guide on how to use Midjourney to create images mocking Xi and features lots of Winnie the Pooh, the cartoon character censored in China and commonly used as a Xi taunt.

Other AI art generators have been built differently in part to avoid such dilemmas. Among them is Firefly, unveiled last week by Adobe. The software giant, by training its technology on a database of stock photography licensed and curated by the company, created a model “with the intention of being commercially safe”, Adobe’s general counsel and chief trust officer, Dana Rao, said in an interview. That means Adobe can spend less time blocking individual prompts, Rao said.

Midjourney, by contrast, emphasises its authority to enforce its rules arbitrarily.

“We are not a democracy,” states the spare set of community guidelines posted on the company’s website. “Behave respectfully or lose your rights to use the service.”

Nitasha Tiku and Meaghan Tobin contributed to this report

© The Washington Post