Robots can now learn new skills by watching videos

‘This work could enable robots to learn from the vast amount of internet videos available,’ robotics researcher says

Engineers have built robots that are capable of learning new skills by watching videos of humans performing them.

A team from Carnegie Mellon University (CMU) developed a model that allowed robots to perform household tasks, such as opening drawers and picking up knives, after watching a video of the action.

The Vision-Robotics Bridge (VRB) method requires no human oversight and can teach a robot a new skill in as little as 25 minutes.

“The robot can learn where and how humans interact with different objects through watching videos,” said Deepak Pathak, an assistant professor at CMU’s Robotics Institute.

“From this knowledge, we can train a model that enables two robots to complete similar tasks in varied environments.”

The VRB model allows a robot to learn how to perform the actions shown in a video, even when its own surroundings differ from the setting in the video.

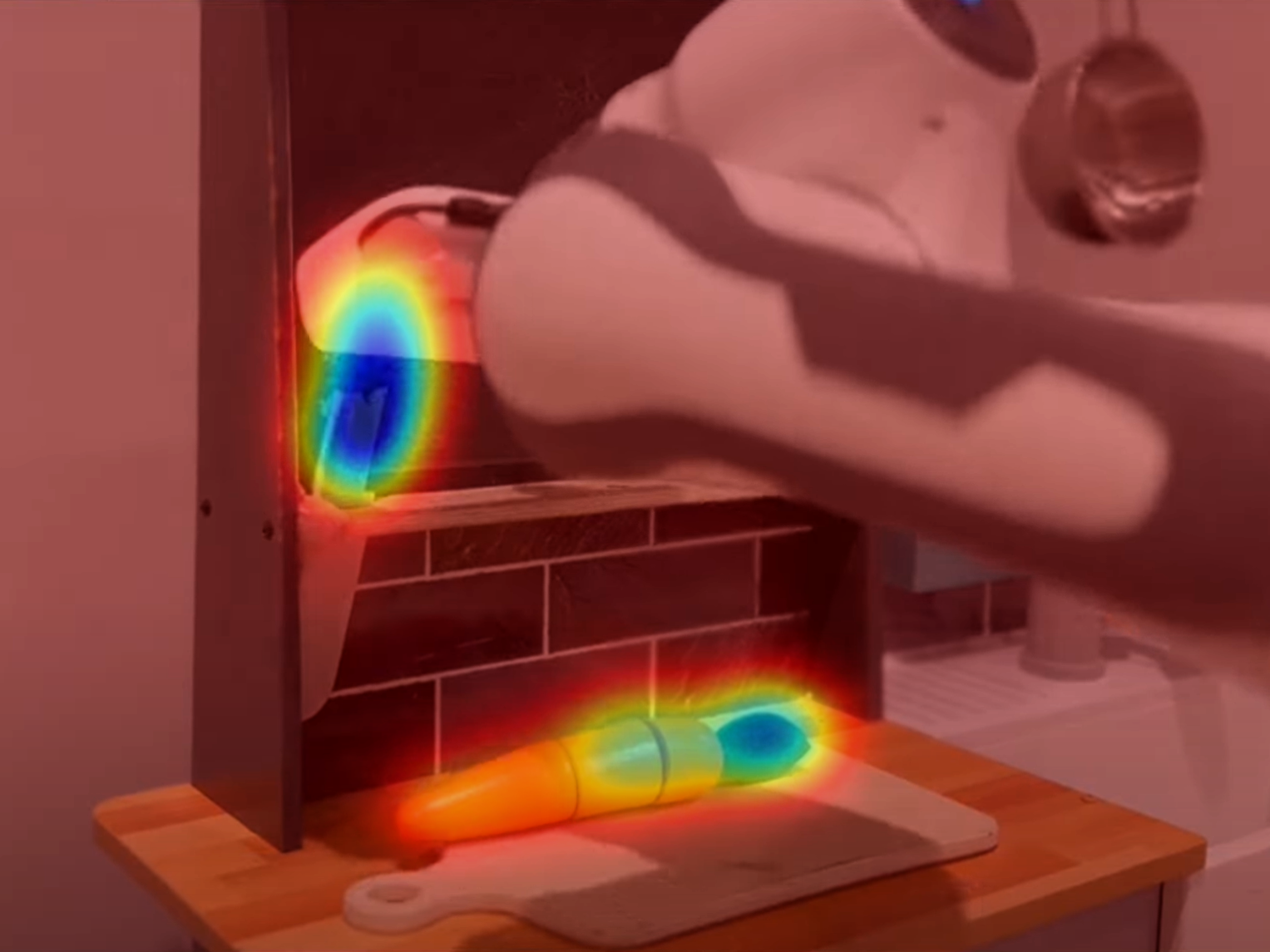

It does this by identifying contact points, such as the handle of a drawer or a knife, and working out which motion to make in order to complete the task.

“We were able to take robots around campus and do all sorts of tasks,” said Shikhar Bahl, a PhD student in robotics at CMU.

“Robots can use this model to curiously explore the world around them. Instead of just flailing its arms, a robot can be more direct with how it interacts.

“This work could enable robots to learn from the vast amount of internet and YouTube videos available.”

The robots involved in the research successfully learned 12 new tasks over 200 hours of real-world testing.

The tasks were all relatively simple, such as opening cans and picking up a phone. The researchers now plan to develop the VRB system further so that robots can perform multi-step tasks.

The research was detailed in a paper titled ‘Affordances from human videos as a versatile representation for robotics’, which will be presented this month at the Conference on Computer Vision and Pattern Recognition (CVPR) in Vancouver, Canada.
