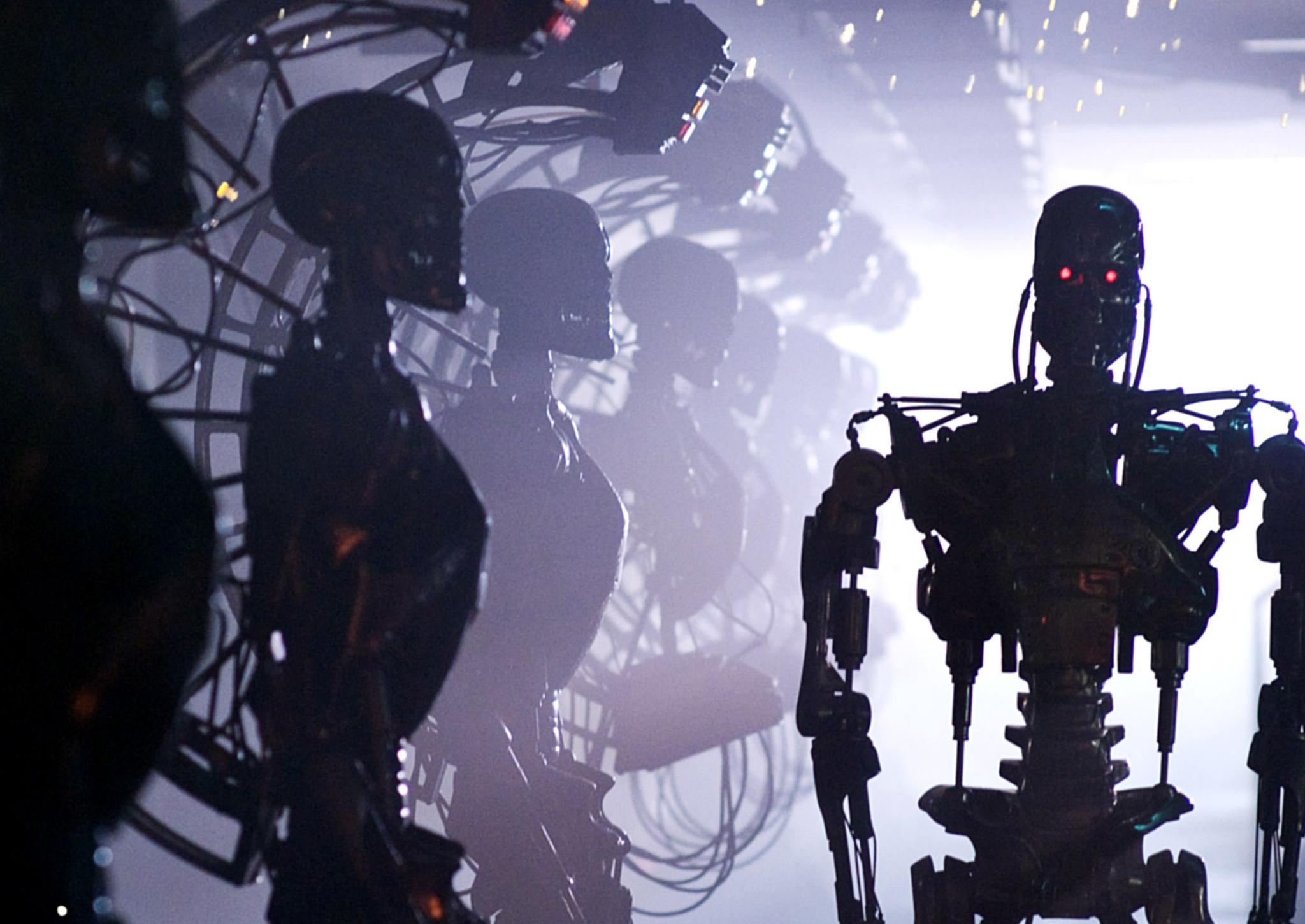

World calls for international treaty to stop killer robots before rogue states acquire them

'By permitting fully autonomous weapons to be developed, we are crossing a moral line', Human Rights Watch warns

There is widespread public support for a ban on so-called “killer robots”, which campaigners say would “cross a moral line” after which it would be difficult to return.

Polling across 26 countries found over 60 per cent of the thousands asked opposed lethal autonomous weapons that can kill with no human input, and only around a fifth backed them.

The figures showed public support was growing for a treaty to regulate these controversial new technologies - a treaty which is already being pushed by campaigners, scientists and many world leaders.

However, a meeting in Geneva at the close of last year ended in a stalemate after nations including the US and Russia indicated they would not support the creation of such a global agreement.

Mary Wareham of Human Rights Watch, who coordinates the Campaign to Stop Killer Robots, compared the movement to successful efforts to eradicate landmines from battlefields.

However, this time she said the aim was to achieve victory before autonomous weapons get out of control and into the wrong hands.

“The efforts to deal with landmines were reactive after the carnage had occurred. We are calling for a pre-emptive ban,” she said.

She added that, unless kept in check, these technologies could end up being employed not just by the military but by police forces and border guards as well.

Ms Wareham discussed these ideas with other experts in the field at the American Association for the Advancement of Science meeting in Washington DC.

She said scientists and tech companies such as Google had already been incredibly proactive in demonstrating their support for the cause.

UN Secretary-General Antonio Guterres added his voice in November, calling lethal autonomous weapons systems “politically unacceptable and morally repugnant” and urging states to prohibit them.

Ms Wareham said critics of these developments recognised killer robots as AI at its very worst.

“The AI experts have said that even if ‘responsible’ militaries like the US and UK say we will use these things responsibly, once they are out where you cannot control it they will proliferate to all sorts of governments as well as non-state groups,” she said.

One rationale often given for the development of these machines is that they could be more precise than existing weapons and therefore cause less human suffering. However, as these weapons systems have not been developed, this remains untested.

Russia, Israel, South Korea and the US indicated at the annual meeting of the Convention on Conventional Weapons they would not support negotiations for a new treaty.

All of these nations, as well as China, are investing significantly in weapons with decreasing levels of human control.

Even the UK is allegedly dabbling with more autonomous weapons, with the planned development of a “drone swarm” capable of flying and locating targets by itself.

Ms Wareham said ministers should carefully consider the implications of such actions before hurrying to keep up with nations like the US.

“By permitting fully autonomous weapons to be developed, we are crossing a moral line,” she concluded.

“We are not just concerned about use in warfare, but use in other circumstances, policing, law enforcement, border control, there are many other ways in which a fully autonomous weapons could be used in the future.”
