Facebook creates ‘reactive’ profile pictures that ‘come alive’ but results look creepy

'In this work, we are interested in animating faces in human portraits, and in particular controlling their expressions'

Facebook researchers want to make your profile pictures “come alive” to make it look like you’re expressing different emotions.

They say the “Harry Potter-style” technique, which manipulates your face and controls your expressions, can be used to create strange-sounding new “reactive profiles”.

On a reactive profile, the still picture of your face would move to match the reaction you give to an update you interact with.

The “Haha” reaction, for instance, will cause your profile picture to look like it’s laughing. The same rule applies to “Wow”, “Sad” and “Angry”.

Furthermore, the researchers, who mocked up a reactive Facebook profile as part of the project, say their technique can be used to animate your full head and upper body, rather than just your face.

It’s an unusual project, which could result in more people reacting to Facebook posts, even if it’s just to see their own profile pictures come to life.

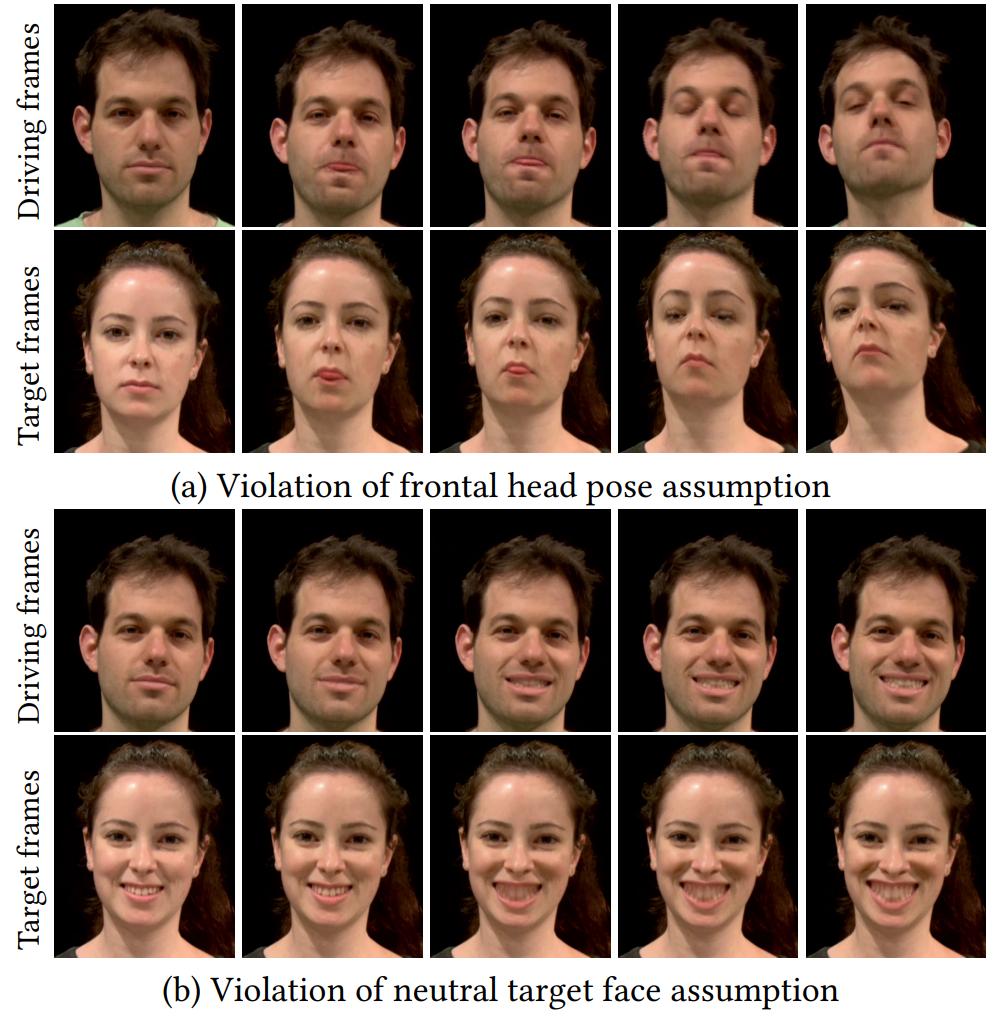

However, many of the images created by the technique look creepy and not quite right, provoking a kind of "uncanny valley" effect.

“In this work, we are interested in animating faces in human portraits, and in particular controlling their expressions,” wrote Facebook researchers Johannes Kopf and Michael F. Cohen, together with Hadar Averbuch-Elor and Daniel Cohen-Or of Tel Aviv University, in a paper.

“As our results illustrate, our technique enables bringing a still portrait to life, making it seem as though the person is breathing, smiling, frowning, or for that matter any other animation that one wants to drive with. We apply our technique on highly varying facial images, including internet selfies, old portraits and facial avatars.

“Additionally, we demonstrate our results in the context of reactive profiles – a novel application which resembles the moving portraits from Harry Potter’s magical world, where people in photographs move, wave, etc.”

The researchers say the expressions come from “driving videos” of a different person’s face, and that they can be transferred to pictures of anyone else’s face using “2D warps that imitate the facial transformations”.
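To show what “transferring” an expression between two faces can mean, here is a minimal sketch. It is not the researchers’ code. It assumes that each face has already been reduced to a matching list of 2D facial landmarks (stored as complex numbers `x + yj`). It first fits a similarity transform (rotation, uniform scale and shift) that aligns the driving person’s neutral face with the target’s. It then applies the driving face’s landmark movements, rotated and rescaled, to the target. The function names are hypothetical, and the step that warps the actual pixels to follow the moved landmarks is left out.

```python
# Hypothetical sketch: move a target face's landmarks the way a driving face's
# landmarks moved, after aligning the two faces with a similarity transform.
# Landmarks are complex numbers x + yj, listed in the same order for every face.


def fit_similarity(src: list[complex], dst: list[complex]) -> tuple[complex, complex]:
    """Least-squares fit of z -> a*z + b mapping src onto dst.

    The complex factor a encodes rotation and uniform scale; b is the shift.
    """
    n = len(src)
    src_c = sum(src) / n  # centroid of the source landmarks
    dst_c = sum(dst) / n  # centroid of the destination landmarks
    num = sum((s - src_c).conjugate() * (d - dst_c) for s, d in zip(src, dst))
    den = sum(abs(s - src_c) ** 2 for s in src)
    if den == 0:
        raise ValueError("source landmarks must not all coincide")
    a = num / den
    b = dst_c - a * src_c
    return a, b


def transfer_expression(
    drive_neutral: list[complex],
    drive_expr: list[complex],
    target_neutral: list[complex],
) -> list[complex]:
    """Apply the driving face's landmark displacements to the target face."""
    a, _ = fit_similarity(drive_neutral, target_neutral)
    # Only rotation and scale (a) act on a displacement vector, not the shift.
    return [
        t + a * (e - n)
        for t, n, e in zip(target_neutral, drive_neutral, drive_expr)
    ]
```

For example, suppose the target face is the driving face enlarged to twice its size (`[10, 12, 10+2j]` versus `[0, 1, 1j]`), and one driving landmark moves by `0.1j`. The corresponding target landmark then moves by twice that amount, to `12+0.2j`. Aligning the faces before copying the motion is what lets one actor’s expression fit a differently sized or tilted face.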

Their technique also adds “fine-scale dynamic details”, such as creases and wrinkles, and “hallucinates” areas that may be hidden, such as the inner mouth.

“We built on the fact that there is a significant commonality in the way humans ‘warp’ their faces to make an expression. Thus, transferring local warps between aligned faces succeeds in hallucinating facial expressions,” the researchers say.

“As we have shown, the internal features of the mouth of the driving video are transplanted to the target face to compensate for the disoccluded region.

“Although this transplanting violates our goal of keeping the identity of the target face features, the effect of this violation is only secondary, since humans in general are not as sensitive to teeth as a recognition cue.”
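The mouth “transplant” described in that quote can be pictured as a masked blend. The sketch below is an illustration under stated assumptions, not the paper’s implementation. It assumes the driving frame has already been aligned to the warped target. It also assumes that a hypothetical `alpha` mask marks the newly exposed region, such as the inner mouth, with 1.0, the rest of the face with 0.0, and uses in-between values to soften the seam. Images here are small grayscale grids of floats.

```python
# Hypothetical sketch of "transplanting" a disoccluded region (e.g. the inner
# mouth): pixels come from the aligned driving frame where alpha is 1.0, from
# the warped target where alpha is 0.0, and are mixed in between.

Image = list[list[float]]


def transplant_region(target: Image, driving: Image, alpha: Image) -> Image:
    """Return alpha * driving + (1 - alpha) * target, pixel by pixel.

    The inputs are not modified; a new image is returned.
    """
    if not (len(target) == len(driving) == len(alpha)):
        raise ValueError("target, driving and alpha must have the same height")
    out: Image = []
    for t_row, d_row, a_row in zip(target, driving, alpha):
        if not (len(t_row) == len(d_row) == len(a_row)):
            raise ValueError("target, driving and alpha must have the same width")
        out.append([a * d + (1.0 - a) * t for t, d, a in zip(t_row, d_row, a_row)])
    return out
```

A soft mask is used instead of a hard cut-out because a hard edge around the transplanted teeth would be easy to spot. As the researchers note, viewers rarely rely on teeth to recognise someone, so borrowing them from another person costs little.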

In the future, the researchers plan to automatically match people with driving videos of others who have similar-looking faces.

They’re also considering using the technique to animate faces that aren’t directly facing the camera in pictures.

“We expect other fun applications for the work we have shown here,” the paper concludes. “One can imagine coupling this work with an AI to create an interactive avatar starting from a single photograph.”
