ChatGPT creators release even more powerful version

The creators of ChatGPT have revealed a new model they say is even more powerful than the already viral chatbot.

The new tool, GPT-4, is available in some versions of ChatGPT and to paying customers of OpenAI, the company that created both systems.

The new tool includes a range of upgrades on the existing system, said OpenAI chief executive Sam Altman: it is able to accept images as an input, is more creative, “hallucinates” much less and is less biased. The new system is able to pass a bar exam and scores top marks on AP exams.

But it is also “still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it”, Mr Altman said.

The text version of GPT-4 will initially launch within ChatGPT+, the recently launched premium version of the system. It will also be available through the API that OpenAI offers to developers, and companies such as Duolingo have already announced they will be integrating it into their apps.

The image version of the tool will initially launch with one partner, presumably so that it can be tested and refined. It will be available at first through Be My Eyes, a mobile app that helps people who are blind or have low vision, where it will allow people to ask questions about an image.

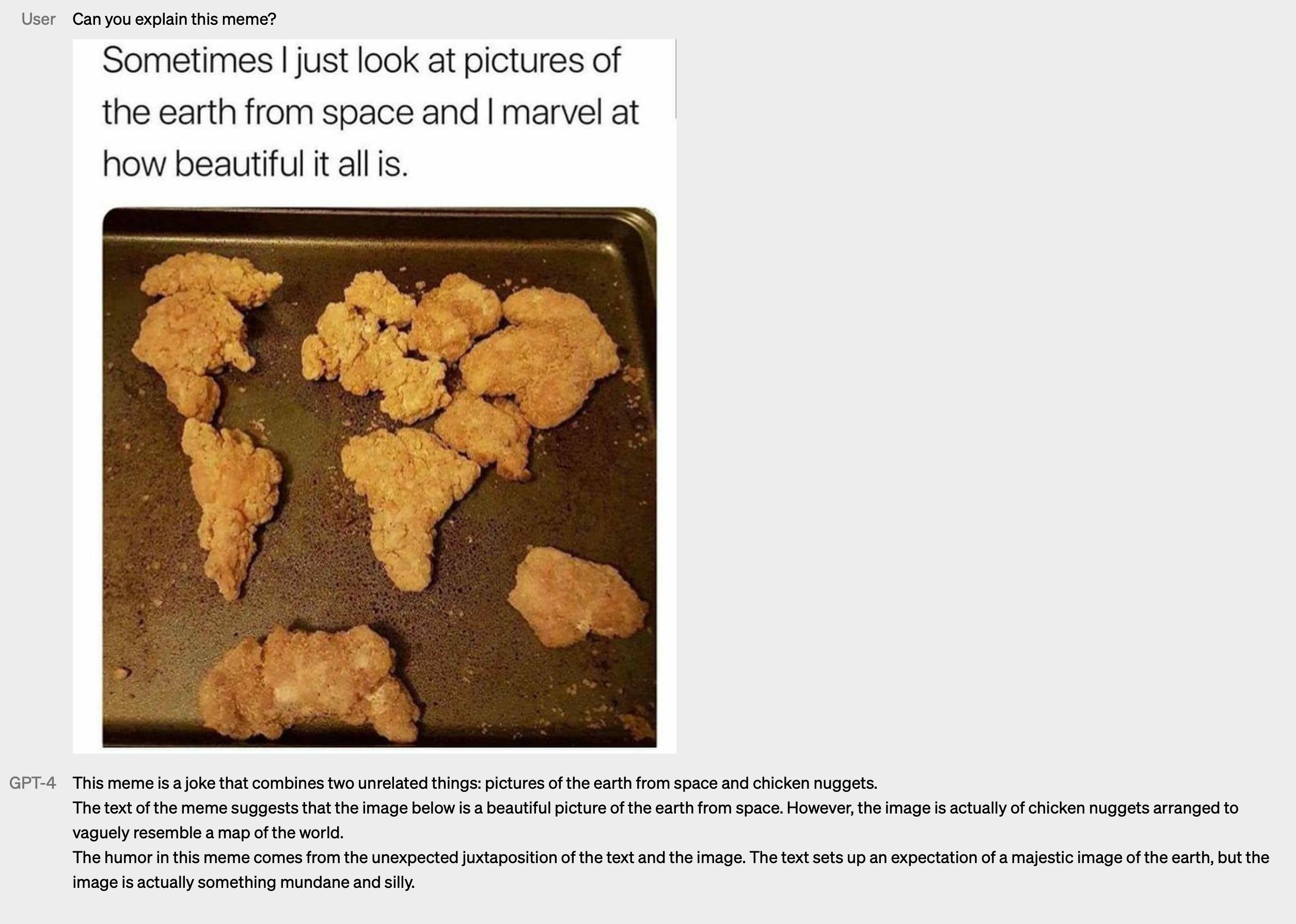

The image part of the system functions in much the same way as text inputs, but could allow for more developed use cases, such as analysing graphs or answering questions about diagrams. It is also able to understand humour: OpenAI said that it was fed a range of humorous images, such as memes and unusual images, and could explain the joke or what was strange about the picture.

As well as its image inputs, users can set rules for the outputs of GPT-4. If users only want to receive responses in Shakespearean verse, for instance, or in a particular data format, they can force the tool to always reply in that style.

OpenAI says that GPT-4 is significantly better than the systems that went before it. It is both more stable and more capable, it said: the previous system scored in the bottom 10 per cent of a simulated bar exam, for instance, while the new one came in the top 10 per cent.

But the difference between the two tools “can be subtle” in a casual conversation, it said. “The difference comes out when the complexity of the task reaches a sufficient threshold—GPT-4 is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5” which came before it, it said.

It still has many limitations, however, OpenAI admitted. For instance, it still “hallucinates” facts – a common behaviour among such systems, where they will confidently state falsehoods – though it does so much less than its predecessor.

As such, the company warned that people should still take “great care” when using such systems, “particularly in high-stakes contexts”.

The additional capabilities of the new system also bring new kinds of risk, OpenAI noted, and it said that it had “engaged over 50 experts from domains such as AI alignment risks, cybersecurity, biorisk, trust and safety, and international security to adversarially test the model”. Their warnings were integrated into the new model – it is now better at refusing to give information about how to make dangerous chemicals, for instance.

Though OpenAI said that it hopes at some point to offer “some amount of free GPT-4 queries” to people without subscriptions, it did not commit to doing so, and warned that it could eventually charge more for the tool. Such AI systems require large amounts of computing power, which can make even simple questions very expensive to answer.
