How artificial intelligence could help us to win arguments

It is feared that algorithms are locking humans into narrow-minded points of view

The ability to argue, to express our reasoning to others, is one of the defining features of what it is to be human.

Argument and debate form the cornerstones of civilised society and intellectual life. Processes of argumentation run our governments, structure scientific endeavour and frame religious belief. So should we worry that new advances in artificial intelligence are taking steps towards equipping computers with these skills?

As technology reshapes our lives, we are all getting used to new ways of working and new ways of interacting. Millennials have known nothing else.

Governments and judiciaries are waking up to the potential offered by technology for engaging citizens in democratic and legal processes. Some individual politicians are further ahead of the game in understanding the enormous role that social media plays in election processes. But there are profound challenges.

One is nicely set out by Upworthy chief executive Eli Pariser in his TED Talk. In it he explains how we are starting to live in “filter bubbles”: what you see when you search a given term on Google is not necessarily the same as what I see when I search the same term.

Media organisations from Fox News to, most recently, the BBC, are personalising content, with ID and login being used to select which stories are featured most prominently. The result is that we risk locking ourselves into echo chambers of like-minded individuals, while our arguments become more one-sided and less balanced, and our understanding of other viewpoints shrinks.

Another concern is the way in which news and information, though ever more voluminous, is becoming ever less reliable – accusations and counter-accusations of “fake news” are now commonplace.

In the face of such challenges, skills of critical thinking are more vital now than they have ever been – the ability to judge and assess evidence quickly and efficiently, to step outside our echo chamber and think about things from alternative points of view, to integrate information, often in teams, balance arguments on either side and reach robust, defensible conclusions. These are the skills of argument that have been the subject of academic research in philosophy for more than 2,000 years, since Aristotle.

The Centre for Argument Technology (ARG-tech) at the University of Dundee is all about taking and extending theories from philosophy, linguistics and psychology that tell us about how humans argue, how they disagree, and how they reach consensus – and making those theories a starting point for building artificial intelligence tools that model, recognise, teach and even take part in human arguments.

One of the challenges for modern research in the area has been getting enough data. AI techniques such as deep learning require vast amounts of data: carefully reviewed examples that can help to build robust algorithms.

But getting such data is really tough: it takes highly trained analysts hours of painstaking work to tease apart the way in which arguments have been put together from just a few minutes of discourse.

More than 10 years ago, ARG-tech turned to the BBC Radio 4 programme Moral Maze as an example of “gold standard” debate: rigorous, tight argument on emotive, topical issues, with careful and measured moderation. Enormously valuable, that data fed a programme of empirically grounded research into argument technology.

Working with such demanding data has meant that everything from philosophical theory to large-scale data infrastructure has been put to the test. In October, we ran a pilot with BBC Radio’s religion and ethics department to deploy two types of new argument technology.

The first was a set of “analytics”. We started by building an enormous map of each Moral Maze debate, comprising thousands of individual utterances and thousands more connections between the contents of all of those utterances. Each map was then translated into a series of infographics, using algorithms to determine the most central themes (using something similar to Google’s PageRank algorithm). We automatically identified the most divisive issues and where participants stood, as well as the moments in the debate when conflict reached boiling point, how well-supported arguments were, and so on.
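To make the idea of "central themes" concrete, here is a minimal sketch of PageRank-style scoring over a toy argument map. It is not the ARG-tech implementation, which the article does not describe in detail. The node names, the edges, and the choice to point each edge from an utterance to the claim it bears on are all assumptions made purely for illustration.

```python
from collections import defaultdict


def pagerank(edges, damping=0.85, iterations=100, tol=1e-10):
    """Power-iteration PageRank over a directed graph of (source, target) pairs."""
    nodes = sorted({node for edge in edges for node in edge})
    out_links = defaultdict(list)
    for src, dst in edges:
        out_links[src].append(dst)

    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Rank held by nodes with no outgoing links is spread evenly over all nodes.
        dangling = sum(rank[node] for node in nodes if not out_links[node])
        new_rank = {
            node: (1.0 - damping) / n + damping * dangling / n for node in nodes
        }
        # Every other node shares its rank equally among the nodes it points to.
        for src in nodes:
            targets = out_links[src]
            for dst in targets:
                new_rank[dst] += damping * rank[src] / len(targets)
        converged = sum(abs(new_rank[k] - rank[k]) for k in nodes) < tol
        rank = new_rank
        if converged:
            break
    return rank


# Hypothetical debate fragment: each edge runs from an utterance (u1..u4)
# or a claim to the claim it supports or attacks.
toy_debate = [
    ("u1", "privacy"),
    ("u2", "privacy"),
    ("u3", "privacy"),
    ("u3", "security"),
    ("u4", "security"),
    ("privacy", "liberty"),
    ("security", "liberty"),
]

ranks = pagerank(toy_debate)
most_central = max(ranks, key=ranks.get)

# List the nodes from most to least central.
for node, score in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(f"{node:10s} {score:.3f}")
```

In this sketch a claim scores highly when many utterances, or other highly ranked claims, point at it, which is one plausible reading of a theme being "central" to a debate. A real argument map would also need to distinguish support from conflict, which plain PageRank ignores.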

The result, published at bbc.arg.tech in conjunction with the Moral Maze, presents, for the first time, an evidence-based way of understanding what really happens in a debate.

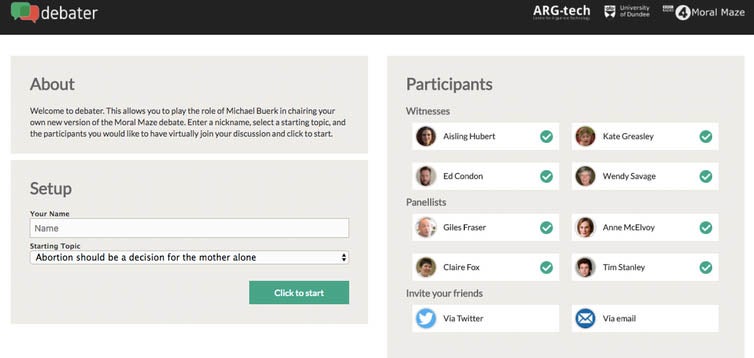

The second was a tool called “debater”, which allows you to take on the role of the chair of the Moral Maze and run your own version. It takes the arguments offered by each participant and allows you to navigate them, following your own nose for a good argument.

Both aspects aim to offer insight and encourage better-quality, more reflective arguing. On the one hand, the work makes it possible to summarise how to improve skills of arguing, driven by evidence in the data of what actually works.

On the other is the opportunity to teach those skills explicitly: a Test Your Argument prototype deployed on the BBC Taster site uses examples from the Moral Maze to explore a small number of arguing skills and lets you pit your wits directly against the machine.

Ultimately, the goal is not to build a machine that can beat us at an argument. Much more exciting is the potential to have AI software contribute to human discussion – recognising types of arguments, critiquing them, offering alternative views and probing reasons are all things that are now within the reach of AI.

And it is here that the real value lies – having teams of arguers, some human, some machine, working together to deal with demanding, complex situations from intelligence analysis to business management.

Such collaborative, “mixed-initiative” reasoning teams are going to transform the way we think about interacting with AI – and hopefully transform our collective reasoning abilities too.

Chris Reed is a professor of computer science and philosophy at the University of Dundee. This article first appeared on The Conversation (theconversation.com).
