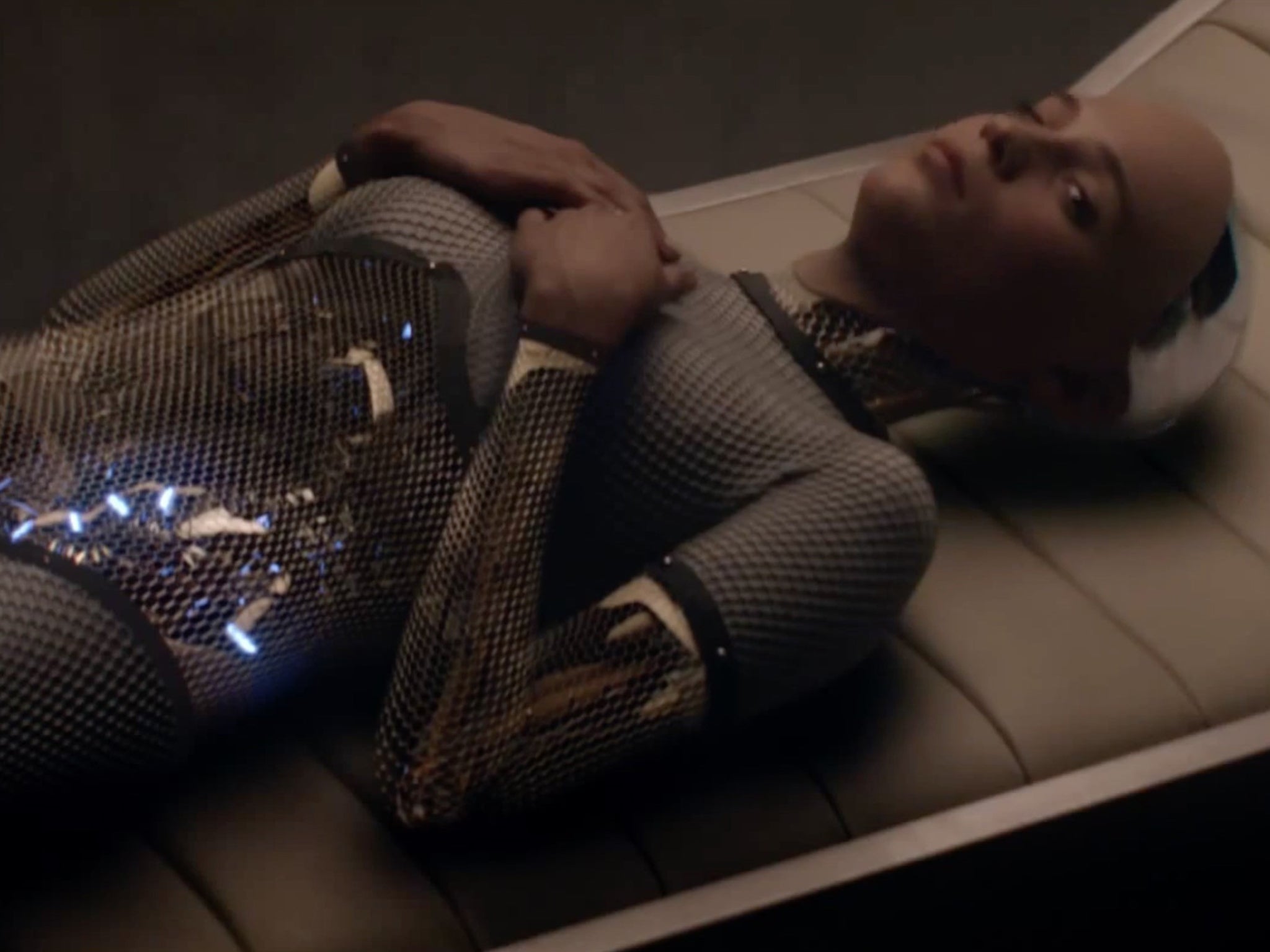

Artificial intelligence could kill us because we're stupid, not because it's evil, says expert

Building artificial intelligence in humanity’s image will make it dangerous, says leading theorist

Artificial intelligence will be a threat because we are stupid, not because it is clever and evil, according to experts.

We could put ourselves in danger by creating artificial intelligence that looks too much like ourselves, a leading theorist has warned. “If we look for A.I. in the wrong ways, it may emerge in forms that are needlessly difficult to recognize, amplifying its risks and retarding its benefits,” writes theorist Benjamin H Bratton in the New York Times.

The warning comes partly in response to similar worries voiced by leading technologists and scientists including Elon Musk and Stephen Hawking. They and hundreds of other experts signed a letter last month calling for research to combat the dangers of artificial intelligence.

But many of those worries seem to come from thinking that robots will care deeply about humanity, for better or worse. We should abandon that idea, Bratton proposes.

“Perhaps what we really fear, even more than a Big Machine that wants to kill us, is one that sees us as irrelevant,” he writes. “Worse than being seen as an enemy is not being seen at all.”

Instead, we should start thinking about artificial intelligence as something more than the image of human intelligence. Tests like the one proposed by Alan Turing, which challenges artificial intelligence to pass as a human, show how limited our thinking is about what kinds of intelligence might exist, according to Bratton.

“That we would wish to define the very existence of A.I. in relation to its ability to mimic how humans think that humans think will be looked back upon as a weird sort of speciesism,” he writes. “The legacy of that conceit helped to steer some older A.I. research down disappointingly fruitless paths, hoping to recreate human minds from available parts. It just doesn’t work that way.”

Other experts in artificial intelligence have pointed out that we don’t tend to build other technology to mimic biology. Planes, for instance, aren’t designed to mimic the flight of birds, and it could be a mistake to model artificial intelligence on the human mind.

Retaining our idea that intelligence only exists as it does in humans could also mean that we force robots to “pass” as a person in a way that Bratton likens to being “in drag as a human”.

“We would do better to presume that in our universe, ‘thinking’ is much more diverse, even alien, than our own particular case,” he writes. “The real philosophical lessons of A.I. will have less to do with humans teaching machines how to think than with machines teaching humans a fuller and truer range of what thinking can be (and for that matter, what being human can be).”
