Artificial intelligence may already be ‘slightly conscious’, AI scientists warn

Sentient machines are ‘one of the most important questions for the long-term future’, researchers say

Advanced forms of artificial intelligence may already be displaying glimmers of consciousness, according to leading computer scientists.

MIT researcher Tamay Besiroglu joined OpenAI co-founder Ilya Sutskever in warning that some machine learning systems may have achieved a limited form of sentience, sparking debate among neuroscientists and AI researchers.

“It may be that today’s large neural networks are slightly conscious,” tweeted Dr Sutskever, who co-founded OpenAI alongside tech billionaire Elon Musk.

The comment drew a strong response from leaders in the field, including Professor Murray Shanahan from Imperial College London, who said: “In the same sense that it may be that a large field of wheat is slightly pasta.”

Mr Besiroglu defended Dr Sutskever’s idea, arguing that such possibilities should not be derided or dismissed.

“Seeing so many prominent machine learning folks ridiculing this idea is disappointing,” he tweeted. “It makes me less hopeful in the field’s ability to seriously take on some of the profound, weird and important questions that they’ll undoubtedly be faced with over the next few decades.”

Attempts to define consciousness have divided neuroscientists and philosophers for centuries, though one broad description is a narrative constructed by our brains, capable of perception through the senses and of imagination. Some definitions also include the ability to experience positive and negative emotions, such as love and hate.

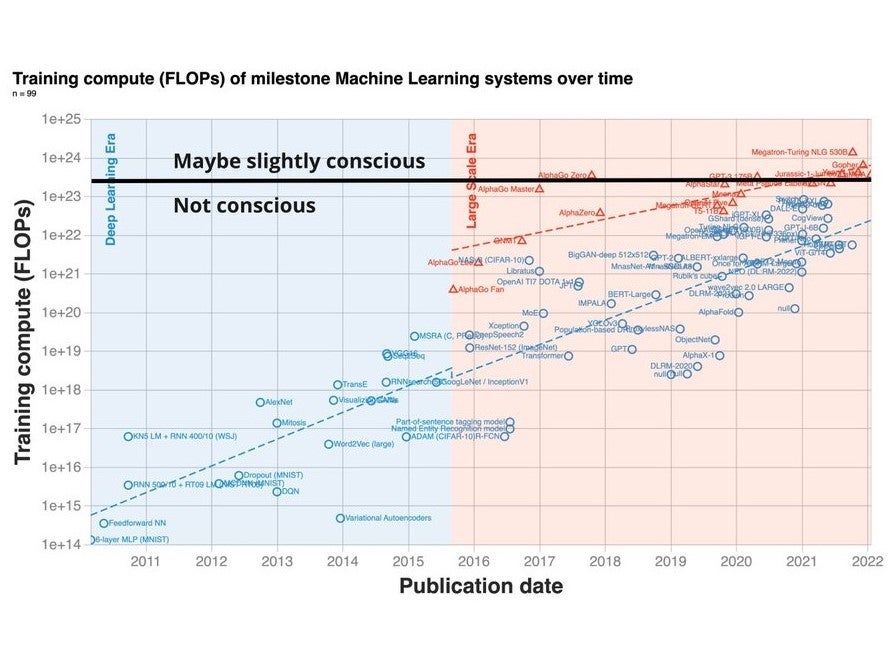

A recent study attempted to track advances in machine learning over the last decade, showing a clear trend of major advances in vision and language.

One of the authors of the research was Mr Besiroglu, who drew a line across a trend graph in a “tongue-in-cheek” attempt to classify which leading AI algorithms could be considered to have some form of consciousness.

OpenAI’s sophisticated text generator GPT-3 was placed in the “maybe slightly conscious” category, as was AlphaGo Zero, developed by Google’s DeepMind AI division.

“I don’t actually think we can draw a clear line between models that are ‘not conscious’ vs. ‘maybe slightly conscious’. I’m also not sure any of these models are conscious,” Mr Besiroglu told Futurism.

“That said, I do think the question could be a meaningful one that shouldn’t just be neglected.”

OpenAI CEO Sam Altman offered his thoughts on his company’s most powerful AI, tweeting: “I think GPT-3 or -4 will very, very likely not be conscious in any way we use that word. If they are, it’s a very alien form of consciousness.”

The prospect of artificial consciousness, rather than simply artificial intelligence, raises ethical and practical questions: if machines achieve sentience, would it be ethically wrong to destroy them or switch them off if they malfunction or are no longer useful?

Jacy Reese Anthis, who researches technology and ethics, described such a dilemma as “one of the most important questions for the long-term future”.
