Apple rolls out message scanning feature to keep children safe from harmful images in UK

Apple is introducing new protections for children on iPhones in the UK.

The feature allows the phone to scan the messages of children and look for images that contain nudity. If it finds them, it will warn children about what they are receiving or sending, offering them extra information or the ability to message someone they trust for help.

The feature has already launched in the US and will arrive in the UK in a software update over the coming weeks. The rollout has been staggered as Apple works to ensure that the feature and the help it offers are tailored to individual countries.

The tool is referred to by Apple as “expanded protections for children” and lives within the Messages app on iOS, iPadOS, watchOS and macOS. It is not turned on by default.

When it is turned on, the Messages app uses artificial intelligence to watch for images that appear to contain nudity. That processing happens on the device, and neither the pictures nor the warnings are uploaded to Apple.

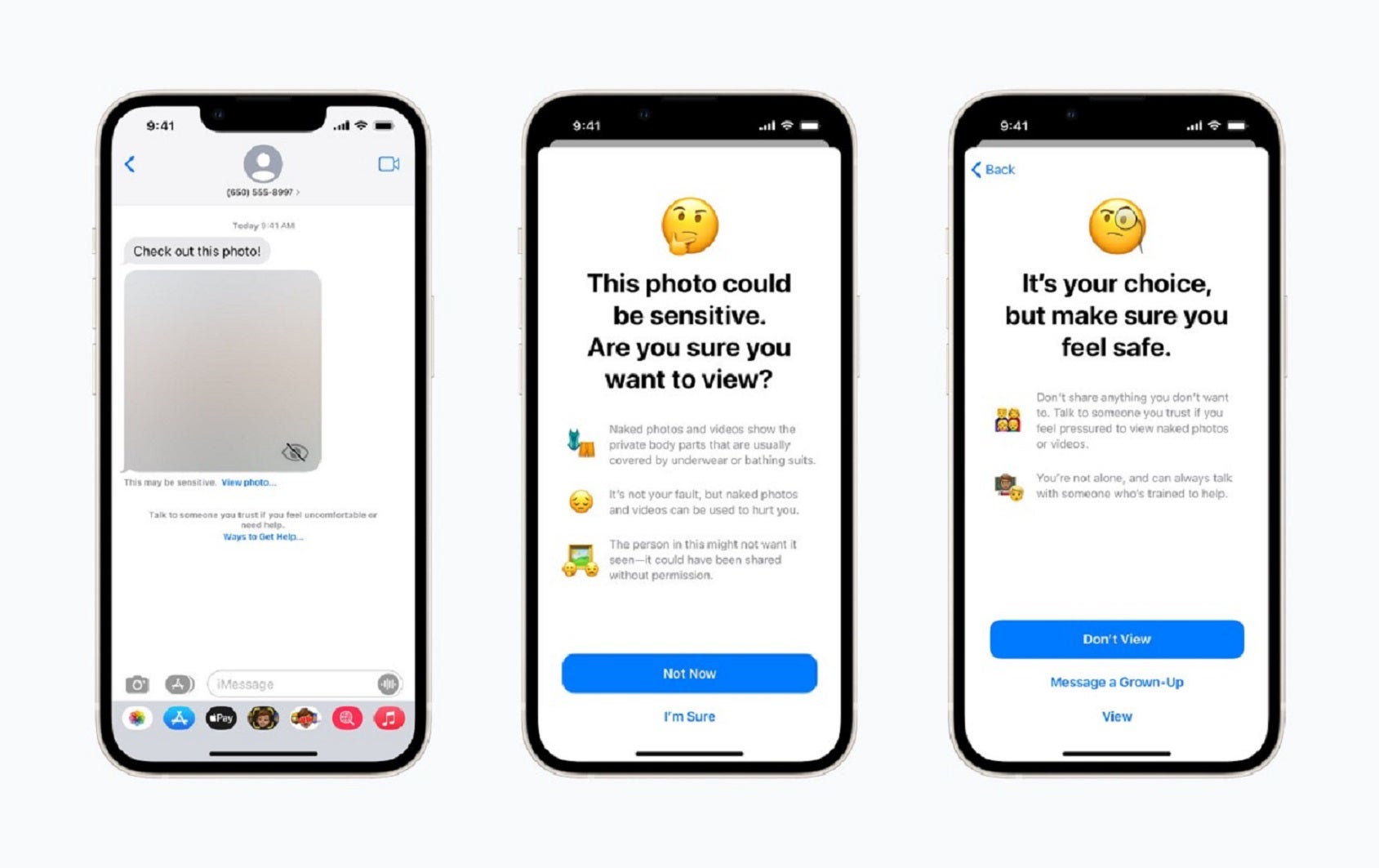

If an image is sent or received by a phone with the feature on, a warning will pop up telling the user that the “photo could be sensitive” and asking whether they are sure they want to see it. The prompt also includes a range of warnings, with messages telling them that “naked photos and videos can be used to hurt you” and that the images might have been taken without the person’s permission.

Users can then choose to keep the image blurred without looking at it, get further information or “message a grown-up” about what they have been sent. Apple stresses that parents or caregivers will not be alerted without a child’s consent.

Apple’s new child protection features have proven controversial since they were first announced in August. Apple has made some tweaks to the way the feature works since its initial announcement, and says that it is built to preserve the privacy of young people.

The Messages feature was also announced alongside another tool that would scan everyone’s photos when they were uploaded to iCloud for storage, looking for known child sexual abuse imagery. After it was announced, that tool was met with concerns that it could be used for other purposes, such as political repression, and Apple said it would pause the rollout until it had gathered more feedback.

That feature, which proved far more controversial and drew far more criticism than the scanning in Messages that is now being rolled out, will not be launching in the UK yet, and has still not been rolled out in the US or any other market.