Digital replicas to be banned under proposed AI law

NO FAKES Act would prevent a person’s likeness from being digitally replicated without their consent during their lifetime, and for 70 years after their death

US lawmakers have introduced a new bill aimed at protecting individuals from AI-generated digital replicas.

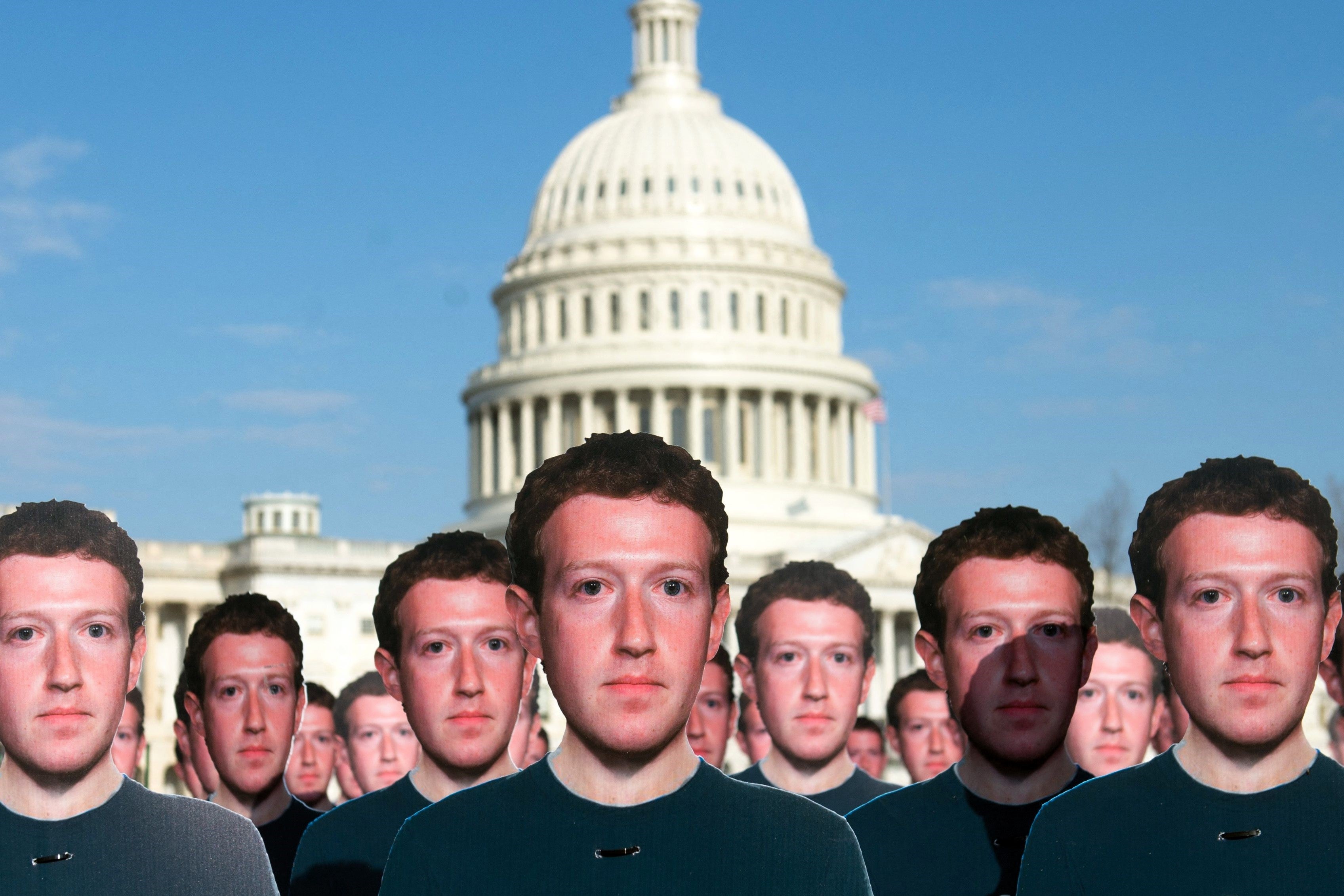

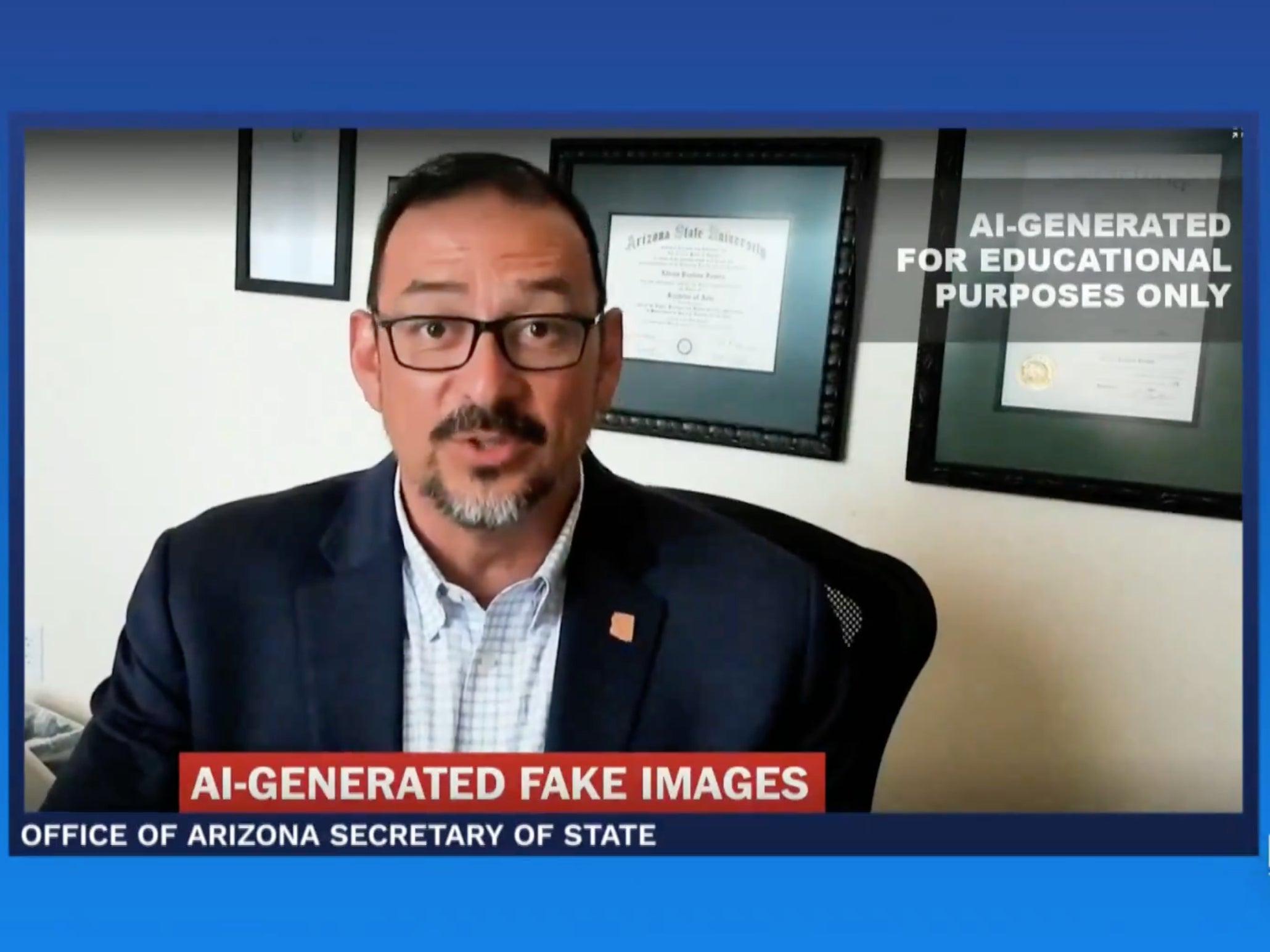

The NO FAKES Act, which stands for ‘Nurture Originals, Foster Art and Keep Entertainment Safe’, comes in response to advanced generative artificial intelligence tools that are able to create realistic deepfakes from a single photo or voice recording.

Introduced by Democratic Senators Chris Coons and Amy Klobuchar and Republican Senators Marsha Blackburn and Thom Tillis this week, the bill would prevent a person’s likeness from being digitally replicated without their consent during their lifetime, and for 70 years after their death.

“Everyone deserves the right to own and protect their voice and likeness, no matter if you’re Taylor Swift or anyone else,” said Senator Coons.

“Generative AI can be used as a tool to foster creativity, but that can’t come at the expense of the unauthorised exploitation of anyone’s voice or likeness.”

The introduction of the bill follows a high-profile Senate hearing in April, which brought to public attention the risks involved with generative AI tools like OpenAI’s Dall-E, Adobe Firefly and Midjourney.

“Americans from all walks of life are increasingly seeing AI being used to create deepfakes in ads, images, music, and videos without their consent,” said Senator Klobuchar. “We need our laws to be as sophisticated as this quickly advancing technology.”

The hearing discussed the potential harm to individuals who are the unwilling subjects of digital replicas, such as fake images showing explicit content, as well as the potential for misleading the public through deepfake videos of politicians or other public figures.

The Senators highlighted the harm done to a Baltimore high school principal, whose voice was digitally cloned using AI to produce a fake recording that included racist and derogatory comments about students and staff.

The bill also follows warnings from ethicists that AI simulations of dead people risk “unwanted digital hauntings” for surviving friends and relatives.

So-called deadbots are already being offered to customers of some chatbot companies, and in May academics at Cambridge University called for more safety protocols surrounding the technology.

“It is vital that digital afterlife services consider the rights and consent, not just of those they recreate, but those who will have to interact with the simulations,” Dr Tomasz Hollanek, from Cambridge’s Leverhulme Centre, said at the time.

“These services run the risk of causing huge distress to people... The potential psychological effect, particularly at an already difficult time, could be devastating.”

If passed into law, the NO FAKES Act would hold individuals or companies liable for producing non-consensual digital replicas, while platforms like Instagram and X would also be liable for hosting unauthorised deepfakes.
