The fake videos trying to trick you on Election Day

DIY deep fakes made using cheap and readily available artificial intelligence software could cause major disruptions to the midterm elections, experts tell Bevan Hurley

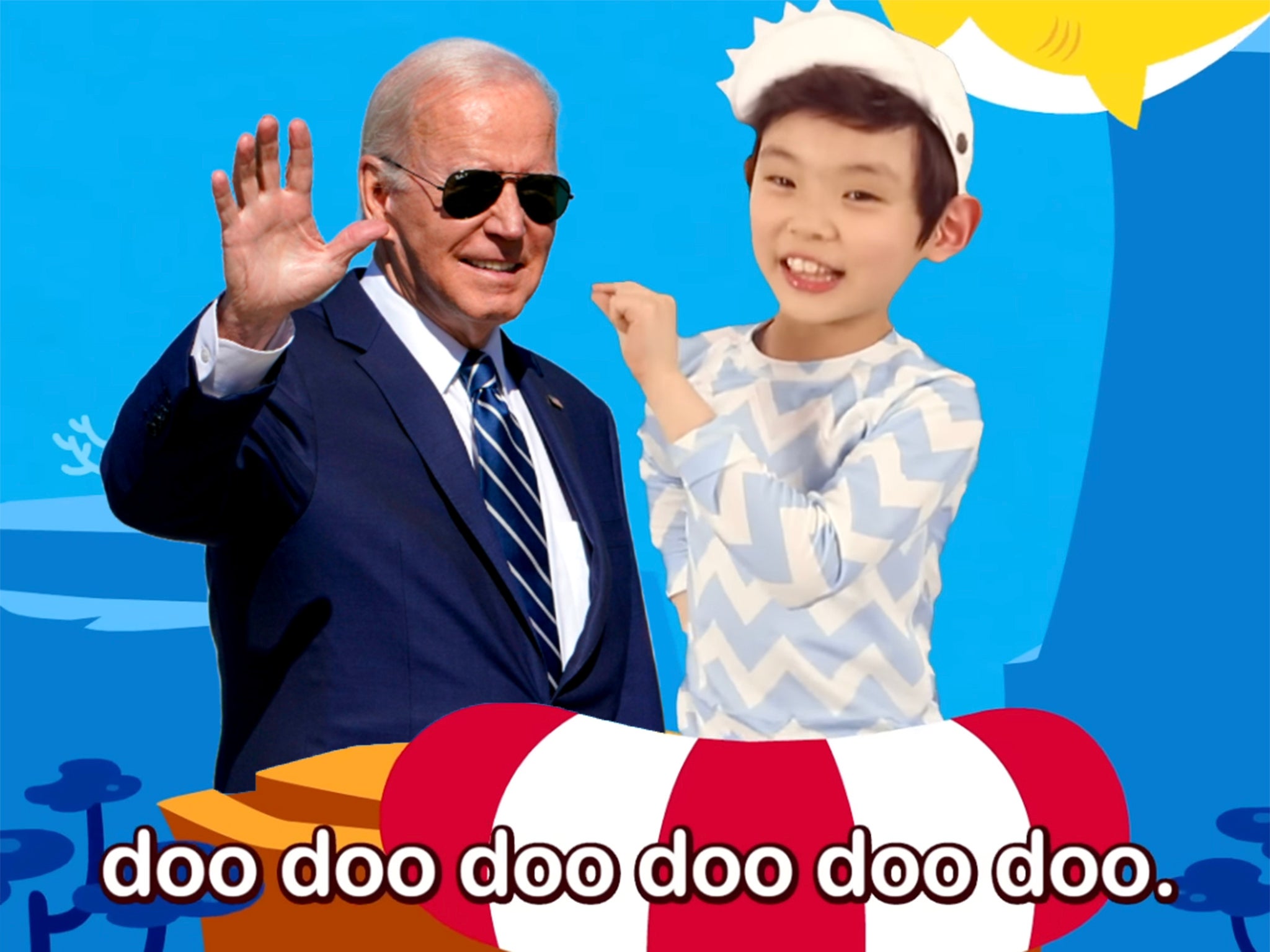

No, Joe Biden didn’t sing the “Baby Shark” song.

But a viral deepfake video showing the US president launching into the children’s tune convinced plenty of people that he had.

Likewise, a clip that appears to show a child shouting “shut the f*** up” at First Lady Jill Biden during a reception to celebrate Diwali depicts something that never happened.

The clip was shared on Twitter by a pro-Maga account, where it had received more than 600,000 views by Thursday.

A succession of deepfake videos targeting political figures has gone viral in the lead-up to the November midterms, stoking concerns they could play a role in determining the outcome of tight races.

Deepfakes are made using artificial intelligence programmes that manipulate authentic videos to misrepresent a person’s actions and words.

Once an expensive and complex process, creating the hyperrealistic content is now cheap and easily available to developers on sites such as GitHub.

At a time when trust in media is near an all-time low, according to Gallup Research, and the Big Lie that Donald Trump won in 2020 has created deep-seated distrust in elections, deepfakes are the latest weapon in an ongoing information war.

Experts tell The Independent that as the technology improves, deepfakes will become harder to spot and erode public trust even further.

But they believe the real danger lies in the clips’ ability to circulate rapidly online, and that social media companies are doing nothing to curb the threat.

“One deepfake video doesn’t mean anything. The video becomes dangerous when it goes viral,” Wael Abd-Almageed, director of the Visual Intelligence and Multimedia Analytics Laboratory at the University of Southern California (USC), told The Independent.

A report in September by US media monitor firm Newsguard found that nearly one in five videos automatically suggested by TikTok contained misinformation on topics from school shootings to the Russian invasion of Ukraine.

As deepfake videos become “extremely prevalent”, social platforms are shrugging their shoulders at the problem, Dr Abd-Almageed said.

He has developed tools with his students at USC that would allow social media companies to flag the deepfakes, or even stop fake content from being posted altogether.

He said he offered the tools to social media companies for free, and implored them to use them to stop the propagation of misinformation, and was told explicitly: “We don’t care.”

“There are so many things they can do but they are not interested in doing anything to stop misinformation. They just want to maximise user engagement, the more people stay on the platform, the more money they make from advertising.”

Dr Abd-Almageed finds it “shocking and frightening” how little the large social media platforms are interested in preventing the spread of deepfakes.

“No tool will be bulletproof, but if I could stop 50, 60 or 70 per cent of these deepfakes, it’s much better than nothing. What they’re doing now is nothing,” he said.

“We keep eroding the line between what’s true and what’s not and at some point everything becomes not true, and we will not believe in anything. It’s extremely important to keep trying to protect that line, but they’re not interested,” Dr Abd-Almageed told The Independent.

‘This literally keeps me up at night’

A report released by the Congressional Research Service in June warned that the proliferation of photo, audio and video forgeries generated by artificial intelligence could present “national security challenges in the years to come”.

“State adversaries or politically motivated individuals could release falsified videos of elected officials or other public figures making incendiary comments or behaving inappropriately,” researchers found.

“Doing so could, in turn, erode public trust, negatively affect public discourse, or even sway an election.”

Dr Abd-Almageed agrees a fake video could easily be used to manipulate the outcome of an election.

Imagine, for example, that a fake video claiming the president is extremely sick and dying is posted to social media three hours before polls close on election day.

The video goes viral, people start believing it, and stop going to the polls. In the time it would take for the White House to disprove the story, voters may have already missed the window to cast their ballot.

“This literally keeps me up at night,” Dr Abd-Almageed tells The Independent.

“We always grew up thinking that seeing is believing. Even after someone debunks it, it becomes very difficult to reverse that belief.”

He said the solution may be for lawmakers to compel social platforms to curb the flood of deepfakes.

“All these platforms are hiding behind saying we are just a news stand, we are not responsible for the content on our platforms. They have to accept the moral and ethical responsibility to fight misinformation.”

Separating real from fake

Days after Russia invaded Ukraine, a video of Volodymyr Zelensky calling for his soldiers to surrender spread rapidly online. Hackers managed to briefly get it broadcast on Ukrainian television, before it could be debunked by Ukrainian officials.

The doctored clip of Mr Biden singing “Baby Shark” was taken from a speech he gave at Irvine Valley Community College in California on 14 October.

In the clip, the president announces the national anthem, but instead of singing “The Star-Spangled Banner” he sings the opening lyrics to the annoyingly familiar “Baby Shark”.

The initial clip posted to TikTok received half a million views before it was taken down, and spread rapidly on other social networking sites.

Hundreds of commenters believed the clip was authentic.

“He’s lost his mind,” one person commented on TikTok.

“Ladies & Gentlemen, This is who’s leading this country! How?” another wrote.

Factcheckers at the Associated Press debunked the clip, but such efforts to dampen the flames of misinformation invariably reach a far smaller audience than the original.

The deepfake creator Ali Al-Rikabi, a civil servant based in the United Kingdom, told the Associated Press that he used voice cloning software to make Mr Biden sing the “Baby Shark” lyrics and lip syncing software to make his lips match the words.

In a separate deepfake, footage of Jill Biden clapping and singing ahead of a Philadelphia Eagles NFL game was digitally altered to swap the authentic audio with a crude anti-Biden chant.

Reuters reported that a single tweet of the fake clip received hundreds of thousands of views before it was taken down.

More examples have surfaced and circulated in recent weeks.

A recent viral clip on TikTok appeared to show White House Press Secretary Karine Jean-Pierre ignoring a question from a Fox News reporter.

An Associated Press fact check found it had been digitally altered.

In a recently resurfaced clip from 2021, Kamala Harris appears to say that nearly all of those who had been hospitalised with or died from Covid-19 had been vaccinated. In fact, she said unvaccinated.

A separate deep fake from 2019 appeared to show an intoxicated Nancy Pelosi slurring her speech. It was debunked, but only after it had been viewed 2.5 million times on Facebook, and it has continued to spread online.

An analysis by the AI firm Deeptrace in 2020 found there were nearly 15,000 deep fakes in existence, more than double the number nine months earlier. Of those, 96 per cent were pornographic, with the vast majority superimposing the faces of celebrities on to graphic sexual content.

Exact figures are hard to pin down, but experts say the number of deep fakes has exploded in the years since then.

A popular sub-genre of deep fakes features mashups of well-known actors in unfamiliar roles, such as Jim Carrey in The Shining, or Jerry Seinfeld in Pulp Fiction.

After actor Paul Walker was killed during the filming of Fast and Furious 7, in 2013, Weta Digital used deepfake technology to map his face onto the bodies of his brothers Cody and Caleb to complete the film at huge expense.

Artificial intelligence is also being used to give new life to James Earl Jones’ portrayal of Darth Vader after the 91-year-old actor gave his permission to clone his voice and record new lines for future Disney films.

That same technology is readily available on sites like GitHub for anyone to use.

Siwei Lyu, director of the University of Buffalo’s Media Forensic Lab, has studied user behaviour around deepfakes and told The Independent that more needs to be done to educate social media users.

“Social media world is very biased, very polarised. People are living in their echo chambers. Everybody has confirmation bias, and we like to see evidence supporting our beliefs,” Professor Lyu said.

“If everybody has a higher awareness of this type of media manipulation. If they know this is false, then that will prevent them from having such an impact.”

He shares concerns about deepfakes spreading around an election day, and believes the dangers of tipping the balance in a tight election are very real.

Professor Lyu told The Independent algorithms exist that can expose most fake clips, but the more savvy deepfake creators can get around these.

He said the onus was on social media users to check the source and authenticate clips before sharing them with friends.

A spokesman for Meta, the parent company of Facebook and Instagram, provided The Independent with its policies on manipulated media.

Meta says it cannot define what constitutes misinformation as “the world is changing constantly, and what is true one minute may not be true the next minute”.

It adds that it removes posts that incite violence or are “likely to directly contribute to interference with the functioning of political processes”.

TikTok and Twitter did not respond to a request for comment.

In comments posted to Twitter on Thursday, Elon Musk said he did not want to see the platform become a “free-for-all hellscape”, after previously saying he would allow any content that did not break local laws.
