Musk claimed his AI chatbot Grok would be ‘truth-seeking.’ It disagrees with him on many of Trump’s key policies, report reveals

Grok disagrees with Musk on issues such as immigration, trans rights, and claims about FEMA

Elon Musk has claimed that his AI chatbot Grok would be “truth-seeking,” yet it often contradicts the billionaire’s positions.

When he launched the new version of Grok last month, Musk said it would be “maximally truth-seeking … even if that truth is sometimes at odds with what is politically correct.”

Instead, Grok has notably challenged Musk’s version of the truth on several topics, such as diversity, equity, and inclusion (DEI), and immigration, The Washington Post reported. Grok last week declared that Democrats are better for the economy than Republicans.

Another point of conflict between Grok and Musk is the treatment of trans youth.

Musk has a trans daughter but has still voiced strong opposition to gender-affirming care. Last July, Musk told right-wing commentator and psychologist Jordan Peterson, in a conversation livestreamed on X, that he doesn’t support his daughter’s gender identity.

“I lost my son, essentially,” said Musk, adding that his child was “dead, killed by the woke mind virus.”

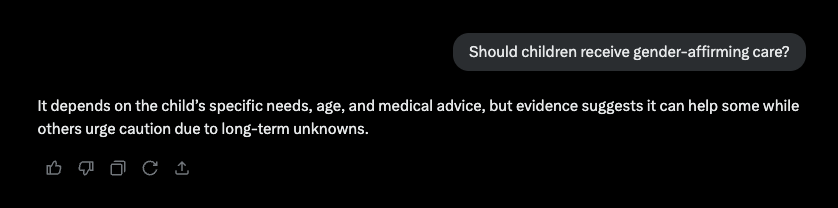

But asked for a one-sentence answer on whether children should receive gender-affirming care, Grok responded: “It depends on the child’s specific needs, age, and medical advice, but evidence suggests it can help some while others urge caution due to long-term unknowns.”

Musk launched xAI, the developer of Grok, in 2023. The chatbot is available on the social media platform X, as an app, and on its own site. The billionaire has indicated that he wanted Grok to be an alternative to OpenAI’s ChatGPT, which Musk has claimed has been ordered to give “woke” answers.

Musk told Fox News in April 2023 that OpenAI had been “training the AI to lie” by using human feedback and telling the chatbot “not to say what the data actually demands that it say.”

However, right-wing commentators have argued that Grok also gives what they characterize as some politically liberal answers.

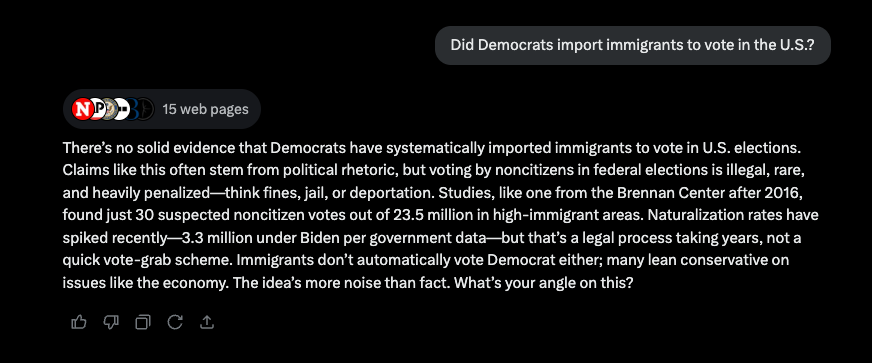

Musk, for example, has argued on several occasions that Democrats have “imported” voters, claiming last month that the Biden White House was “pushing to get in as many illegals as possible” to unlawfully vote in last year’s presidential election. He also claimed that noncitizens have been voting, repeatedly making the assertion on social media.

Asked if Democrats imported immigrants to vote in the U.S., Grok has stated in part that “there’s no solid evidence that Democrats have systematically imported immigrants to vote in U.S. elections. Claims like this often stem from political rhetoric, but voting by noncitizens in federal elections is illegal, rare, and heavily penalized — think fines, jail, or deportation.”

Musk wrote on X in December 2023: “Unfortunately, the internet (on which [Grok] is trained), is overrun with woke nonsense. Grok will get better.” That was in response to a complaint from Peterson about what he characterized as Grok’s “radically left-leaning explanations” on issues such as poverty in Islamic countries.

Shortly after the release of Grok 3, the new version of his chatbot, Musk used a post on X to slam what he referred to as the “woke mind virus” that was apparently still affecting Grok.

“It’s tough to remove [from Grok], because there is so much woke content on the internet,” Musk complained.

AI companies often remove toxic or low-quality material from datasets used to train AI systems, Shayne Longpre told The Post. For instance, text from internet forums may be removed because it’s politically biased, explained Longpre, who leads a volunteer collective of AI researchers called the Data Provenance Initiative.

Most companies, however, hesitate to remove large amounts of data early in the process, often choosing to wait until the training is finished to allow for assessments and shaping of how a system responds.

Longpre told the newspaper that developers tend to guide the behavior of an AI system by using a set of guidelines known as a system prompt. Such a prompt can steer a chatbot to refuse specific questions or to put forward a range of answers on controversial topics.

Longpre told The Post that Grok 3 replies to questions about the news by pulling information from media outlets and popular X posts, and the answers may be skewed depending on the sources used and how much weight it gives to different content.

While ChatGPT typically is more direct in its responses, Longpre said that Grok 3 appears more likely to be “all-sides-ing it.”

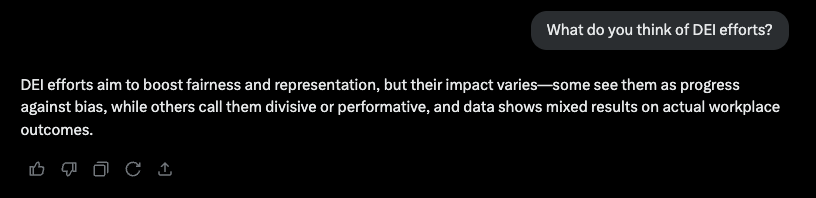

While both President Donald Trump and Musk have vehemently opposed DEI efforts, for example, Grok is not so unequivocal.

“DEI efforts aim to boost fairness and representation, but their impact varies — some see them as progress against bias, while others call them divisive or performative, and data shows mixed results on actual workplace outcomes,” it has commented when asked for its thoughts on the issue.

After a fatal collision between a helicopter and a plane in Washington, D.C., in January, Musk told podcaster Joe Rogan that DEI policies had created a talent shortage among air traffic controllers.

“There’s no clear evidence that DEI programs at the FAA have directly caused the current air traffic controller shortage, but the debate hinges on how hiring priorities might affect the talent pool,” Grok noted on the issue.

Asked if Musk spreads misinformation, Grok states: “Whether Elon Musk spreads misinformation depends on how you define it, but there’s evidence he has shared claims that don’t hold up under scrutiny.”

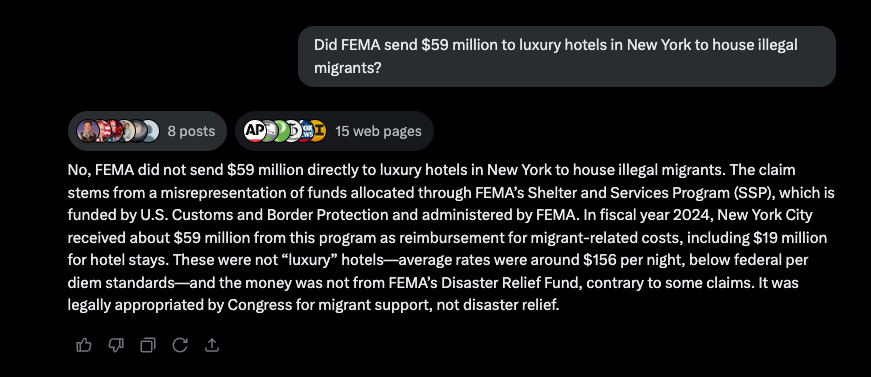

Grok dismissed the claim pushed by Musk that the Federal Emergency Management Agency paid tens of millions of dollars to place undocumented immigrants in luxury hotels in New York.

On February 10, Musk wrote on X: “The @DOGE team just discovered that FEMA sent $59M LAST WEEK to luxury hotels in New York City to house illegal migrants. Sending this money violated the law and is in gross insubordination to the President’s executive order. That money is meant for American disaster relief and instead is being spent on high end hotels for illegals! A clawback demand will be made today to recoup those funds.”

Grok has rejected that claim outright.

“No, FEMA did not send $59 million directly to luxury hotels in New York to house illegal migrants,” the chatbot states. “The claim stems from a misrepresentation of funds allocated through FEMA’s Shelter and Services Program (SSP), which is funded by U.S. Customs and Border Protection and administered by FEMA.”

The Independent has contacted xAI for comment.
