Democrats and Republicans have a common enemy: pornographic ‘deepfakes’

Ted Cruz and AOC are unlikely allies forcing Big Tech to take down nonconsensual images, but Elon Musk’s antics derailed their efforts, Alex Woodward reports

Last fall, Elliston Berry woke up to a barrage of text messages.

A classmate ripped photographs from her Instagram, manipulated them into fake nude photographs, and shared them with other teens from her school. They were online for nine months. She was 14 years old.

“I locked myself in my room, my academics suffered, and I was scared,” she said during a recent briefing.

She is not alone. Non-consensual intimate images (NCII) are often distributed as pornographic “deepfakes,” made with artificial intelligence tools that manipulate images of existing adult performers to look like the victims. Targets include celebrities, lawmakers, middle and high school students, and millions of others.

There is no federal law that makes it a crime to generate or distribute such images.

Elliston, along with dozens of other victims, survivors and their families, is now urging Congress to pass a bill that would make publishing NCII a federal crime, whether the images are real or created with artificial intelligence, with violators facing up to two years in prison.

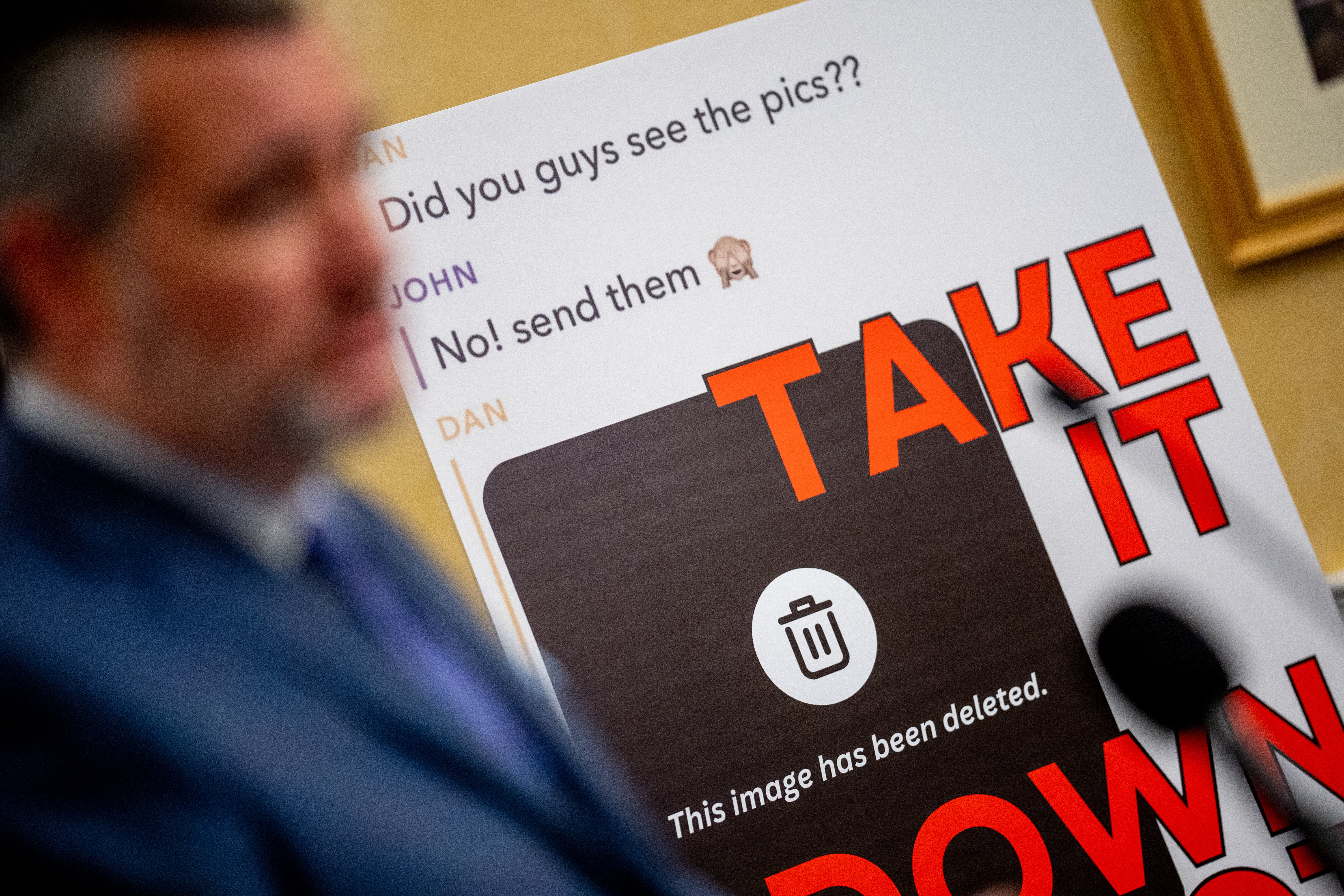

The “Tools to Address Known Exploitation by Immobilizing Technological Deepfakes on Websites and Networks Act” — or TAKE IT DOWN Act — unanimously passed the Senate on December 3.

The legislation was attached to a broader bipartisan government funding bill, with support from Republican and Democratic members of Congress alike, including a final push from Ted Cruz and Amy Klobuchar, two senators who are rarely, if ever, on the same side of an issue.

Elon Musk’s X platform was even involved in lobbying efforts to support the legislation, along with other measures on child safety.

But his pressure campaign against that government funding bill prompted Republicans to strike the TAKE IT DOWN Act language from the measure altogether. Efforts to revive the spending bill did not include it.

Another measure from Rep. Alexandria Ocasio-Cortez would allow victims of “digital forgery” to file civil lawsuits against those responsible. The congresswoman has spoken out about her own experience as a victim.

The “Disrupt Explicit Forged Images and Non-Consensual Edits” Act, or DEFIANCE Act, marked the first-ever attempt to ensure federal protections for targets of nonconsensual deepfakes.

The legislation passed the Senate this summer.

“Victims of nonconsensual pornographic deepfakes have waited too long for federal legislation to hold perpetrators accountable,” the congresswoman said in a statement at the time.

“As deepfakes become easier to access and create ... Congress needs to act to show victims that they won’t be left behind,” she said.

Many states have enacted laws intended to protect people against NCII, including 20 states with laws that explicitly cover deepfakes. But those laws vary in application, resulting in “uneven criminal prosecution,” according to lawmakers, and victims often struggle to have the images removed from websites, “increasing the likelihood the images are continuously spread and victims are retraumatized.”

The TAKE IT DOWN Act would uniformly criminalize the publishing of NCII, which the legislation defines to also include “realistic, computer-generated pornographic images and videos that depict identifiable, real people.”

It would also require websites to take down NCII upon notice from the victim.

Social media platforms and other websites would be required to have procedures in place to remove NCII within 48 hours of a request.

Last summer, Molly Kelley discovered that images from her private social media account had been taken and transformed into deepfake pornography. The images, which were created while she was six months pregnant, damaged her physical and emotional health and her ability to work, she said.

“The stress of the discovery of seeing a hyper-realistic twisted version of myself that I had no part in creating was overwhelming,” she said. “When the initial shock wore off, a persistent reality set in: these images will likely exist forever … It is an intolerable weight.”

Kelley was among 85 women targeted with deepfake images created by a man who, they say, has yet to face any consequences. When she reported the images to law enforcement, the reaction “was inconsistent and, at times, dismissive,” she said.

The dissemination of private explicit images and videos without a person’s consent — sometimes as intentionally malicious attacks known as “revenge porn” — has also been used to extort countless victims.

A South Carolina lawmaker whose 17-year-old son died by suicide in 2022 after he was the target of a “sextortion” plot made it his mission to turn a state law he sponsored into a model for the federal legislation backed by Cruz and Klobuchar.

“Both adults and kids deserve the right to have non-consensual intimate images, real or fake, removed from a site,” South Carolina state Rep. Brandon Guffey wrote in The Hill this month. “Because Big Tech won’t do it willingly, legislation is needed to force these companies to act.”

Samantha McCoy survived sexual assault while a junior in college in Indiana. Her attacker posted images of her assault online.

Had the legislation been in place, “I would not have experienced the constant and repeated pain as the video of the attack would have been promptly removed, significantly reducing the chances of wider circulation on these platforms,” she said.

Celebrities like Taylor Swift are among the most well-known targets, but the majority of NCII posted online depict women or teenage girls, according to the Cyber Civil Rights Initiative.

Roughly one in eight social media users have been targeted, and one in 20 adult social media users are perpetrators, according to the group.

More than two dozen members of Congress — mostly women — have also been the victims of sexually explicit deepfakes, according to research from the American Sunlight Project (ASP).

The think tank’s December report found more than 35,000 mentions of nonconsensual images depicting 26 members of Congress, 25 women and one man, on deepfake websites. Most of the images were removed after researchers shared their findings with the affected lawmakers.

Nearly 16 percent of all women currently serving in Congress, or roughly one in six congresswomen, have been targeted by pornographic deepfakes.

A recently released report from the House Bipartisan Task Force on Artificial Intelligence aims to give Congress a “roadmap” to both “safeguard consumers and foster continued U.S. investment and innovation in AI,” according to the group’s Republican co-chair, Rep. Jay Obernolte.

The much-anticipated report included dozens of policy recommendations, including finding ways to “appropriately counter” the “growing harm” from the “proliferation of deepfakes and harmful digital replicas.”