2020 election polls: Should I trust the results? Here’s what pollsters are saying

Trump caused a massive upset in 2016. Could he do it again?

It’s probably a fair assumption that most committed news consumers during the 2016 election thought Hillary Clinton was going to win. A lot of people did. The polls said so.

On the night before election day, the New York Times’ projection, based on its round-up of national and state polls, gave her an 85 per cent chance of winning. FiveThirtyEight, one of the most closely watched election forecasters in the world, gave her a 71 per cent chance.

It didn’t turn out that way. Election night came as a shock to many, and the hubris of the media elite became the story of the election.

In the subsequent years, pollsters have had a rough time explaining why the polls were so wrong in 2016, and why they should be trusted ever again. Their answer to that charge has been relatively uniform: they were not wrong.

“I think what happened in 2016 wasn't really a failure of methodology or technique, it was a failure of analysis and reporting,” said Chris Jackson, head of public polling at Ipsos.

“We, like many other people, sort of got hung up on the idea that Clinton winning by three percentage points in the national popular vote meant something, and took our eye off of the importance of the electoral college and how the electoral college could, and in fact did, deviate from those results.”

The numbers support that argument. The day before the election, the RealClearPolitics average of national polls had Clinton 3.2 points ahead. She won the popular vote by 2.1 points, a miss of about one point and within the margin of error. The lesson from 2016, then, is not that the polls were wrong, but that important nuances about how they should be read were lost in the way they were presented to the public.
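The arithmetic behind “within the margin of error” can be sketched in a few lines of Python. This is a minimal illustration, assuming a single simple random sample of 1,000 respondents (a hypothetical size, not taken from any 2016 poll) and the usual 95 per cent confidence level. It ignores non-sampling error such as non-response, and a polling average pools many samples, so treat it as a rough yardstick rather than the formal test any pollster applies.

```python
import math

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """95% margin of error, in percentage points, for one candidate's share,
    assuming a simple random sample of n respondents (p = 0.5 is the worst case)."""
    return 100 * z * math.sqrt(p * (1 - p) / n)

# Hypothetical poll size; real polls vary and also carry non-sampling error.
n = 1000
moe_share = margin_of_error(n)

# Figures from the article: final national polling average vs actual popular-vote margin.
polled_lead = 3.2
actual_lead = 2.1
miss = polled_lead - actual_lead

print(f"Margin of error on one candidate's share: +/-{moe_share:.1f} points")
print(f"Miss on Clinton's lead: {miss:.1f} points")
```

On these assumptions a single poll’s margin of error on one candidate’s share is about 3 points, and the margin on the gap between two candidates is wider still, so a 1.1-point miss on the lead sits comfortably inside it.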

The New York Times, for example, debuted its much-derided “needle” in the 2016 election season, a swing-o-meter-type graphic that became one of its best-read election pages. Jeremy Bowers, the NYT’s senior editor for news applications, said the needle was a means of “visualizing uncertainty”, but to most, a needle pointing to an 80 per cent chance of victory for Clinton looked pretty certain.

The same criticism was made of FiveThirtyEight, whose model was slightly more cautious but which nonetheless failed to convey to the public the uncertainty of its projection.

“I think because they're so smart, they present stuff in these probability formats, and I agree that I think that provides more confidence than perhaps people should have on what the results are gonna be,” Mr Jackson said.

“Today, they're at what, 77, 22 [against] Trump, that's essentially one time out of four, Trump wins in their models. One time out of four is not bad. You know, like a lot of people would go to Las Vegas and bet on that in a heartbeat, and they think that's such a big difference from ‘Biden's gonna win’.”

So there are reasons for the public to treat polls with more caution, perhaps. But there have also been some lessons learned in the last four years. Pollsters and statisticians say they now understand why the polls gave an incomplete picture in 2016, and have made adjustments.

There were a number of oddities and unknowns in 2016 which made accuracy more difficult.

G Elliott Morris, a data journalist who runs The Economist’s election forecast, said the question of whether the polls will be right this time has become something of an obsession for journalists of late.

“The biggest questions that reporters are asking pollsters right now are what went wrong last time and what have you changed?” he said.

“They start with the premise that things went disastrously wrong, and they want to know what pollsters did to fix this disastrously miscalibrated industry. When in reality, polls are getting better.”

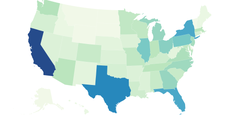

Nonetheless, Mr Morris said there were a few factors that hampered projections in 2016. The first was that an unusually high number of voters were undecided going into the final weeks of the campaign, and most of them swung towards Trump. The second was that many pollsters failed to weight their surveys by education. College-educated voters, who leaned towards Clinton, were therefore overrepresented in polls, giving her an inflated lead. This was especially true in the Midwest, where Trump racked up some of his most surprising victories.

The first of these two weaknesses has largely been addressed on its own: the number of undecided voters is far lower than in 2016, meaning less uncertainty. The second, weighting by education, is now factored into most pollsters’ models, meaning that, in theory, the results should be more accurate.
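To make the education point concrete, here is a minimal Python sketch of weighting a poll on a single variable. All figures are hypothetical: a sample that is 60 per cent college graduates when the assumed population is 35 per cent. Real pollsters weight on several variables at once, often by an iterative method known as raking, so this is a simplified illustration rather than any firm’s actual procedure.

```python
from collections import Counter

def weighted_share(sample, population_shares):
    """Return the share of respondents backing the candidate after weighting
    each education group to its (assumed) share of the population."""
    n = len(sample)
    counts = Counter(group for group, _ in sample)
    # Each respondent's weight = population share / sample share of their group.
    weights = {g: population_shares[g] / (counts[g] / n) for g in counts}
    total = sum(weights[g] for g, _ in sample)
    support = sum(weights[g] for g, backs in sample if backs)
    return support / total

# Hypothetical poll of 1,000 people that over-samples college graduates:
# 600 graduates (330 backing the candidate), 400 non-graduates (160 backing).
sample = (
    [("degree", True)] * 330 + [("degree", False)] * 270
    + [("no_degree", True)] * 160 + [("no_degree", False)] * 240
)

# Assumed population mix; illustrative only, not census figures.
population_shares = {"degree": 0.35, "no_degree": 0.65}

unweighted = sum(backs for _, backs in sample) / len(sample)
weighted = weighted_share(sample, population_shares)

print(f"Unweighted support: {unweighted:.1%}")
print(f"Weighted by education: {weighted:.1%}")
```

In this made-up example graduates back the candidate more strongly than non-graduates, so correcting their over-representation pulls the topline down from 49 per cent to roughly 45 per cent.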

The third issue in 2016, which has not been, and cannot be, perfectly addressed by any predictive model, is who will turn out to vote.

“It’s sort of a classic error in polling, and it's an intractable error,” said Mr Morris.

“Pre-election polls are attempting to forecast essentially the demographics of who's going to turn out on election day. And they do that typically by asking people, are you going to vote or not? This is a source of error because people aren't entirely truthful about whether or not they're going to vote,” he added.

There was another factor at play, according to Mr Jackson: a lack of reliable state polling. So while the national picture was largely accurate, small shifts in the states that helped tip the electoral college in Trump’s favour were missed.

But with those adjustments and improvements taken into consideration, should we trust the polls again? According to Mr Morris, that isn’t really the issue — we should rather adjust the way we think about polls altogether.

“They really are a great tool for measuring the sentiment of the public. It just so happens that in close elections, like 2016, when small errors can make the entire forecast change, they can't perfectly predict an outcome,” he said.

In an impassioned defence of his model written days ahead of Trump’s inauguration in January 2017, Nate Silver, FiveThirtyEight’s founder, pointed the finger back at “traditional reporters and editors” who, he argued, had “built a revisionist history about how they covered Trump and why he won.”

The real shortfall in 2016 was not the polls, he argued, but a “pervasive groupthink among media elites, an unhealthy obsession with the insider’s view of politics, a lack of analytical rigor, a failure to appreciate uncertainty, a sluggishness to self-correct when new evidence contradicts pre-existing beliefs, and a narrow viewpoint that lacks perspective from the longer arc of American history.”

Mr Jackson has a similar message to anyone still angry at pollsters.

“Be a little bit more careful and just understand like polls are not a crystal ball,” he said.

“People should always keep in mind that polls are by definition looking backwards. These are people that we interviewed yesterday or two days ago or a week ago. And people are actually usually kind of bad about predicting their own behaviour. So trying to take that and project it forward into the future is always risky.”
