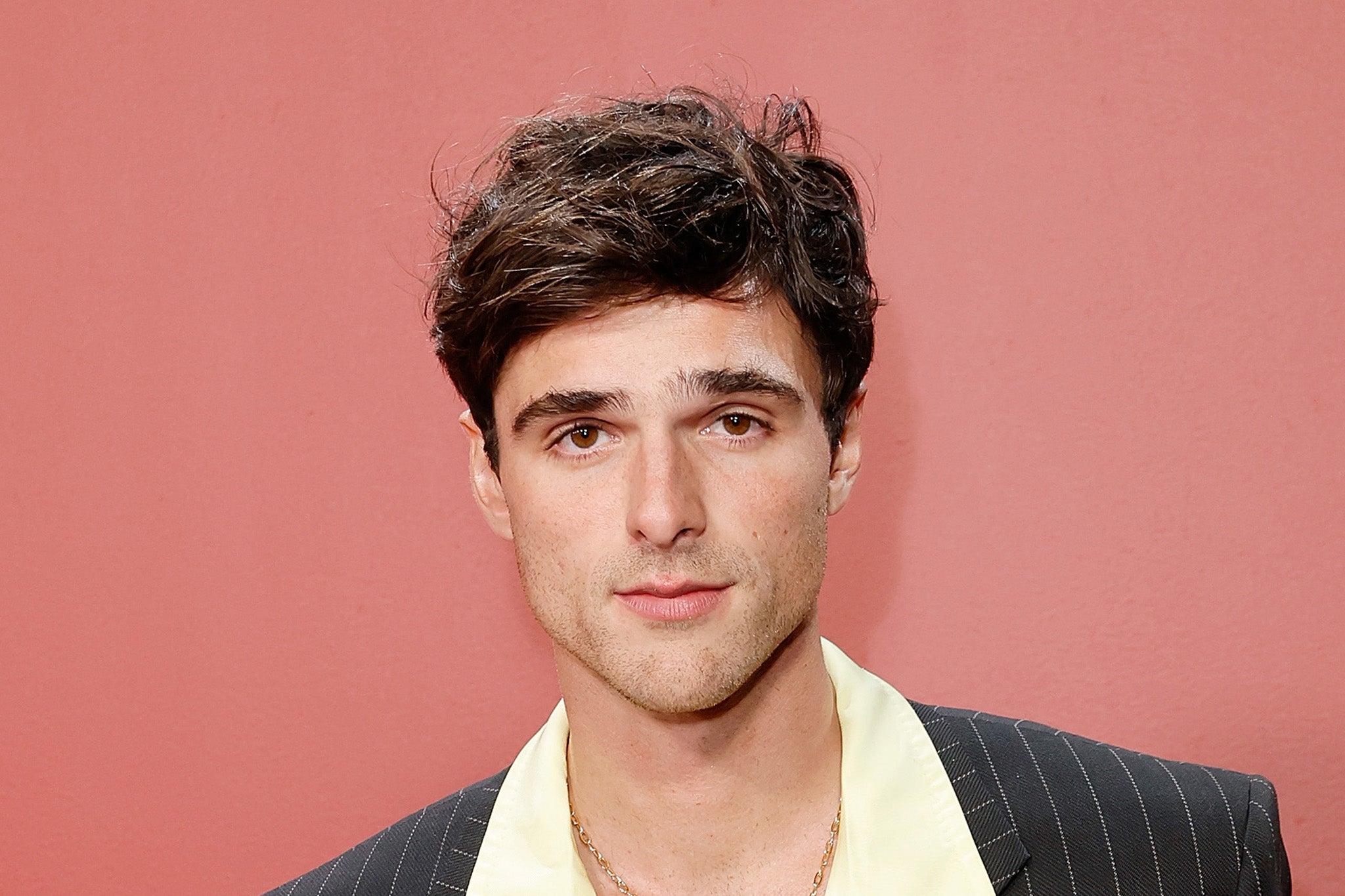

Jacob Elordi targeted in explicit AI deepfake scam using a minor’s body

Social media posts containing the deepfake video of Elordi were viewed more than three million times

Euphoria and Saltburn actor Jacob Elordi has become the latest celebrity to be targeted in a sexually explicit AI deepfake scam.

Posts on X, formerly Twitter, containing the non-consensual deepfake video of Elordi have been viewed more than three million times since late Monday, according to NBC News.

NBC reported that there were at least 16 posts on X that contained the video. At least one of them had a content label that said, “Visibility limited: this Post may violate X’s rules against Abuse,” according to the publication, meaning it could not be linked to or shared.

However, that post still had 23,000 views, NBC reported, while another had more than 1.7 million views.

Some posts presented the material as a “leak,” suggesting it was real content published without Elordi’s consent, while others correctly identified it as a deepfake.

The deepfake allegedly superimposes Elordi’s face onto a pornographic video from a male OnlyFans creator, and the body in the deepfake lacks Elordi’s distinctive chest birthmark.

The male OnlyFans creator said he was 17 when the video was taken, meaning he was a minor. In posts, he publicly condemned the misuse of his video, writing: “that’s literally my video” and “deep fake is getting creepy.” He also replied to several of the posts containing the deepfake and asked for them to be deleted.

He is currently 19 and lives in Brazil, according to NBC.

In recent years, nonconsensual sexually explicit deepfakes have become increasingly common online, with a number of high-profile women including Taylor Swift and Megan Thee Stallion targeted. Numerous TikTok stars have been depicted in similar content on the platform.

Deepfakes are created or altered using artificial intelligence or similar technology, and manipulated to replace one person’s likeness convincingly with that of another.

Nonconsensual sexually explicit videos that “swap” a person’s face into a pornographic video are one of the most prevalent forms of deepfakes. Political deepfakes containing election disinformation are also prominent, with some raising concerns that they could be used to interfere with the 2024 elections.

Since April 2023, X has implemented a policy against sharing “synthetic, manipulated, or out-of-context media that may deceive or confuse people and lead to harm.” It also prohibits content that “sexualizes an individual without their consent.”

But despite the policy, deepfakes have persisted on X, with the company struggling to stamp them out.

Recent federal and state legislation in the US aimed at combating deepfakes has also had little success, with such material ballooning online.

Adult content creators have voiced concerns about the increasing number of pornographic scams on the platform.

X has previously said it proactively removes deepfakes.

The Independent has contacted X and representatives of Elordi for comment.