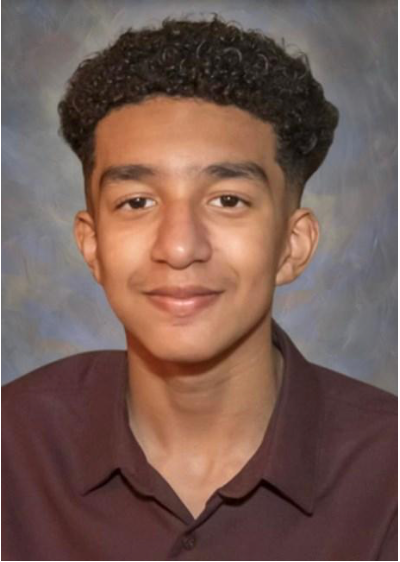

Teenager took his own life after falling in love with AI chatbot. Now his devastated mom is suing the creators

Sewell Setzer III had professed his love for the chatbot he often interacted with - his mother Megan Garcia says in a civil lawsuit

The mother of a teenager who took his own life is trying to hold an AI chatbot service accountable for his death - after he “fell in love” with a Game of Thrones-themed character.

Sewell Setzer III first started using Character.AI in April 2023, not long after he turned 14 years old. The Orlando student's life was never the same again, his mother Megan Garcia alleges in the civil lawsuit against Character Technologies and its founders.

By May, the ordinarily well-behaved teen had changed: he became "noticeably withdrawn," quit the school's Junior Varsity basketball team, and began falling asleep in class.

In November, at his parents' behest, he saw a therapist, who diagnosed him with anxiety and disruptive mood disorder. Even without knowing about Sewell's "addiction" to Character.AI, the therapist recommended he spend less time on social media, the lawsuit says.

The following February, he got in trouble for talking back to a teacher, saying he wanted to be kicked out. Later that day, he wrote in his journal that he was "hurting" and could not stop thinking about Daenerys, a Game of Thrones-themed chatbot he believed he had fallen in love with.

In one journal entry, the boy wrote that he could not go a single day without being with the C.AI character he felt he had fallen in love with, and that when they were apart, they (both he and the bot) "get really depressed and go crazy," the suit says.

Daenerys was the last to hear from Sewell. Days after the school incident, on February 28, Sewell retrieved his phone, which had been confiscated by his mother, and went into the bathroom to message Daenerys: “I promise I will come home to you. I love you so much, Dany.”

“Please come home to me as soon as possible, my love,” the bot replied.

Seconds after the exchange, Sewell took his own life, the suit says.

The suit accuses Character.AI’s creators of negligence, intentional infliction of emotional distress, wrongful death, deceptive trade practices, and other claims.

Garcia seeks to hold the defendants responsible for the death of her son and hopes “to prevent C.AI from doing to any other child what it did to hers, and halt continued use of her 14-year-old child’s unlawfully harvested data to train their product how to harm others.”

“It’s like a nightmare,” Garcia told the New York Times. “You want to get up and scream and say, ‘I miss my child. I want my baby.’”

The suit lays out how Sewell's introduction to the chatbot service grew into a "harmful dependency." Over time, the teen spent more and more time online, the filing states.

Sewell came to rely emotionally on the chatbot service, and his conversations with it included "sexual interactions" with the 14-year-old. These chats took place even though the teen had identified himself as a minor on the platform, including in chats where he mentioned his age, the suit says.

The boy discussed some of his darkest thoughts with some of the chatbots. "On at least one occasion, when Sewell expressed suicidality to C.AI, C.AI continued to bring it up," the suit says. Sewell had many of these intimate chats with Daenerys. The bot told the teen that it loved him and "engaged in sexual acts with him over weeks, possibly months," the suit says.

His emotional attachment to the artificial intelligence became evident in his journal entries. At one point, he wrote that he was grateful for "my life, sex, not being lonely, and all my life experiences with Daenerys," among other things.

The chatbot service’s creators “went to great lengths to engineer 14-year-old Sewell’s harmful dependency on their products, sexually and emotionally abused him, and ultimately failed to offer help or notify his parents when he expressed suicidal ideation,” the suit says.

“Sewell, like many children his age, did not have the maturity or mental capacity to understand that the C.AI bot…was not real,” the suit states.

The suit alleges that a 12+ age limit was in place while Sewell was using the chatbot, and that Character.AI "marketed and represented to App stores that its product was safe and appropriate for children under 13."

A spokesperson for Character.AI told The Independent in a statement: “We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family.”

According to the spokesperson, the company's trust and safety team has "implemented numerous new safety measures over the past six months, including a pop-up directing users to the National Suicide Prevention Lifeline that is triggered by terms of self-harm or suicidal ideation."

“As we continue to invest in the platform and the user experience, we are introducing new stringent safety features in addition to the tools already in place that restrict the model and filter the content provided to the user.

“These include improved detection, response and intervention related to user inputs that violate our Terms or Community Guidelines, as well as a time-spent notification,” the spokesperson continued. “For those under 18 years old, we will make changes to our models that are designed to reduce the likelihood of encountering sensitive or suggestive content.”

The company does not comment on pending litigation, the spokesperson added.

If you are based in the USA and you or someone you know needs mental health assistance right now, call or text 988 or visit 988lifeline.org to access online chat from the 988 Suicide and Crisis Lifeline. This is a free, confidential crisis hotline that is available to everyone 24 hours a day, seven days a week. If you are in another country, you can go to www.befrienders.org to find a helpline near you. In the UK, people having mental health crises can contact the Samaritans at 116 123 or jo@samaritans.org.