A father is warning others about a new AI ‘family emergency scam’

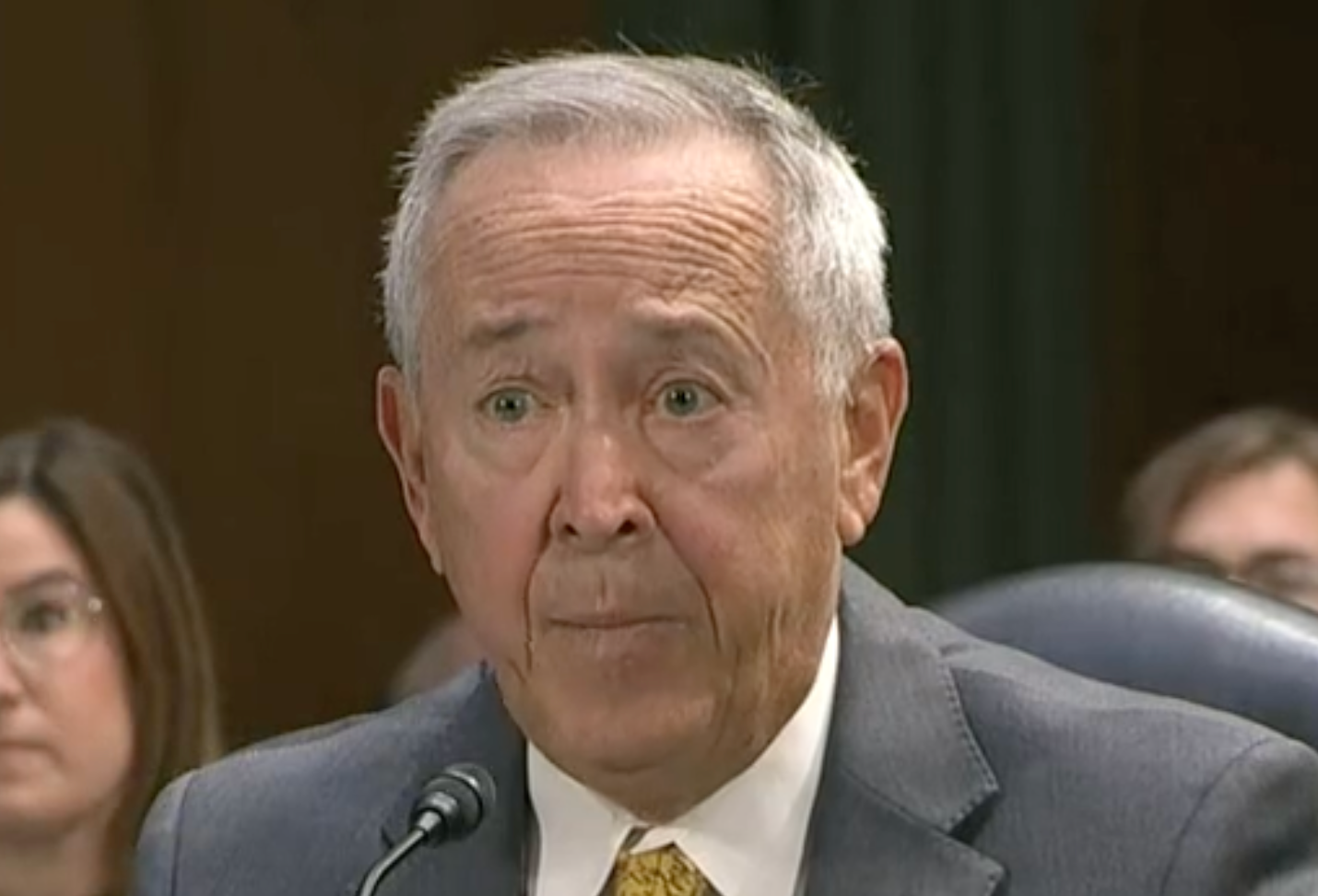

Philadelphia attorney Gary Schildhorn received a call from someone he believed was his son, who said he needed money to post bail after a car crash. Mr Schildhorn later found out he had nearly fallen victim to scammers using AI to clone his son’s voice, reports Andrea Blanco.

When Gary Schildhorn picked up the phone while on his way to work back in 2020, he heard the panicked voice of his son Brett on the other end of the line.

Or so he thought.

A distressed Brett told Mr Schildhorn that he had wrecked his car and needed $9,000 to post bail. Brett said that his nose was broken and that he had hit a pregnant woman’s car, and he instructed his father to call the public defender assigned to his case.

Mr Schildhorn did as he was told, but a short call with the supposed public defender, who instructed him to send the money through a Bitcoin kiosk, left the worried father uneasy. After a follow-up call with Brett, Mr Schildhorn realised that he had almost fallen victim to what the Federal Trade Commission has dubbed the “family emergency scam.”

“A FaceTime call from my son, he’s pointing to his nose and says, ‘My nose is fine, I’m fine, you’re being scammed,’” Mr Schildhorn, a practising corporate attorney in Philadelphia, told Congress during a hearing. “I sat there in my car, I was physically affected by that. It was shock, and anger and relief.”

The elaborate scheme involves scammers using artificial intelligence to clone a person’s voice, which is then used to trick loved ones into sending money to cover a supposed emergency. When he contacted his local police department, Mr Schildhorn was redirected to the FBI, which told him it was aware of this type of scam but could not get involved unless the money had been sent overseas.

The FTC first sounded the alarm in March with a simple message: “Don’t trust the voice.” The agency has warned consumers that the days of clumsy, easily identifiable scams are long gone, and that sophisticated technologies have brought a new set of challenges that officials are still trying to navigate.

All that is needed to replicate a human voice is a short audio clip of that person speaking, which in some cases is readily available in content posted on social media. An AI voice-cloning program then uses that clip to mimic the person’s voice.

Some programs need only a three-second audio clip to make the cloned voice say whatever the scammer wants, in a specific emotion or speaking style, according to PC Magazine. There is also a risk that stunned family members who answer the phone and ask questions to check the caller’s story are themselves being recorded, inadvertently giving scammers more material to work with.

“Scammers ask you to pay or send money in ways that make it hard to get your money back,” the FTC advisory states. “If the caller says to wire money, send cryptocurrency, or buy gift cards and give them the card numbers and PINs, those could be signs of a scam.”

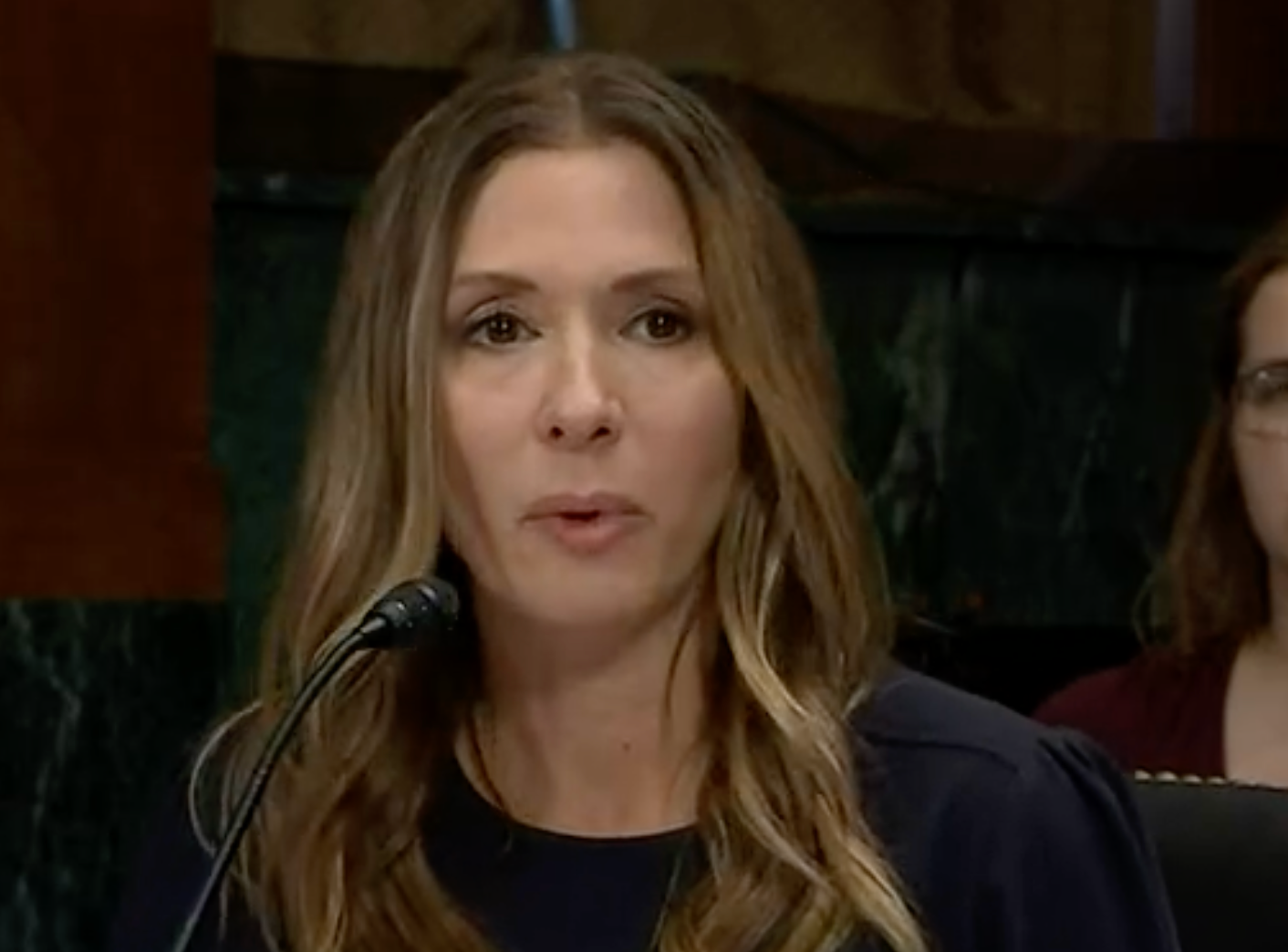

The rise of AI voice-cloning scams has forced lawmakers to explore avenues for regulating the new technology. During a Senate hearing in June, Arizona mother Jennifer DeStefano shared her own experience with a voice-cloning scam and described how it left her shaken.

At the hearing, Ms DeStefano recounted hearing what she believed was her 15-year-old daughter crying and sobbing on the phone before a man told her he would “pump [the teen’s] stomach full of drugs and send her to Mexico” if the mother called police. A call to her daughter confirmed that she was safe.

“I will never be able to shake that voice and the desperate cry for help from my mind,” Ms DeStefano said at the time. “There’s no limit to the evil AI can bring. If left uncontrolled and unregulated, it will rewrite the concept of what is real and what is not.”

Unfortunately, existing legislation falls short of protecting victims of this type of scam.

IP expert Michael Teich wrote in an August column for IPWatchdog that laws designed to protect privacy may apply in some cases of voice-cloning scams, but they’re only actionable by the person whose voice was used, not the victim of the fraud.

Meanwhile, existing copyright laws do not recognise ownership of a person’s voice.

“That has left me frustrated because I have been involved in cases of consumer fraud, and I almost fell for this,” Mr Schildhorn told Congress. “The only thing I thought I could do was warn people ... I’ve received calls from people across the country who ... have lost money, and they were devastated. They were emotionally and physically hurt, they almost called to get a phone call hug.”

The FTC has yet to set any requirements for the companies developing voice-cloning programs, but according to Mr Teich, those companies could face legal consequences if they fail to provide safeguards going forward.

To address the growing number of voice-cloning scams, the FTC has announced an open call to action. Participants are asked to develop solutions that protect consumers from voice-cloning harms, with a top prize of $25,000.

The agency asks victims of voice-cloning fraud to report it on its website.
