Police may have used 'dangerous' facial recognition unlawfully in UK, watchdog says

Information Commissioner calls for government to urgently draw up legal code of practice

Facial recognition technology may have been used unlawfully by police, a watchdog has warned while calling for urgent government regulation.

Raising “serious concerns” about the way it has been used by forces around the country, the Information Commissioner said that each deployment must be “strictly necessary” for law enforcement purposes and have an identified legal basis under data protection laws.

“Police forces must provide demonstrably sound evidence to show that live facial recognition (LFR) technology is strictly necessary, balanced and effective in each specific context in which it is deployed,” Elizabeth Denham said after her office released a report on its usage.

“[This is] a high statutory threshold that must be met to justify the use of LFR, and demonstrate accountability, under the UK’s data protection law.”

She added: “Never before have we seen technologies with the potential for such widespread invasiveness.”

The report highlighted a high rate of “false positive matches”, in which the software flags innocent members of the public to police as wanted criminals.

It noted studies showing the technology was less accurate for women and BAME subjects, and that unless police compensate for any inherent bias, their decisions may be unfair and “potentially unlawful”.

The watchdog called for the government to create a statutory and binding code of practice on the use of live facial recognition, which has spread beyond law enforcement into the hands of private companies and businesses.

“We found that the current combination of laws, codes and practices relating to LFR will not drive the ethical and legal approach that’s needed to truly manage the risk that this technology presents,” Ms Denham said.

“Moving too quickly to deploy technologies that can be overly invasive in people’s lawful daily lives risks damaging trust not only in the technology, but in the fundamental model of policing by consent.”

She added that the lack of a specific legal framework for facial recognition created inconsistency and increased the risk of data protection violations.

The Labour Party has pledged to regulate facial recognition if it wins power at the next election, while the Liberal Democrats would ban the technology until new laws can be drawn up.

Last month, a group of cross-party MPs including David Davis signed a letter calling for an immediate halt to the use of live facial recognition in British public spaces.

Mr Davis, a former cabinet minister, said: “There must be an immediate halt to the use of these systems to give parliament the chance to debate it properly and establish proper rules for the police to follow.”

The Information Commissioner’s investigation looked at deployments by South Wales Police and London's Metropolitan Police, which have both faced legal challenges.

The High Court found that South Wales Police had used facial recognition lawfully in a landmark case, but the Information Commissioner said judges only considered two specific deployments in Cardiff and their ruling “should not be seen as a blanket authorisation for police forces to use LFR systems in all circumstances”.

Ms Denham issued a legal opinion to be followed by police forces going forward, which states that facial recognition use must be “governed, targeted and intelligence-led”, rather than the “blanket, opportunistic and indiscriminate processing” already seen in some areas.

The report says that the watchlists against which scanned faces are compared must also meet the “strict necessity test” and include only people wanted for serious offences who are likely to be in the target area.

The watchdog said that the custody image database being used as a source contains unlawfully retained mugshots which should have been deleted.

Following widespread criticism over the high cost of facial recognition and low number of arrests resulting from deployments, Ms Denham said police must consider effectiveness and ensure the technology provides a “demonstrable benefit to the public”.

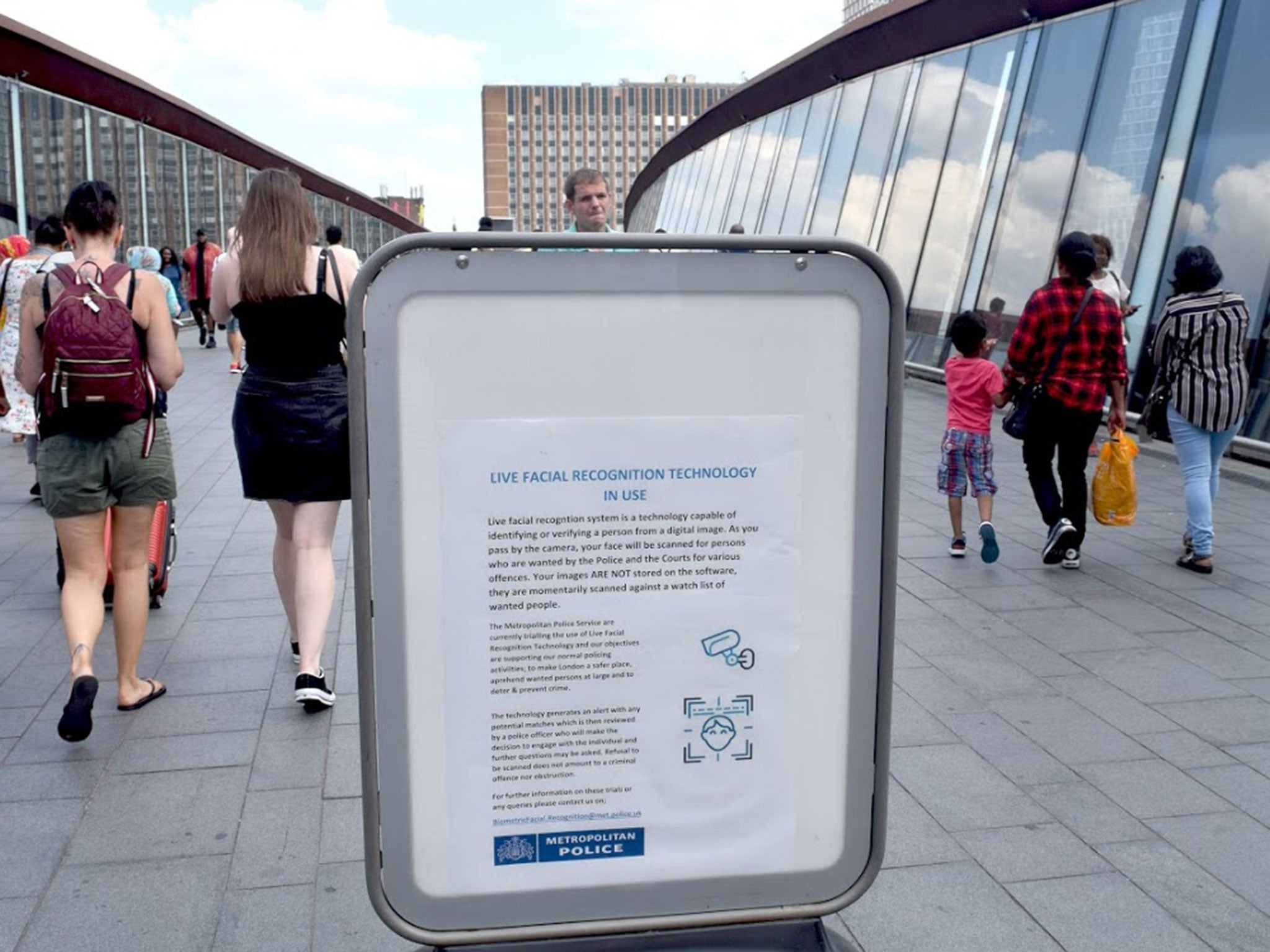

After finding that most members of the public already scanned had not consented to the deployments or seen signs saying facial recognition was in use, the Information Commissioner said “clear, effective and appropriate signage” must be used and police must offer the public information on their rights.

The watchdog said that in one Metropolitan Police trial in Romford, people were caught on camera before reaching warning signs, after The Independent found people unaware of the deployments.

Scotland Yard pledged that people would not be viewed as suspicious merely for avoiding cameras, but in January officers stopped a man who covered his face and fined him after he swore at them.

Big Brother Watch, which has crowdfunded an ongoing legal challenge against the Metropolitan Police and home secretary, said the “dangerous technology” erodes civil liberties.

Director Silkie Carlo said: “Police have been let off the leash, splashing public money around on Orwellian technologies, and regulators trying to clean up the mess is too little too late. This is a society-defining civil liberties issue that requires not just regulators but political leadership.”

The Liberty campaign group said facial recognition represented an “unprecedented intrusion on our privacy”.

Policy and campaigns officer Hannah Couchman added: “The use of this oppressive mass surveillance technology cannot be justified, which is why Liberty is calling for a ban – it has no place on our streets.”

The Information Commissioner’s Office is separately investigating the use of facial recognition in the private sector, following a scandal over its use on the King’s Cross estate.

The government did not immediately answer the watchdog's call but has committed to updating the Surveillance Camera Code, which covers the use of live facial recognition.

A Home Office spokesperson said: “The government supports the police as they trial new technologies to protect the public, including facial recognition, which has helped them identify, locate and arrest suspects that wouldn’t otherwise have been possible. The High Court recently found there is a clear and sufficient legal framework for the use of live facial recognition technology.

“We are always willing to consider proposals to improve the legal framework and promote public safety and confidence in the police.”
