Facial recognition company used by British police fined £7.5m over unlawful image database

Service ‘effectively monitors the public’s behaviour and offers it as a commercial service’, watchdog finds

A facial recognition company used by British police forces has been fined more than £7.5m for creating an unlawful database of 20 billion images.

The Information Commissioner’s Office said Clearview AI had scraped people’s private photos from social media and across the internet without their knowledge.

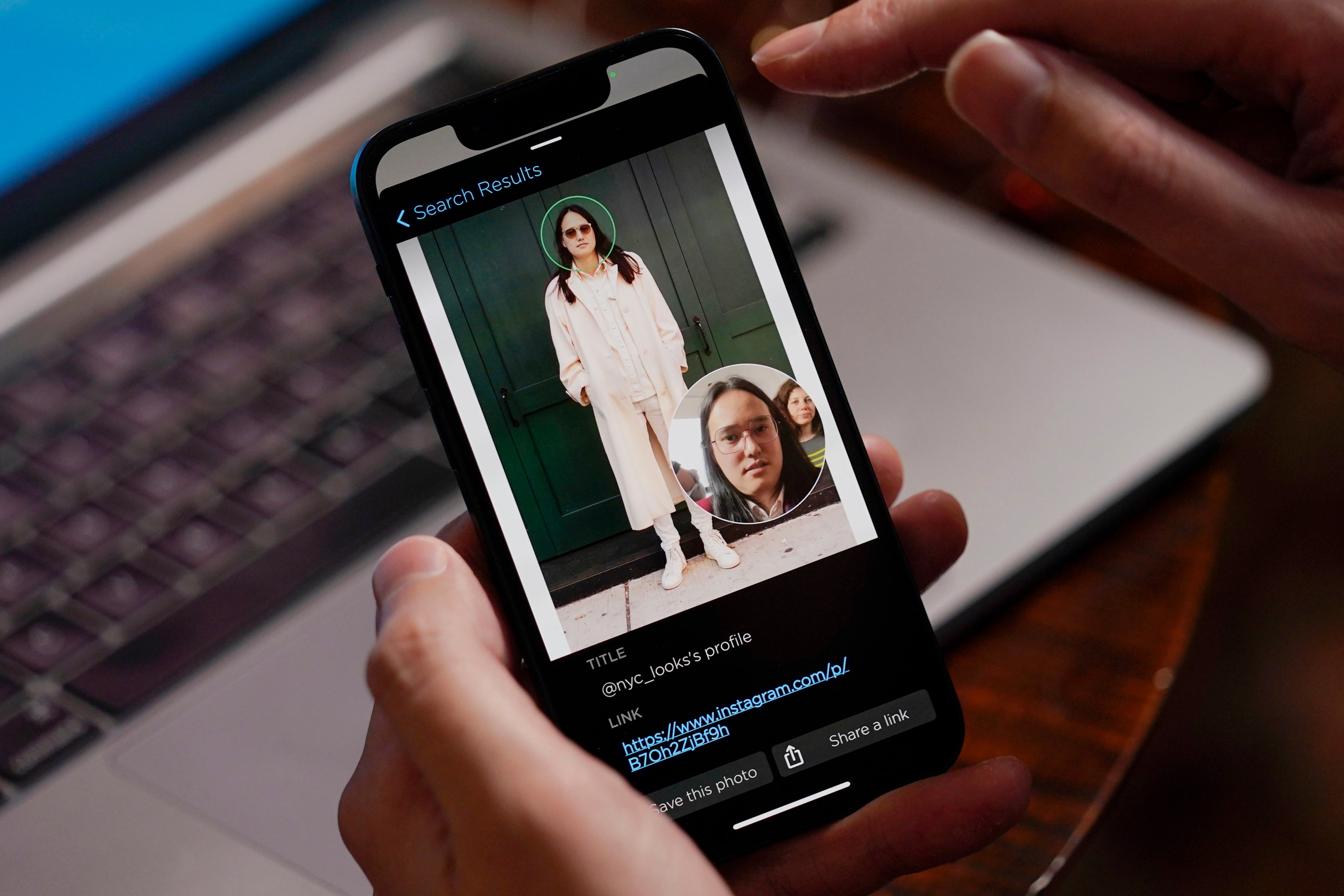

It created an app, sold to customers including the police, where they could upload a photograph to check for a match against images in the database.

The app would provide a list of images that have similar characteristics, with a link to the websites where they were sourced.

“Given the high number of UK internet and social media users, Clearview AI Inc’s database is likely to include a substantial amount of data from UK residents, which has been gathered without their knowledge,” a spokesperson for the Information Commissioner said.

“Although Clearview AI Inc no longer offers its services to UK organisations, the company has customers in other countries, so the company is still using personal data of UK residents.”

Documents reviewed by BuzzFeed News in 2020 indicated that the Metropolitan Police, National Crime Agency, Northamptonshire Police, North Yorkshire Police, Suffolk Constabulary, Surrey Police and Hampshire Police were among the forces to have used the technology.

The ICO found that Clearview AI had committed multiple breaches of data protection laws. These included failing to have a lawful reason for collecting people’s information, failing to be “fair and transparent”, and asking people who questioned whether they were on the database for additional personal information, including photos.

John Edwards, the Information Commissioner, said: “Clearview AI Inc has collected multiple images of people all over the world, including in the UK, from a variety of websites and social media platforms, creating a database with more than 20 billion images.

“The company not only enables identification of those people, but effectively monitors their behaviour and offers it as a commercial service. That is unacceptable. That is why we have acted to protect people in the UK by both fining the company and issuing an enforcement notice.

“People expect that their personal information will be respected, regardless of where in the world their data is being used.”

The fine was the result of a joint investigation conducted with the Australian information commissioner, which started in July 2020.

The ICO also issued an enforcement notice, ordering the company to stop obtaining and using the personal data of UK residents that is publicly available on the internet, and to delete the data of UK residents from its systems.

A legal firm representing Clearview AI said the fine was “incorrect as a matter of law”, because the company no longer does business in the UK and is “not subject to the ICO’s jurisdiction”.

Hoan Ton-That, the company’s CEO, said: “I am deeply disappointed that the UK Information Commissioner has misinterpreted my technology and intentions. I created the consequential facial recognition technology known the world over.

“My company and I have acted in the best interests of the UK and their people by assisting law enforcement in solving heinous crimes against children, seniors, and other victims of unscrupulous acts.

“It breaks my heart that Clearview AI has been unable to assist when receiving urgent requests from UK law enforcement agencies seeking to use this technology to investigate cases of severe sexual abuse of children in the UK. We collect only public data from the open internet and comply with all standards of privacy and law.”

Clearview AI’s app was separate from live facial recognition systems used by the Metropolitan Police and South Wales Police, which use video footage to scan for matches to a “watchlist” of images in real time.

The use of the technology, which has also been expanding into the private sector, has drawn controversy and several legal challenges.

A man who was scanned in Cardiff won a Court of Appeal case in 2020, with judges finding that the automatic facial recognition used violated human rights, data protection and equality laws.
