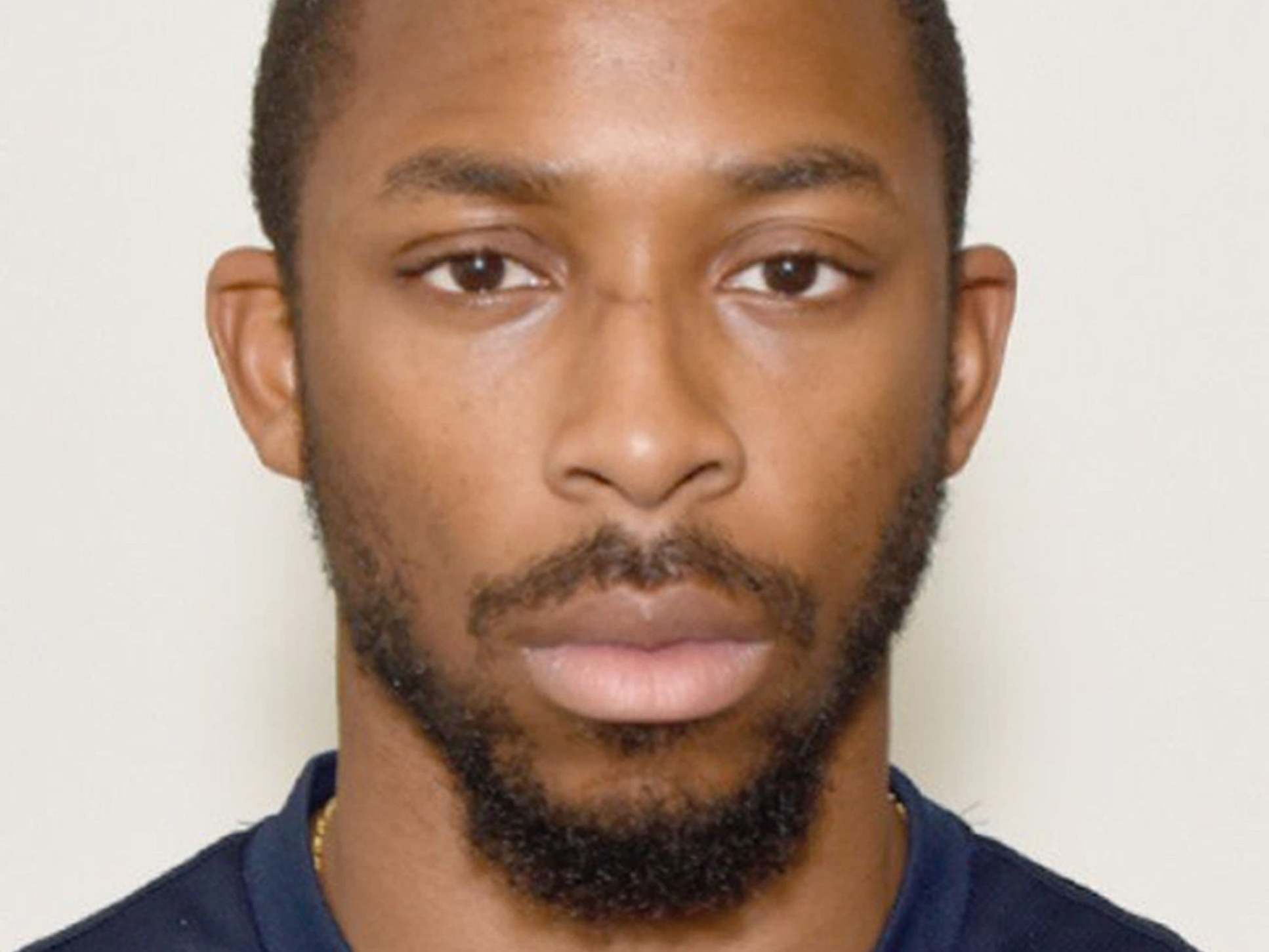

'I was a bit annoyed': Black man's lips flagged by passport checker as open mouth

‘My mouth is closed, I just have big lips,’ Joshua Bada tells machine

A black man who tried to renew his passport online had his image flagged by an automated photo checker because it mistook his lips for an open mouth.

Joshua Bada used a high quality photo booth to take the image for the application process.

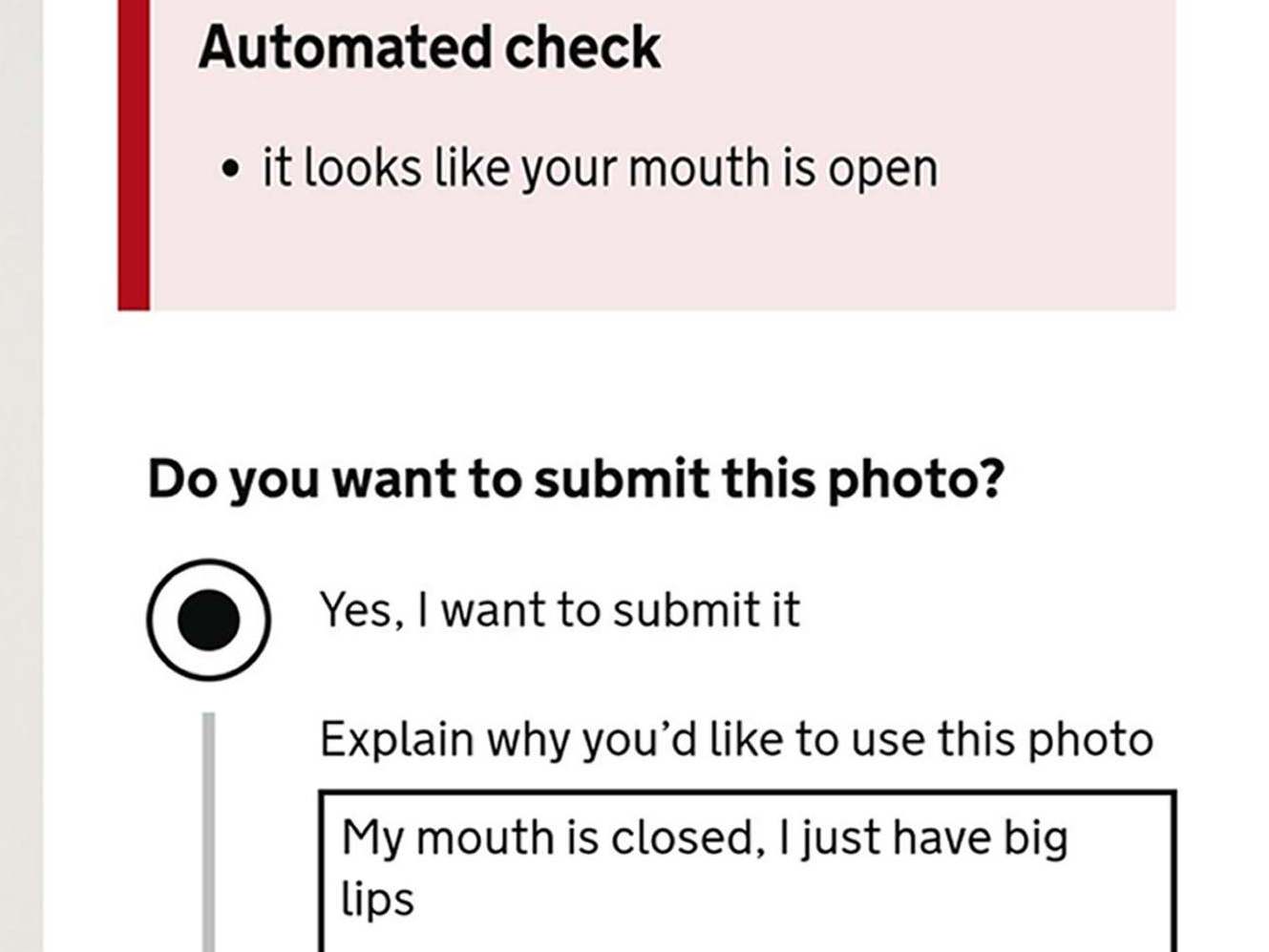

But the automated facial detection system, which tells applicants whether their images meet the strict requirements for passport photos, told him: “It looks like your mouth is open”.

Asked by the system if he wanted to submit the photo anyway, Mr Bada said he was forced to explain the situation in a comment box.

“My mouth is closed, I just have big lips,” he wrote.

The 28-year-old said he had previously struggled with technology which failed to recognise his features.

“When I saw it, I was a bit annoyed but it didn’t surprise me because it’s a problem that I have faced on Snapchat with the filters, where it hasn’t quite recognised my mouth, obviously because of my complexion and just the way my features are,” he said.

“After I posted it online, friends started getting in contact with me, saying, it’s funny but it shouldn’t be happening.”

The incident is similar to one shared online in April by Cat Hallam, a black woman who lives in Staffordshire.

She became frustrated after the system told her it looked like her eyes were closed and that it could not find the outline of her head.

“The first time I tried uploading it and it didn’t accept it,” she said at the time. “So perhaps the background wasn’t right. I opened my eyes wider, I closed my mouth more, I pushed my hair back and did various things, changed clothes as well – I tried an alternative camera.”

The education technologist added that she was irritated about having to pay extra for a photo booth image when free smartphone photos worked for other people.

She added: “How many other individuals are probably either spending money unnecessarily or having to go through the process on numerous occasions of a system that really should be able to factor in a broad range of ethnicities?”

Ms Hallam pressed on with one of the images and renewed her passport without further issues.

She said the problem was one of algorithmic bias, but that she did not believe it amounted to racism.

When she tweeted about the issue, the Passport Office replied, saying it was sorry the service had not “worked as it should”.

After the latest incident, a Home Office spokesperson said: “We are determined to make the experience of uploading a digital photograph as simple as possible, and will continue working to improve this process for all of our customers. In the vast majority of cases where a photo does not pass our automated check, customers may override the outcome and submit the photo as part of their application.

“The photo checker is a customer aid that is designed to check a photograph meets the internationally agreed standards for passports.”

Noel Sharkey, a professor of artificial intelligence and robotics at the University of Sheffield, said automated systems were known to have “problems with gender as well”.

He added: “It has a real problem with women too generally, and if you’re a black woman you’re screwed, it’s really bad, it’s not fit for purpose and I think it’s time that people started recognising that. People have been struggling for a solution for this in all sorts of algorithmic bias, not just face recognition, but algorithmic bias in decisions for mortgages, loans, and everything else and it’s still happening.”

Additional reporting by agencies