Police urged to axe facial recognition after research finds four of five ‘suspects’ are innocent

Report also raises ‘significant concerns’ that Metropolitan Police’s identification system breaks human rights laws

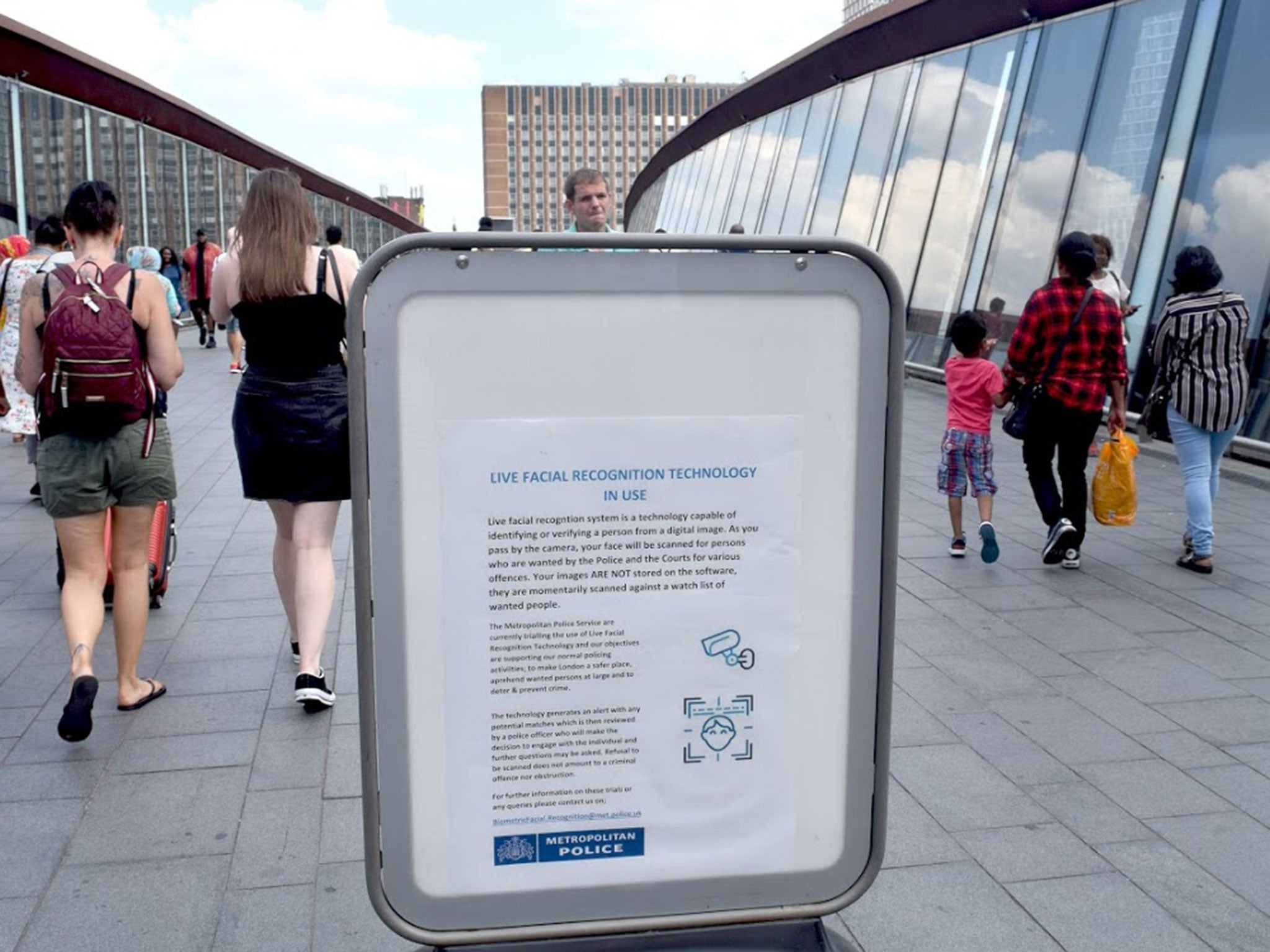

Scotland Yard has been urged to stop using facial recognition technology after independent research found that four out of five people identified as possible suspects were innocent.

Researchers at the University of Essex found that the Metropolitan Police’s live facial recognition (LFR) system was inaccurate in the vast majority of cases: the technology made only eight correct matches out of 42 across the six trials evaluated.
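The “four out of five” figure follows directly from those numbers. As a quick check of the arithmetic, using only the two counts reported above (the variable names are illustrative):

```python
# Figures reported in the research: 42 matches across six trials, 8 of them correct.
total_matches = 42
correct_matches = 8

incorrect_matches = total_matches - correct_matches  # matches that were wrong
share_incorrect = incorrect_matches / total_matches
share_correct = correct_matches / total_matches

print(f"Incorrect matches: {incorrect_matches} of {total_matches}")
print(f"Share incorrect: {share_incorrect:.1%}")  # roughly four in five
print(f"Share correct: {share_correct:.1%}")      # roughly one in five
```

That gives 34 incorrect matches, or about 81 per cent of the total, which rounds to four in every five.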

The report, commissioned by the Met, raised “significant concerns” that use of the controversial technology breaks human rights laws.

It also warned it is “highly possible” the use of facial recognition would be held unlawful if challenged in court.

The report’s authors, Professor Peter Fussey and Dr Daragh Murray, called for all of Scotland Yard’s live trials of LFR to be halted until the concerns are addressed.

Duncan Ball, the Met’s deputy assistant commissioner, said the force was “extremely disappointed with the negative and unbalanced tone” of the report – insisting the pilot had been successful.

The NeoFace system uses special cameras to scan the structure of faces in a crowd to create a digital image, and compares the result against a watch list made up of pictures of people who have been taken into police custody.

If a match is found, officers at the scene where cameras are set up are alerted.
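In general terms, the scan, compare and alert steps described above can be sketched as follows. This is a generic illustration only, not a description of how NeoFace works internally: the use of numeric face vectors, the cosine similarity measure, the 0.9 threshold and every name in the code are assumptions made for the example.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two face vectors (1.0 means identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def check_against_watch_list(face_vector, watch_list, threshold=0.9):
    """Return the best-matching watch list entry, or None if nothing clears the threshold.

    The threshold is hypothetical: set it lower and more innocent people are
    flagged, set it higher and more listed people are missed.
    """
    best_name, best_score = None, 0.0
    for name, listed_vector in watch_list.items():
        score = cosine_similarity(face_vector, listed_vector)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None

# Hypothetical watch list: one made-up vector per listed person.
watch_list = {"person_a": [1.0, 0.0, 0.0], "person_b": [0.0, 1.0, 0.0]}

alert = check_against_watch_list([0.98, 0.05, 0.0], watch_list)   # close to person_a
no_alert = check_against_watch_list([0.5, 0.5, 0.7], watch_list)  # close to nobody
print(alert, no_alert)
```

In a sketch like this, an alert only means that a score cleared the threshold. Whether the person stopped is in fact the person on the list is a separate question, which is what the Essex researchers assessed.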

According to the Met’s website, the force has used the technology several times since 2016, including at Notting Hill Carnival that year and the following year.

Use of facial recognition is currently under judicial review in Wales following the technology’s first ever legal challenge, brought against South Wales Police by Liberty.

Hannah Couchman, policy and campaigns officer for the civil rights group, renewed its call for a ban on the technology after the research was published.

“This damning assessment of the Met’s trial of facial recognition technology only strengthens Liberty’s call for an immediate end to all police use of this deeply invasive tech in public spaces,” she said.

“It would display an astonishing and deeply troubling disregard for our rights if the Met now ignored this independent report and continued to deploy this dangerous and discriminatory technology. We will continue to fight against police use of facial recognition which has no place on our streets.”

The report’s authors were granted access to the final six of 10 trials run by the Metropolitan Police, which took place between June 2018 and February 2019.

The research also highlighted concerns over the criteria for the watch list, as information was often not current, which led to police stopping people whose cases had already been dealt with.

It also found numerous operational failures and raised a number of concerns regarding “consent, public legitimacy and trust”.

Dr Murray said: “This report raises significant concerns regarding the human rights law compliance of the trials. The legal basis for the trials was unclear and is unlikely to satisfy the ‘in accordance with the law’ test established by human rights law.

“It does not appear that an effective effort was made to identify human rights harms or to establish the necessity of LFR. Ultimately, the impression is that human rights compliance was not built into the Metropolitan Police’s systems from the outset, and was not an integral part of the process.”

Deputy Assistant Commissioner Ball said in response to the report: “This is new technology, and we’re testing it within a policing context.

“The Met’s approach has developed throughout the pilot period, and the deployments have been successful in identifying wanted offenders.

“We believe the public would absolutely expect us to try innovative methods of crime fighting in order to make London safer.”

Additional reporting by PA