
Is the standard model of particle physics broken?

Roger Jones on why creating a new model to explain particle physics will help us delve deep into the universe’s mysteries

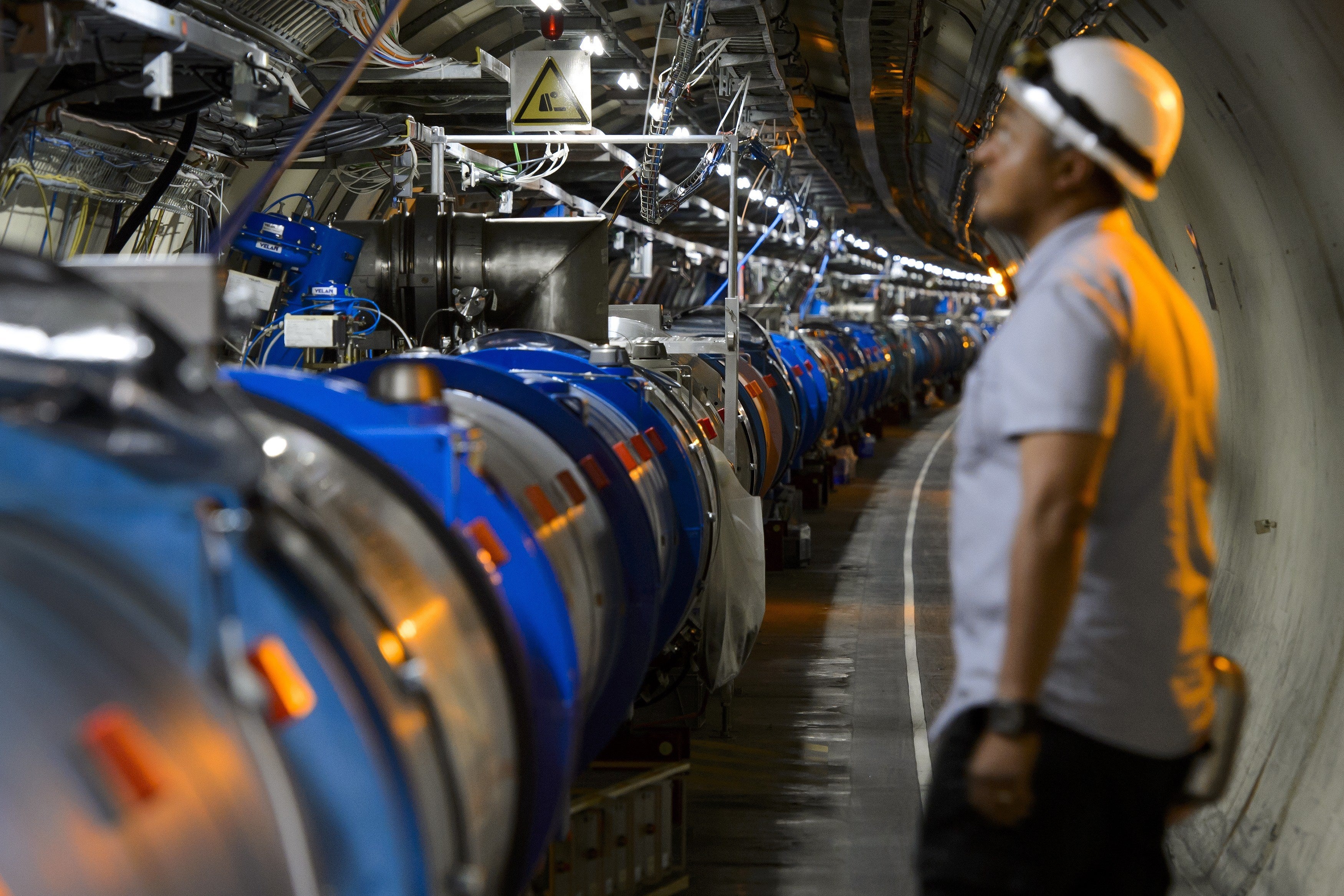

As a physicist working on the Large Hadron Collider (LHC) at Cern, one of the most frequent questions I am asked is “When are you going to find something?” Resisting the temptation to sarcastically reply “Besides the Higgs boson, which won the Nobel Prize, and a whole slew of new composite particles?”, I realise that the reason the question is posed so often is down to how we have portrayed progress in particle physics to the wider world.

We often talk about progress in terms of discovering new particles, and that is often what progress looks like. Studying a new, very heavy particle helps us view underlying physical processes – often without annoying background noise. That makes it easy to explain the value of the discovery to the public and politicians.

Recently, however, a series of precise measurements of already known, bog-standard particles and processes has threatened to shake up physics. And with the LHC getting ready to run at higher energy and intensity than ever before, it is time to start discussing the implications widely.

In truth, particle physics has always proceeded in two ways, of which discovering new particles is one. The other is to make very precise measurements that test the predictions of theories, and to look for deviations from what is expected.

The early evidence for Einstein’s theory of general relativity, for example, came from small deviations in the apparent positions of stars and in the motion of Mercury in its orbit.

Three key findings

Particles obey a counter-intuitive but hugely successful theory called quantum mechanics. This theory shows that particles far too massive to be made directly in a lab collision can still influence what other particles do (through something called “quantum fluctuations”). Measurements of such effects are very complex, however, and much harder to explain to the public.


But recent results hinting at unexplained new physics beyond the standard model are of this second type. Detailed studies from the LHCb experiment found that a particle known as a beauty quark (quarks are the building blocks of the protons and neutrons in the atomic nucleus) “decays” (falls apart) into an electron more often than into a muon – the electron’s heavier, but otherwise identical, sibling. According to the standard model, this shouldn’t happen – hinting that new particles or even forces of nature may influence the process.

Intriguingly, though, measurements of similar processes involving “top quarks” from the ATLAS experiment at the LHC show that these decays happen at equal rates for electrons and muons.

Meanwhile, the Muon g-2 experiment at Fermilab in the US has recently made very precise studies of how muons “wobble” as their “spin” (a quantum property) interacts with surrounding magnetic fields. It found a small but significant deviation from some theoretical predictions – again suggesting that unknown forces or particles may be at work.

The latest surprising result is a measurement of the mass of a fundamental particle called the W boson, which carries the weak nuclear force responsible for some types of radioactive decay. After many years of data taking and analysis, the CDF experiment, also at Fermilab, suggests it is significantly heavier than theory predicts, deviating by an amount that chance alone would produce less than once in a million million experiments. Again, it may be that yet undiscovered particles are adding to its mass.
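Physicists usually express odds like these as a “significance” measured in standard deviations, or “sigma”. The short Python sketch that follows shows how a number of sigma translates into odds against chance. It is a simplified illustration, assuming the measurement errors follow a Gaussian (normal) distribution and counting only deviations in one direction; real analyses are considerably more involved, and the function names are purely illustrative.

```python
import math


def one_sided_p_value(n_sigma: float) -> float:
    """Probability that a Gaussian fluctuation exceeds n_sigma standard
    deviations in one direction (the upper-tail area of a normal curve).
    Assumes purely Gaussian errors, which real experiments only approximate."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2))


def odds_against_chance(n_sigma: float) -> float:
    """Roughly how many repeated experiments you would expect to run
    before pure chance produced a deviation at least this large."""
    return 1.0 / one_sided_p_value(n_sigma)


if __name__ == "__main__":
    # Each extra sigma makes a chance fluctuation dramatically less likely.
    for n in (3, 5, 7):
        print(f"{n} sigma: about 1 in {odds_against_chance(n):,.0f}")
```

Under these assumptions, 5 sigma, the conventional threshold for claiming a discovery, corresponds to odds of roughly one in 3.5 million, while a deviation of around 7 sigma brings the odds to the order of a million million.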

Interestingly, however, this result also disagrees with some lower-precision measurements of the W boson mass from the LHC (presented in this study and this one).

The verdict

While we are not absolutely certain these effects require a novel explanation, the evidence seems to be growing that some new physics is needed.

Of course, there will be almost as many new mechanisms proposed to explain these observations as there are theorists. Many will look to various forms of “supersymmetry”. This is the idea that there are twice as many fundamental particles as the standard model contains, with each particle having a “super partner”. Such theories may also involve additional Higgs bosons (associated with the field that gives fundamental particles their mass).

Others will go beyond this, invoking ideas that have been less fashionable recently, such as “technicolour”. This would imply forces of nature beyond the four we know (gravity, electromagnetism and the weak and strong nuclear forces), and might mean that the Higgs boson is in fact a composite object made of other particles. Only experiments will reveal the truth of the matter – which is good news for experimentalists.

The experimental teams behind the new findings are all well respected and have worked on the problems for a long time. That said, it is no disrespect to them to note that these measurements are extremely difficult to make. What’s more, predictions of the standard model usually require calculations where approximations have to be made. This means different theorists can predict slightly different masses and rates of decay depending on the assumptions and level of approximation made. So, it may be that when we do more accurate calculations, some of the new findings will fit with the standard model.

Equally, it may be that researchers are using subtly different interpretations and so obtaining inconsistent results. Comparing two experimental results requires careful checking that the same level of approximation has been used in both cases.

These are both examples of sources of “systematic uncertainty”, and while everyone involved does their best to quantify them, unforeseen complications can cause them to be under- or overestimated.

None of this makes the current results any less interesting or important. What the results illustrate is that there are multiple pathways to a deeper understanding of the new physics, and they all need to be explored.

With the restart of the LHC, there are still prospects of new particles being made through rarer processes, or of unearthing particles that are still hidden under background noise.

Roger Jones is a professor of physics and head of department at Lancaster University. This article first appeared on The Conversation.
