We created holograms you can actually touch

Hologram technology looks set to play a growing part in our lives, but what possibilities would open up if we could touch holograms? Ravinder Dahiya reports

The TV show Star Trek: The Next Generation introduced millions of people to the idea of a holodeck: an immersive, realistic 3D holographic projection of a complete environment that you could interact with and even touch.

In the 21st century, holograms are already being used in a variety of ways, such as in medical systems, education, art, security and defence. Scientists are still developing ways to use lasers, modern digital processors, and motion-sensing technologies to create several different types of holograms that could change the way we interact.

My colleagues and I, working in the University of Glasgow’s bendable electronics and sensing technologies research group, have now developed a system of holograms of people that uses “aerohaptics”, creating feelings of touch with jets of air. Those jets deliver a sensation of touch on people’s fingers, hands and wrists.

In time, this could be developed to allow you to meet a virtual avatar of a colleague on the other side of the world and really feel their handshake. It could even be the first step towards building something like a holodeck.

To create this feeling of touch we use affordable, commercially available parts to pair computer-generated graphics with carefully directed and controlled jets of air.

In some ways, it’s a step beyond the current generation of virtual reality, which usually requires a headset to deliver 3D graphics, and smart gloves or handheld controllers to provide haptic feedback, a stimulation that feels like touch. Most of the wearable-gadget-based approaches are limited to controlling the virtual object that is being displayed.

Controlling a virtual object doesn’t give the feeling that you would experience when two people touch. The addition of an artificial touch sensation can deliver the extra dimension without having to wear gloves to feel objects, and so feels much more natural.

Using glass and mirrors

Our research uses graphics that provide the illusion of a 3D virtual image. It’s a modern variation on a 19th-century illusion technique known as Pepper’s ghost, which thrilled Victorian theatregoers with visions of the supernatural onstage.

The system uses glass and mirrors to make a two-dimensional image appear to hover in space without the need for any additional equipment. And our haptic feedback is created with nothing but air.

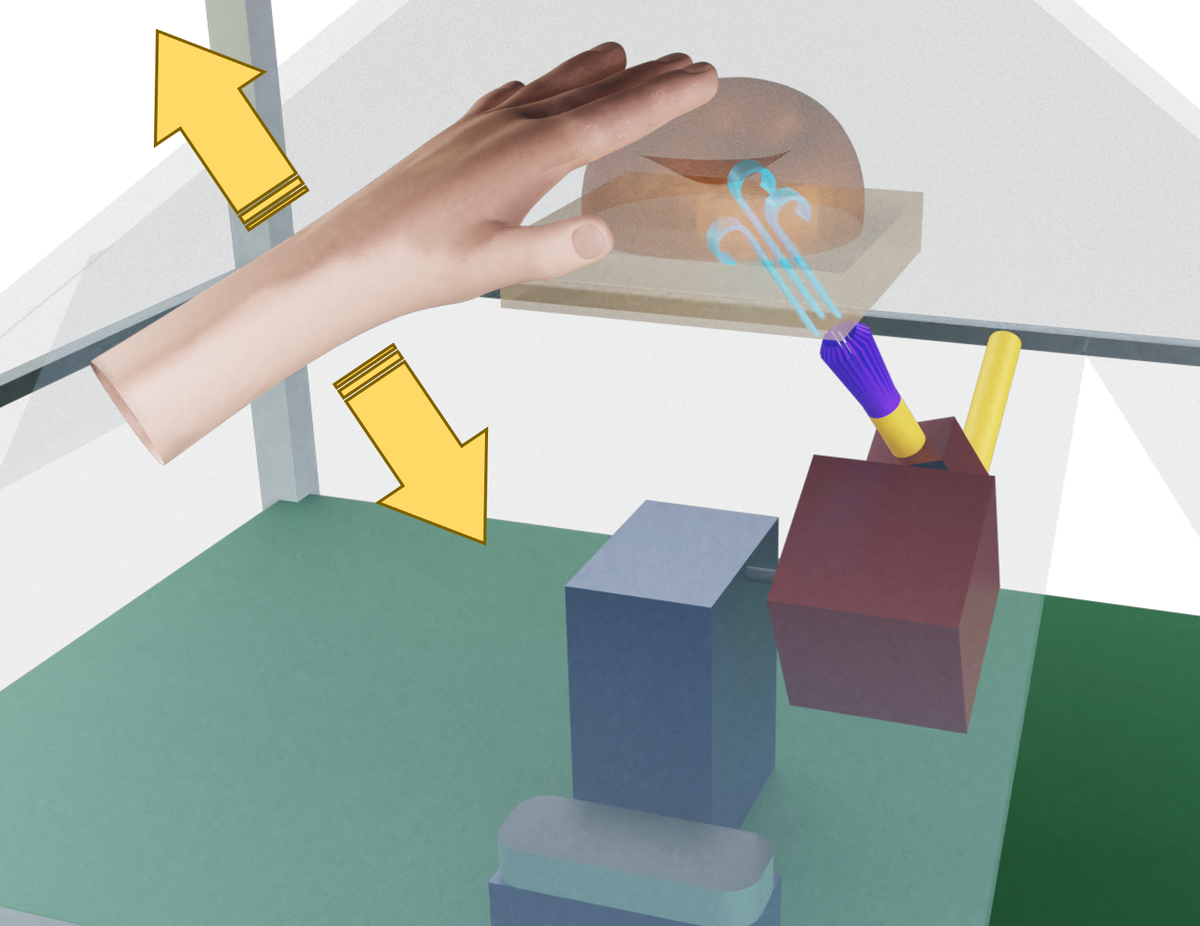

The mirrors making up our system are arranged in a pyramid shape with one open side. Users put their hands through the open side and interact with computer-generated objects, which appear to be floating in free space inside the pyramid. The objects are graphics, created and controlled by the Unity game engine, software that is often used to create 3D objects and worlds in videogames.

Located just below the pyramid are a sensor that tracks the movements of users’ hands and fingers, and a single air nozzle, which directs jets of air towards them to create complex sensations of touch. The overall system is directed by electronic hardware programmed to control nozzle movements. We developed an algorithm that allows the air nozzle to respond to the movements of users’ hands with appropriate combinations of direction and force.
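As a rough illustration of what one step of such a control loop might involve, the Python sketch below turns a tracked hand position into pan and tilt angles for a nozzle and limits a requested force to a normalised range. It is an illustrative sketch, not the algorithm used in the Glasgow system: the coordinate frame (metres, z pointing up, nozzle at the origin), the function names `aim_nozzle` and `clamp_force`, and the 0-to-1 force scale are all assumptions made for the example.

```python
import math


def aim_nozzle(hand_xyz, nozzle_xyz=(0.0, 0.0, 0.0)):
    """Return (pan_deg, tilt_deg, distance_m) pointing the nozzle at the hand.

    Assumed frame: x and y are horizontal, z points up, units are metres.
    """
    dx = hand_xyz[0] - nozzle_xyz[0]
    dy = hand_xyz[1] - nozzle_xyz[1]
    dz = hand_xyz[2] - nozzle_xyz[2]

    horizontal = math.hypot(dx, dy)          # distance in the x-y plane
    distance = math.hypot(horizontal, dz)    # straight-line distance
    if distance == 0.0:
        raise ValueError("hand position coincides with the nozzle")

    pan_deg = math.degrees(math.atan2(dy, dx))           # rotation about z
    tilt_deg = math.degrees(math.atan2(dz, horizontal))  # elevation angle
    return pan_deg, tilt_deg, distance


def clamp_force(requested):
    """Limit a requested jet force to the assumed normalised range 0..1."""
    return max(0.0, min(1.0, requested))


if __name__ == "__main__":
    # Hypothetical reading: hand 20 cm directly above the nozzle.
    pan, tilt, dist = aim_nozzle((0.0, 0.0, 0.2))
    print(f"pan={pan:.1f} deg, tilt={tilt:.1f} deg, distance={dist:.2f} m")
```

In this sketch a hand directly above the nozzle yields a tilt of 90 degrees. A real system would also have to account for sensor latency and the time the air takes to reach the skin, which are left out here.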

One of the ways in which we’ve demonstrated the capabilities of the “aerohaptic” system is with an interactive projection of a basketball, which can be convincingly touched, rolled and bounced. The touch feedback from the system’s air jets is also modulated based on the virtual surface of the basketball, allowing users to feel the rounded shape of the ball as it rolls from their fingertips when they bounce it, and the slap in their palm when it returns.

Users can even push the virtual ball with varying force, and sense the resulting difference in how a hard bounce or a soft bounce feels in their palm. Even something as apparently simple as bouncing a basketball required us to work hard to model the physics of the action and how we could replicate that familiar sensation with jets of air.
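To give a sense of the kind of physics involved, the Python sketch below uses simple projectile equations to estimate how fast a pushed ball returns to the hand after one bounce, then maps that speed to a normalised jet intensity. This is a simplified model written for illustration, not the one used in our system: the restitution coefficient of 0.75, the 6 m/s speed cap, and the function names `return_speed` and `jet_intensity` are all assumed values and names, and air resistance and spin are ignored.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def return_speed(push_speed, hand_height, restitution=0.75):
    """Speed (m/s) at which the ball arrives back at hand height.

    push_speed:  downward speed given by the hand, in m/s
    hand_height: height of the hand above the floor, in metres
    restitution: assumed fraction of speed kept after the bounce
    Returns 0.0 if the ball does not rise back to hand height.
    """
    impact_sq = push_speed ** 2 + 2.0 * G * hand_height   # v^2 at the floor
    rebound_sq = (restitution ** 2) * impact_sq           # v^2 just after bounce
    remaining_sq = rebound_sq - 2.0 * G * hand_height     # v^2 at hand height
    return math.sqrt(remaining_sq) if remaining_sq > 0.0 else 0.0


def jet_intensity(speed, max_speed=6.0):
    """Map a return speed to an assumed normalised jet intensity in 0..1."""
    return max(0.0, min(1.0, speed / max_speed))


if __name__ == "__main__":
    # Compare a soft and a hard push from a hand held 1 m above the floor.
    for push in (5.0, 8.0):
        speed = return_speed(push, hand_height=1.0)
        print(f"push {push} m/s -> intensity {jet_intensity(speed):.2f}")
```

Under these assumptions a harder push produces a faster return and therefore a stronger jet, which is the qualitative difference between a hard and a soft bounce.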

Smells of the future

While we don’t expect to be delivering a full Star Trek holodeck experience in the near future, we’re already boldly going in new directions to add functions to the system. Soon, we expect to be able to modify the temperature of the airflow to allow users to feel hot or cold surfaces. We’re also exploring the possibility of adding scents to the airflow, deepening the illusion of virtual objects by allowing users to smell as well as touch them.

As the system expands and develops, we expect that it may find uses in a wide range of sectors. Delivering more absorbing videogame experiences without having to wear cumbersome equipment is an obvious one, but it could also allow more convincing teleconferencing. You could even take turns to add components to a virtual circuit board as you collaborate on a project.

It could also help clinicians to collaborate on treatments for patients, and make patients feel more involved and informed in the process. Doctors could view, feel and discuss the features of tumour cells, and show patients plans for a medical procedure.

Ravinder Dahiya is a professor of electronics and nanoengineering at the University of Glasgow. This article first appeared on The Conversation.
