How much longer do we have left on Earth?

We’re becoming more and more worried about human extinction, but this isn’t anything new, argues Thomas Moynihan – it’s been on our radar ever since the Enlightenment

It is 1950 and a group of scientists are walking to lunch against the majestic backdrop of the Rocky Mountains. They are about to have a conversation that will become scientific legend. The scientists are at Los Alamos, the former ranch school that became the site of the Manhattan Project, where each of them played a part in developing the atomic bomb.

They are laughing about a recent cartoon in The New Yorker offering an unlikely explanation for a slew of missing public bins across New York City. The cartoon depicts “little green men” (complete with antennae and guileless smiles) who have stolen the bins, assiduously unloading them from their flying saucer.

By the time the party of nuclear scientists sits down to lunch, within the mess hall of a grand log cabin, one of their number turns the conversation to matters more serious. “Where, then, is everybody?” he asks. They all know that he is talking – sincerely – about extraterrestrials.

The question, which was posed by Enrico Fermi and is now known as Fermi’s paradox, has chilling implications.

Bin-stealing UFOs notwithstanding, humanity still hasn’t found any evidence of intelligent activity among the stars. Not a single feat of “astro-engineering”, no visible superstructures, not one space-faring empire, not even a radio transmission. It has been argued that the eerie silence from the sky above may well tell us something ominous about the future course of our own civilisation.

Such fears are ramping up. Last year, the astrophysicist Adam Frank implored an audience at Google to see climate change – and the newly baptised geological age of the Anthropocene – against this cosmological backdrop.

The Anthropocene refers to the effects of humanity’s energy-intensive activities upon Earth. Could it be that we do not see evidence of space-faring galactic civilisations because, due to resource exhaustion and subsequent climate collapse, none of them ever get that far? If so, why should we be any different?

A few months after Frank’s talk, in October 2018, the Intergovernmental Panel on Climate Change’s update on global warming caused a stir. It predicted a sombre future if we do not decarbonise. And in May, amid Extinction Rebellion’s protests, a new climate report upped the ante, warning: “Human life on earth may be on the way to extinction.”

Meanwhile, NASA has been publishing press releases about an asteroid set to hit New York within a month. This is, of course, a dress rehearsal: part of a “stress test” designed to simulate responses to such a catastrophe. That NASA is willing to fund such costly simulations suggests it takes the prospect of an impact seriously.

Space-tech billionaire Elon Musk has also been relaying his fears about artificial intelligence to YouTube audiences of tens of millions. He and others worry that the ability of AI systems to rewrite and improve themselves may trigger a sudden runaway process, or “intelligence explosion”, that will leave us far behind. Artificial superintelligence need not even be intentionally malicious in order to accidentally wipe us out.

In 2015, Musk donated to the University of Oxford’s Future of Humanity Institute, headed up by transhumanist Nick Bostrom. Nestled within the university’s medieval spires, Bostrom’s institute scrutinises the long-term fate of humanity and the perils we face at a truly cosmic scale, examining the risks of things such as climate change, asteroids and AI. It also looks into less well-publicised issues. Universe-destroying physics experiments, gamma-ray bursts, planet-consuming nanotechnology and exploding supernovae have all come under its gaze.

So it would seem that humanity is becoming more and more concerned with portents of human extinction. As a global community, we are increasingly conversant with ever more severe futures. Something is in the air.

But this tendency is not actually exclusive to the post-atomic age: our growing concern about extinction has a history. We have been growing more worried about our future for quite some time now. My PhD research tells the story of how this began. No one has yet told this story, but I feel it is an important one for our present moment.

I wanted to find out how current projects, such as the Future of Humanity Institute, emerge as offshoots and continuations of an ongoing project of “enlightenment” that we first set ourselves over two centuries ago. Recalling how we first came to care for our future helps reaffirm why we should continue to care today.

Extinction, 200 years ago

In 1816, something was also in the air. It was a 100-megatonne sulphate aerosol layer. Girdling the planet, it was made up of material thrown into the stratosphere by the eruption of Mount Tambora in Indonesia the previous year. It was one of the biggest volcanic eruptions since civilisation emerged.

Almost blotting out the sun, Tambora’s fallout caused a global cascade of harvest collapses, mass famine, cholera outbreaks and geopolitical instability. And it also provoked the first popular fictional depictions of human extinction. These came from a circle of writers including Lord Byron, Mary Shelley and Percy Shelley.

The group had been holidaying together in Switzerland when titanic thunderstorms, caused by Tambora’s climate perturbations, trapped them inside their villa. Here they discussed humanity’s long-term prospects.

Clearly inspired by these conversations and by 1816’s hellish weather, Byron immediately set to work on a poem entitled “Darkness”. It imagines what would happen if our sun died:

I had a dream, which was not all a dream

The bright sun was extinguish’d, and the stars

Did wander darkling in the eternal space

Rayless, and pathless, and the icy earth

Swung blind and blackening in the moonless air

Detailing the ensuing sterilisation of our biosphere, it caused a stir. And almost 150 years later, against the backdrop of escalating Cold War tensions, the Bulletin of the Atomic Scientists again called upon Byron’s poem to illustrate the severity of nuclear winter.

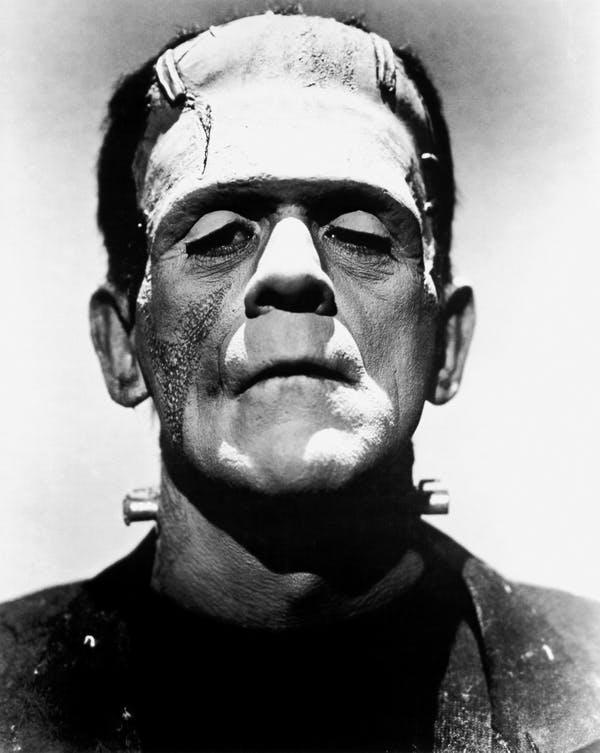

Two years later, Mary Shelley’s Frankenstein (perhaps the first book on synthetic biology) refers to the potential for the lab-born monster to outbreed and exterminate Homo sapiens as a competing species. In 1826, Mary Shelley went on to publish The Last Man, the first full-length novel on human extinction, in which humanity’s end comes at the hands of a pandemic pathogen.

Beyond these speculative fictions, other writers and thinkers had already discussed such threats. Samuel Taylor Coleridge, in 1811, daydreamed in his private notebooks about our planet being “scorched by a close comet and still rolling on – cities men-less, channels riverless, five mile deep”. In 1798, Mary Shelley’s father, the political thinker William Godwin, queried whether our species would “continue forever”.

Just a few years earlier, Immanuel Kant had pessimistically proclaimed that global peace may be achieved “only in the vast graveyard of the human race”. Soon after, he would worry about a descendant offshoot of humanity becoming more intelligent and pushing us aside.

Earlier still, in 1754, philosopher David Hume had declared that “man, equally with every animal and vegetable, will partake” in extinction. Godwin noted that “some of the profoundest enquirers” had lately become concerned with “the extinction of our species”.

In 1816, against the backdrop of Tambora’s glowering skies, a newspaper article drew attention to this growing murmur. It listed numerous extinction threats. From global refrigeration to rising oceans to planetary conflagration, it spotlighted the new scientific concern for human extinction. The “probability of such a disaster is daily increasing”, the article glibly noted. Not without chagrin, it closed by stating: “Here, then, is a very rational end of the world!”

Before this, we thought the universe was busy

So if people first started worrying about human extinction in the 18th century, where was the notion beforehand? There is enough apocalypse in scripture to last until judgement day, surely. But extinction has nothing to do with apocalypse. The two ideas are utterly different, even contradictory.

For a start, apocalyptic prophecies are designed to reveal the ultimate moral meaning of things. It’s in the name: apocalypse means revelation. Extinction, by direct contrast, reveals precisely nothing, because it predicts the end of meaning and morality itself – if there are no humans, there is nothing humanly meaningful left.

And this is precisely why extinction matters. Judgement day allows us to feel comfortable knowing that, in the end, the universe is ultimately in tune with what we call “justice”. Nothing was ever truly at stake. On the other hand, extinction alerts us to the fact that everything we hold dear has always been in jeopardy. In other words, everything is at stake.

Extinction was not much discussed before 1700 due to a background assumption, widespread prior to the Enlightenment, that it is the nature of the cosmos to be as full of moral value and worth as is possible. This, in turn, led people to assume that all other planets are populated with “living and thinking beings” exactly like us.

Although it only became widely accepted after Copernicus and Kepler in the 16th and 17th centuries, the idea of plural worlds certainly dates back to antiquity, with intellectuals from Epicurus to Nicholas of Cusa proposing that they were inhabited by lifeforms similar to our own. And, in a cosmos that is infinitely populated with humanoid beings, such beings – and their values – can never fully go extinct.

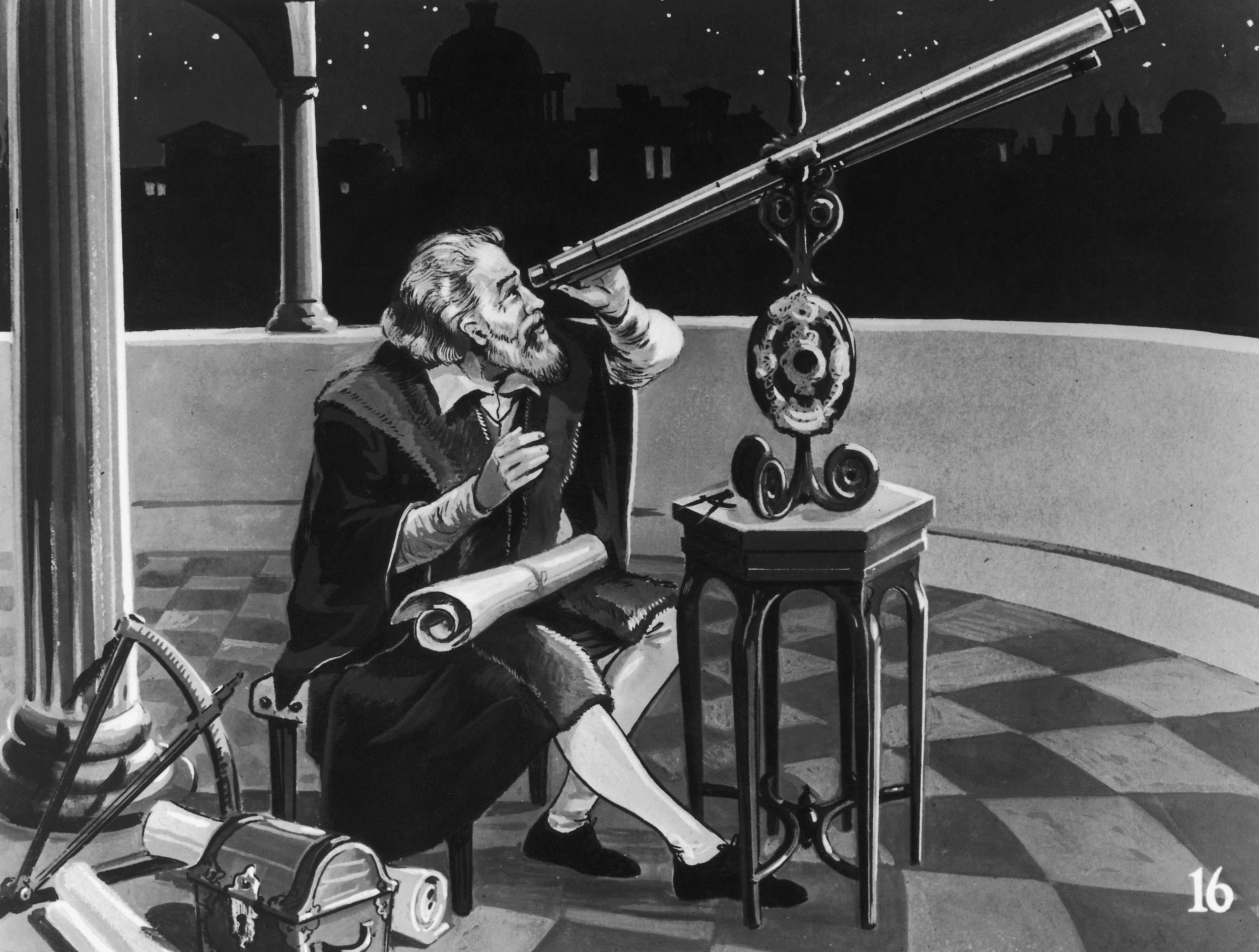

In the early 17th century, Galileo confidently declared that an entirely uninhabited or unpopulated world is “naturally impossible” on account of its being “morally unjustifiable”. Gottfried Leibniz later pronounced that there simply cannot be anything entirely “fallow, sterile, or dead in the universe”.

Along the same lines, the trailblazing scientist Edmond Halley (after whom the famous comet is named) reasoned in 1692 that the interior of our planet must likewise be “inhabited”. It would be “unjust” for any part of nature to be left “unoccupied” by moral beings, he argued.

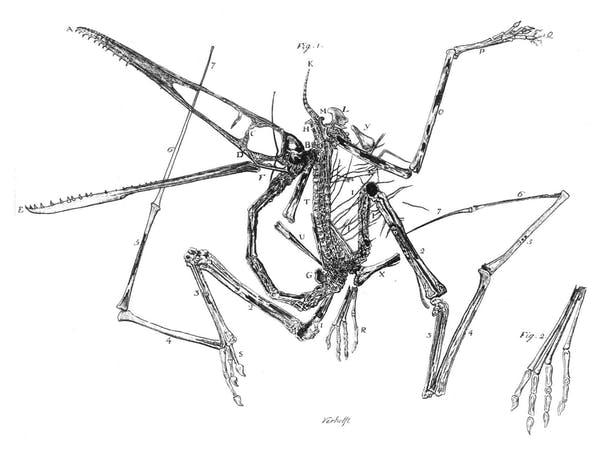

Around the same time, Halley provided the first theory of a “mass extinction event”. He speculated that comets had previously wiped out entire “worlds” of species. Nonetheless, he also maintained that after each previous cataclysm “human civilisation had reliably re-emerged”. And it would do so again. Only this, he said, could make such an event morally justifiable.

Later, in the 1760s, the philosopher Denis Diderot was attending a dinner party when he was asked whether humans would go extinct. He answered “yes”, but immediately qualified this by saying that after several millions of years the “biped animal who carries the name man” would inevitably re-evolve.

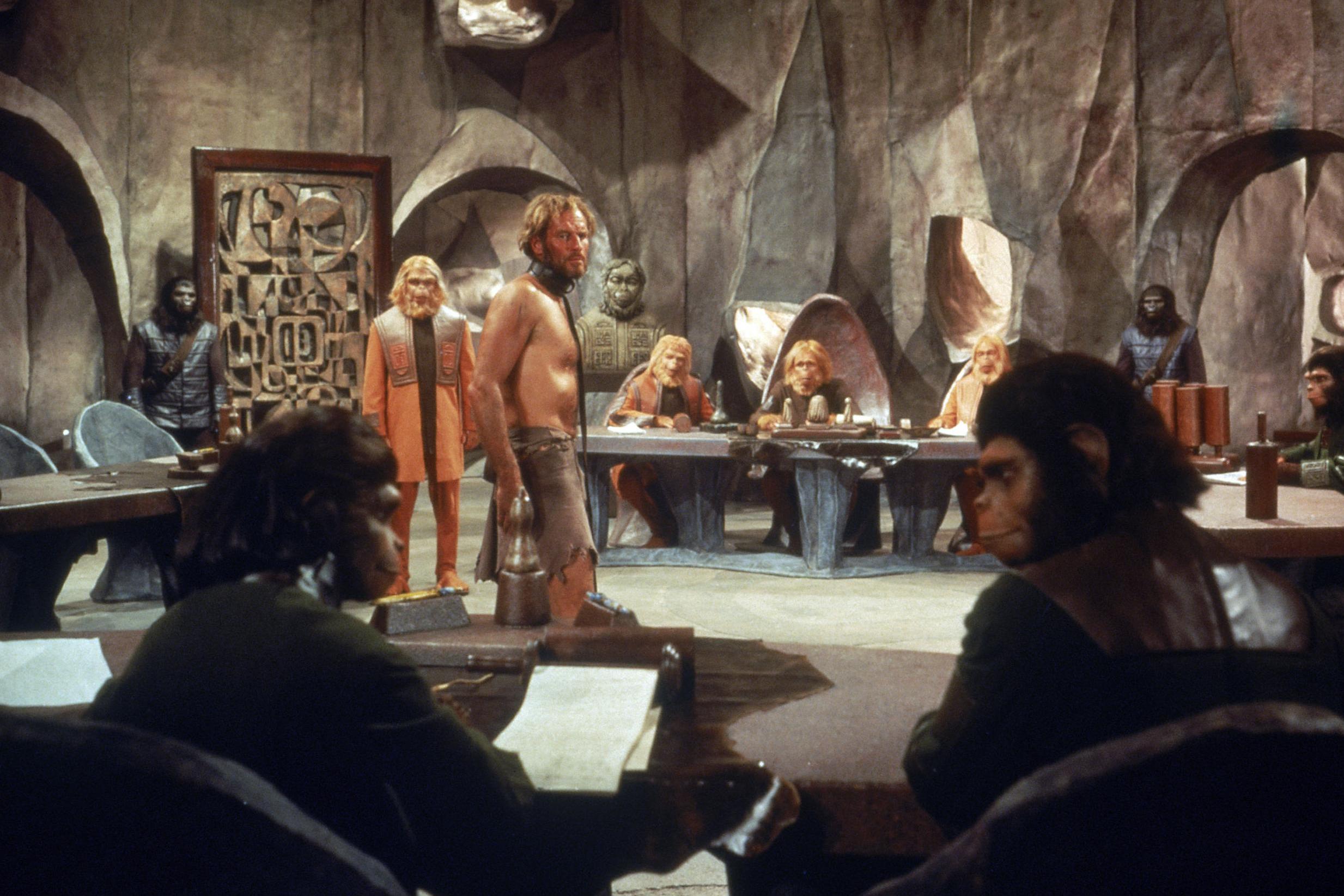

This is what the contemporary planetary scientist Charles Lineweaver identifies as the Planet of the Apes hypothesis. This refers to the misguided presumption that “human-like intelligence” is a recurrent feature of cosmic evolution: that alien biospheres will reliably produce beings like us. This is what is behind the wrong-headed assumption that, should we be wiped out today, something like us will inevitably return tomorrow.

Back in Diderot’s time, this assumption was pretty much the only game in town. It was why one British astronomer wrote, in 1750, that the destruction of our planet would matter as little as “Birth-Days or Mortalities” do on Earth.

This was typical thinking at the time. Within the prevailing worldview of eternally returning humanoids throughout an infinitely populated universe, there was no pressure or need to care for the future. Human extinction simply couldn’t matter. It was trivialised to the point of being unthinkable.

For the same reasons, the idea of the “future” was also missing. People simply didn’t care about it in the way we do now. Without the urgency of a future riddled with risk, there was no motivation to be interested in it, let alone attempt to predict and preempt it.

It was the dismantling of such dogmas, beginning in the 1700s and ramping up in the 1800s, that set the stage for the enunciation of Fermi’s paradox in the 1900s and which led to our growing appreciation of our cosmic precariousness today.

But then we realised the skies are silent

In order to truly care about our mutable position down here, we first had to notice that the cosmic skies above us are crushingly silent. Slow at first but soon gaining momentum, this realisation began to take hold around the same time that Diderot had his dinner party.

One of the first examples of a different mode of thinking I’ve found is from 1750, when the French polymath Claude-Nicolas Le Cat wrote a history of Earth. Like Halley, he posited the now familiar cycles of “ruin and renovation”. Unlike Halley, he was conspicuously unclear as to whether humans would return after the next cataclysm.

A shocked reviewer picked up on this, demanding to know whether “Earth shall be re-peopled with new inhabitants”. In reply, the author facetiously asserted that our fossil remains would “gratify the curiosity of the new inhabitants of the new world, if there be any”. The cycle of eternally returning humanoids was unwinding.

In line with this, the French encyclopedist Baron d’Holbach ridiculed the “conjecture that other planets, like our own, are inhabited by beings resembling ourselves”. He noted that precisely this dogma – and the related belief that the cosmos is inherently full of moral value – had long obstructed appreciation that the human species could permanently “disappear” from existence. By 1830, the German philosopher F W J Schelling declared it utterly naive to go on presuming “that humanoid beings are found everywhere and are the ultimate end”.

And so, where Galileo had once spurned the idea of a dead world, the German astronomer Wilhelm Olbers proposed in 1802 that the Mars-Jupiter asteroid belt in fact constitutes the ruins of a shattered planet. Troubled by this, Godwin noted that this would mean that the creator had allowed part of “his creation” to become irremediably “unoccupied”.

But scientists were soon computing the precise explosive force needed to crack a planet – assigning cold numbers where moral intuitions once prevailed. Olbers calculated a precise timeframe within which to expect such an event befalling Earth. Poets began writing of “bursten worlds”.

The cosmic fragility of life was becoming undeniable. If Earth happened to drift away from the sun, one 1780s Parisian diarist imagined that interstellar coldness would “annihilate the human race, and the earth rambling in the void space, would exhibit a barren, depopulated aspect”. Soon after, the Italian pessimist Giacomo Leopardi envisioned the same scenario. He said that, shorn of the sun’s radiance, humanity would “all die in the dark, frozen like pieces of rock crystal”.

The inorganic world Galileo had dismissed was now a chilling possibility. Life, finally, had become cosmically delicate. Ironically, this appreciation came not from scouring the skies above but from probing the ground below. Early geologists, in the late 1700s, realised that Earth has its own history and that organic life has not always been part of it.

Biology hasn’t even been a permanent fixture here on Earth – why should it be one elsewhere? Coupled with growing scientific proof that many species had previously become extinct, this slowly transformed our view of the cosmological position of life as the 19th century dawned.

Seeing death in the stars

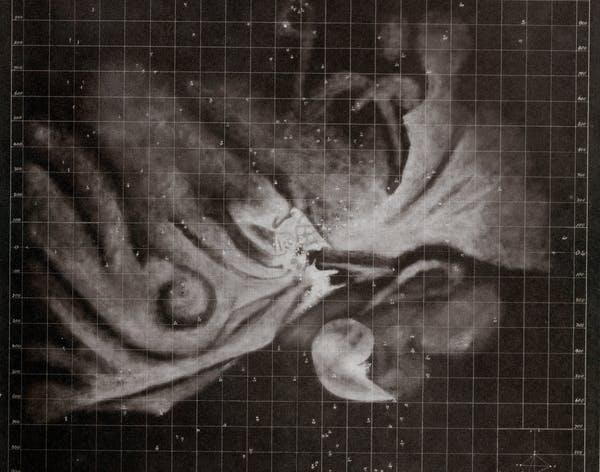

And so, where people like Diderot looked up into the cosmos in the 1750s and saw a teeming Petri dish of humanoids, writers such as Thomas De Quincey were, by 1854, gazing upon the Orion nebula and reporting that they saw only a gigantic inorganic “skull” and its light-year-long rictus grin.

The astronomer William Herschel had, already in 1814, realised that when looking out into the galaxy one is looking into a “kind of chronometer”. Fermi would spell it out a century after De Quincey, but people were already intuiting the basic notion: looking out into dead space, we may just be looking into our own future.

People were becoming aware that the appearance of intelligent activity on Earth should not be taken for granted. They began to see that it is something distinct – something that stands out against the silent depths of space.

Only by realising that what we consider valuable is not the cosmological baseline did we come to grasp that such values are not necessarily part of the natural world. Realising this was also realising that they are entirely our own responsibility. And this, in turn, summoned us to the modern projects of prediction, preemption and strategising. It is how we came to care about our future.

As soon as people started discussing human extinction, they also suggested possible preventative measures, an activity Bostrom now refers to as “macrostrategy”. As early as the 1720s, the French diplomat Benoît de Maillet was suggesting gigantic feats of geoengineering that could be leveraged to buffer against climate collapse.

Will technology save us?

It wasn’t long before authors began conjuring up highly technologically advanced futures aimed at protecting against existential threat. The eccentric Russian futurologist Vladimir Odoevsky, writing in the 1830s and 1840s, imagined humanity engineering the global climate and installing gigantic machines to “repulse” comets and other threats, for example.

Yet Odoevsky was also keenly aware that with self-responsibility comes risk: the risk of abortive failure. Accordingly, he was the very first author to propose the possibility that humanity might destroy itself with its own technology.

Acknowledging this possibility, however, was not an invitation to despair, and it still is not. It simply reflects the fact that, ever since we realised that the universe is not teeming with humans, we have come to understand that the fate of humanity lies in our hands.

We may yet prove unfit for this task, but – then as now – we cannot rest assured believing that humans, or something like us, will inevitably reappear – here or elsewhere.

Beginning in the late 1700s, appreciation of this has snowballed into our ongoing tendency to be swept up by concern for the deep future. Current initiatives, such as Bostrom’s Future of Humanity Institute, can be seen as emerging from this broad and edifying historical sweep.

From ongoing demands for climate justice to dreams of space colonisation, all are continuations and offshoots of a tenacious task that we first began to set for ourselves two centuries ago during the Enlightenment, when we first realised that, in an otherwise silent universe, we are responsible for the entire fate of human value.

It may be solemn, but becoming concerned for humanity’s extinction is nothing other than realising one’s obligation to strive for unceasing self-betterment. Indeed, ever since the Enlightenment, we have progressively realised that we must think and act ever better because, should we not, we may never think or act again. And that seems – to me at least – like a very rational end of the world.

the University of Oxford. This article was first published on The Conversation.
