From coronavirus to the climate: What it means to be a scientist today

Scientists haven’t changed much in the past 300 years, explains Astronomer Royal Martin Rees. The challenges might be different but one thing remains the same: they must work together to solve them

If we could time travel back to the 1660s – to the earliest days of the Royal Society – we would find Christopher Wren, Robert Hooke, Samuel Pepys and other “ingenious and curious gentlemen” (as they described themselves) meeting regularly. Their motto was to accept nothing on authority. They did experiments; they peered through newly invented telescopes and microscopes; they dissected weird animals. Their experiments were sometimes gruesome – one involved a blood transfusion from a sheep to a man. Amazingly the man survived.

But, as well as indulging their curiosity, these pioneers were immersed in the practical agenda of their era: improving navigation, exploring the New World, and rebuilding London after the plague and the Great Fire. They were inspired by the goals spelled out by Francis Bacon: to be “merchants of light” and to aid “the relief of man’s estate”. Coming back to the present day, we are still inspired by the same ideals – but health and safety rules make our present-day meetings a bit duller.

Science has always crossed national boundaries. The Royal Society, in its quaint idiom, aimed to promote “commerce in all parts of the world with the most curious and philosophical persons to be found”. Humphry Davy (who invented the Davy lamp and experimented with what he called “laughing gas” – nitrous oxide) travelled freely in France during the Napoleonic wars; Soviet scientists retained contact with their western counterparts throughout the Cold War. Any leading laboratory, whether it’s run by a university or by a multinational company, contains a similarly broad mix of nationalities wherever it is located. And women scientists are now able to carry out research on equal terms with men.

Science is a truly global culture, cutting across nationality, gender and faith. In the coming decades, the world’s intellectual and commercial centre of gravity will move to Asia, as we see the end of four centuries of North Atlantic hegemony. But we’re not engaged in a zero-sum game. We should welcome an expanded and more networked world, and hope that other countries follow the example of Singapore, South Korea and, of course, India and China.

What are scientists like? They don’t fall into a single mould. Consider, for instance, Newton and Darwin, the most iconic figures in this nation’s scientific history. Newton’s mental powers were really “off the scale”; his concentration was as exceptional as his intellect. When asked how he cracked such deep problems, he said: “By thinking on them continually.” He was solitary and reclusive when young; vain and vindictive in his later years. Darwin, by contrast, was an agreeable and sympathetic personality, and modest in his self-assessment. “I have a fair share of invention,” he wrote in his autobiography, “and of common sense or judgment, such as every fairly successful lawyer or doctor must have, but not, I believe, in any higher degree.”

And we see a similar spread in personalities today – there are micro-Newtons and micro-Darwins. Scientists are widely believed to think in a special way – to follow what’s called the “scientific method”. This belief should be downplayed. It would be truer to say that scientists follow the same rational style of reasoning as (for instance) lawyers or detectives in categorising phenomena, forming hypotheses and testing evidence.

A related (and indeed damaging) misperception is the mindset that there is something especially “elite” about the quality of their thought. “Academic ability” is one facet of the far wider concept of intellectual ability – possessed in equal measure by the best journalists, lawyers, engineers and politicians. Indeed the great ecologist EO Wilson avers that to be effective in some scientific fields it’s best not to be too bright.

He’s not underestimating the insights and eureka moments that punctuate (albeit rarely) scientists’ working lives. But, as the world expert on tens of thousands of species of ants, Wilson’s research has involved decades of hard slog: armchair theorising is not enough. So, there is a risk of boredom. And he is indeed right that those with short attention spans – with “grasshopper minds” – may find happier (albeit less worthwhile) employment as “millisecond traders” on Wall Street, or the like.

And there’s no justification for snobbery of “pure” over “applied” work. Harnessing a scientific concept for practical goals can be a greater challenge than the initial discovery. A favourite cartoon of my engineering friends shows two beavers looking up at a vast hydroelectric dam. One beaver says to the other: “I didn’t actually build it, but it’s based on my idea.” And I like to remind my theorist colleagues that the Swedish engineer Gideon Sundback, who invented the zipper, made a bigger intellectual leap than most of us ever will.

Odd though it may seem, it’s the most familiar questions that sometimes baffle us most, whereas some of the best-understood phenomena are far away in the cosmos. Astronomers have detected ripples in space from two black holes crashing together a billion light-years away – they can describe that amazingly exotic and remote event in some detail. In contrast, experts are still befuddled about everyday things that we all care about, diet and childcare for instance. When I was young, milk and eggs were good; a decade later we were warned off them because of cholesterol – but today they’re OK again.

These examples alone show that science has an open frontier. And also that the “glamorous” frontiers of science – the very small (particle physics) and the very large (the cosmos) – are far less challenging than the very complex. Human beings are the most complicated known things in the universe – the smallest insect is more complex than an atom or a star, and presents deeper mysteries.

Scientists tend to be severe critics of other people’s work. Those who overturn a consensus – or who contribute something unexpected and original – garner the greatest esteem. But scientists should be equally critical of their own work: they must not become too enamoured of “pet” theories, or be influenced by wishful thinking. Many of us find that very hard. Someone who has invested years of their life in a project is bound to be strongly committed to its importance, to the extent that it’s a traumatic wrench if the whole effort comes to nought.

But initially tentative ideas only firm up after intense scrutiny and criticism – for instance, the link between smoking and lung cancer, or between HIV and Aids. Scrutiny and criticism is also how seductive theories get destroyed by harsh facts. The great historian Robert Merton described science as “organised scepticism”.

When rival theories fight it out, only one winner is left standing (or maybe none). A crucial piece of evidence can clinch the case. That happened in 1965 for the big bang cosmology, when weak microwaves were discovered that had no plausible interpretation other than as a “fossil” of a hot, dense “beginning”. It happened also when the discovery of “seafloor spreading”, again in the 1960s, converted almost all geophysicists to a belief in continental drift. In other cases, an idea gains only a gradual ascendancy: alternative views get marginalised until their leading proponents die off. In general, the more remarkable a claim is – the more intrinsically unlikely, the more incompatible with a package of well-corroborated ideas – the more sceptical and less credulous it’s appropriate to be. As Carl Sagan said: “Extraordinary claims demand extraordinary evidence.”

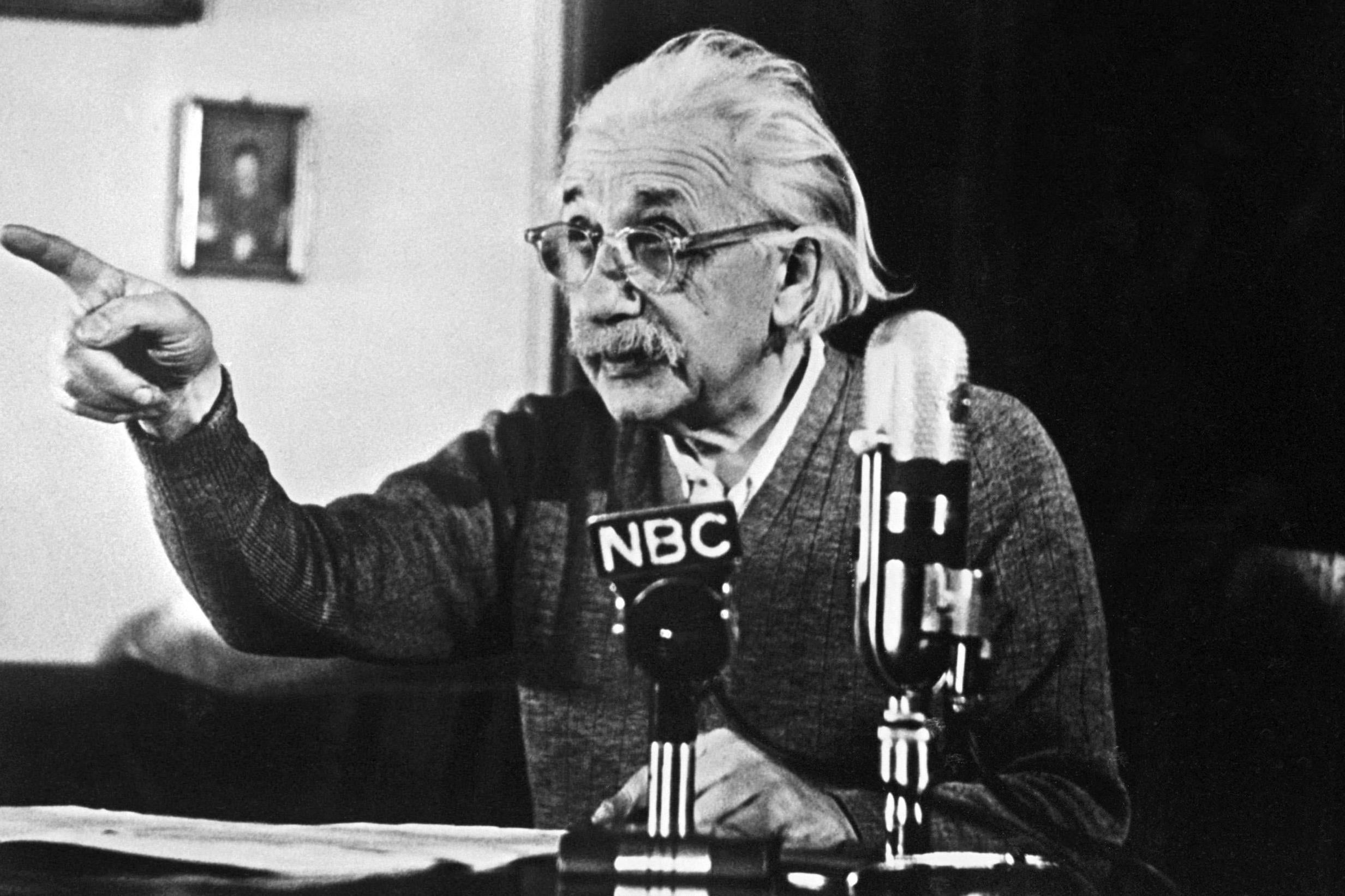

The path towards a consensual scientific understanding can be winding, with many blind alleys explored along the way. Occasionally a maverick is vindicated. We all enjoy seeing this happen – but such instances are rare, and rarer than the popular press would have us believe. Sometimes there are “scientific revolutions”, when a prior consensus is overturned; but most advances transcend and generalise the concepts that went before, rather than contradicting them. Einstein didn’t “overthrow” Newton. He transcended Newton, offering a new perspective with broader scope and deeper insights into space, time and gravity.

What about ideas “beyond the fringe”? As an astronomer, I haven’t found it fruitful to engage in dialogue with astrologers or creationists. We don’t share the same methods, nor play by the same evidence-based rules. We shouldn’t let a craving for certainty – for the easy answers that science can seldom provide – drive us towards the illusory comfort and reassurance that these pseudo-sciences appear to offer.

But of course we should have an open mind on fascinating questions where evidence is still lacking.

Science, culture and education

CP Snow’s 1959 lecture on the “Two Cultures”, which some still quote more than 60 years later, bemoaned the failure of scholars in the humanities to appreciate the “creativity” and imagination that the practice of science involves. But it can’t be denied that there are differences. An artist’s work might be individual and distinctive, but it generally doesn’t last. By contrast, even a journeyman scientist adds a few durable bricks to the corpus of “public knowledge”. Science is a progressive enterprise; our contributions – the “bricks” we’ve added – merge into the structure and lose their individuality. If A didn’t discover something, B would, usually not much later; indeed there are many cases of near-simultaneous discovery.

Here there is indeed a contrast with the arts. As the great immunologist Peter Medawar remarked in an essay, when Wagner diverted his energies for 10 years in the middle of the Ring cycle, to compose Meistersinger and Tristan, the composer wasn’t worried that someone would scoop him on Götterdämmerung.

Einstein made a greater and more distinctive imprint on 20th-century science than any other individual. But had he never existed, all his insights would have been revealed by now, though probably by several people rather than by one great mind.

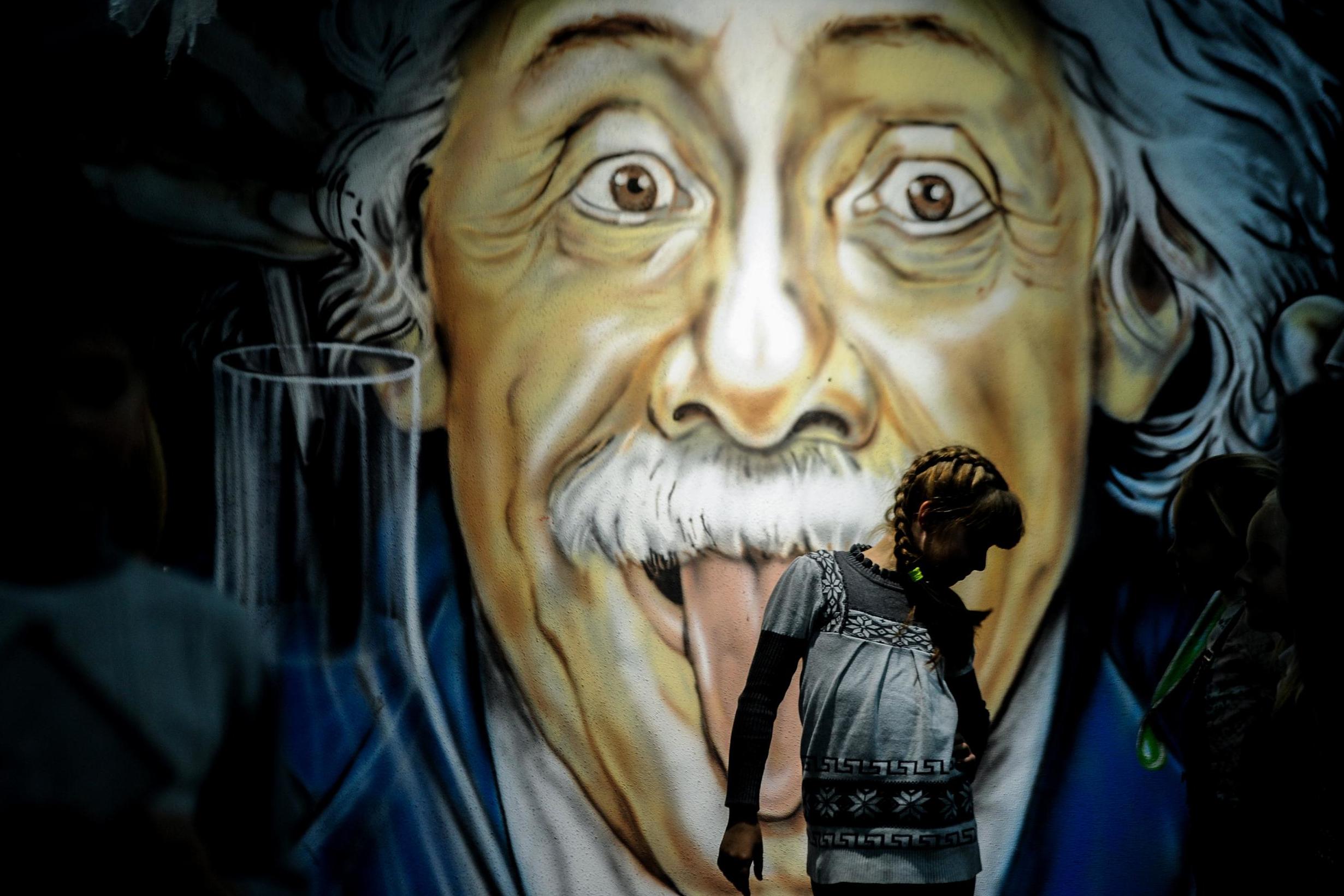

Einstein’s fame extends far beyond science. He was one of the few who really did achieve public celebrity. His image as the benign and unkempt sage became as much an icon of creative genius as Beethoven. His impact on general culture, though, has sometimes led to confusion about what his discoveries actually mean. In some ways it’s a pity that he called his theory “relativity”; its essence is that the local laws of physics are the same in different frames of reference. “Theory of invariance” might have been an apter choice, and would have staunched the misleading analogies with relativism in the humanities and social sciences. But in terms of cultural fallout, he’s fared no worse than others. Heisenberg’s uncertainty principle – a mathematically precise concept, the keystone of quantum mechanics – has been hijacked by adherents of oriental mysticism. Darwin has likewise suffered tendentious distortions, especially in regard to racial differences and eugenics – and claims that Darwinism offers a basis for morality.

Science should surely feature strongly in everyone’s culture – it’s not just for potential scientists. There are two reasons. First, it’s a real intellectual deprivation not to understand the natural world around us. And to be blind to the marvellous vision offered by Darwinism and by modern cosmology – the chain of emergent complexity leading from a “big bang” to stars, planets, biospheres and human brains able to ponder the wonder and the mystery of it all. To marvel at the intricate web of life on which we all depend – and to understand its vulnerabilities.

Second, we live in a world where more and more of the decisions that governments need to make involve science. Obviously Covid-19 is at the forefront of our minds today. But policies on health, energy, climate and the environment all have a scientific dimension. However, they have economic, social and ethical aspects as well. And on those aspects, scientists speak only as citizens. And all citizens should be involved in democratic choices.

Yet if there’s to be a proper debate, rising above mere sloganeering, everyone needs enough of a “feel” for science to avoid becoming bamboozled by propaganda and bad statistics. The need for proper debate will become more acute in the future, as the pressures on the environment and from misdirected technology get more diverse and threatening.

Incidentally, we scientists habitually bemoan the meagre public grasp of our subject – and, of course, unless citizens have some understanding, policy debates won’t get beyond tabloid slogans. But maybe we protest too much. On the contrary, we should, perhaps, be gratified and surprised that there’s wide interest in such remote topics as dinosaurs, the Large Hadron Collider in Geneva, or alien life. It is indeed sad if some citizens can’t distinguish a proton from a protein; but it’s equally sad if they are ignorant of their nation’s history, or are unable to find Korea or Syria on a map.

School education

Young children have an intrinsic curiosity and “sense of wonder”. That’s why it’s a mistake to think teachers have to make science “relevant” to get their pupils’ interest. What fascinates children most is dinosaurs and space – both blazingly irrelevant to their everyday lives. What’s sad is that by the secondary school stage young people have lost these early enthusiasms without having segued from them to more practical topics.

There’s no gainsaying that technology – computers and the web especially – offers huge benefits to today’s young generation, and looms large in their lives.

But I think, paradoxically, that our high-tech environment may actually be an impediment to sustaining youthful enthusiasm for science. People as ancient as me had one advantage. When we were young, you could take apart a clock, a radio set, or motorbike, figure out how it worked, and then reassemble it. And that’s how many of us got hooked on science or engineering.

Going back further, the young Newton made model windmills and clocks – the high-tech artefacts of his time. Darwin collected fossils and beetles. Einstein was fascinated by the electric motors in his father’s factory.

But it’s different today. The gadgets that now pervade our lives, smartphones and suchlike, are baffling “black boxes” – pure magic to most people. Even if you take them apart you’ll find few clues to their arcane miniaturised mechanisms. And you certainly can’t put them together again. So the extreme sophistication of modern technology – wonderful though its benefits are – is, ironically, an impediment to engaging young people with the basics of learning how things work.

And, to reiterate, science education isn’t just for those who will use it professionally. But we do need experts and specialists: if the UK, and indeed Europe, is to sustain economic competitiveness, enough of its young people need to attain professional-level expertise – as millions now do each year in the Far East. A few, the nerdish element, will take this route, come what may – I’m a nerd myself. But a country can’t survive on just this minority of “obsessives”.

The sciences must attract a share of those who are ambitious and have flexible talent – those who have a choice of career paths and who are mindful that financial careers offer Himalayan salaries, if no longer such high esteem. It’s crucial that the brightest young people – savvy about trends and anxiously choosing a career – should perceive the UK as a place where cutting-edge science and engineering can be done. They need a sustained positive signal that the UK is staying in the premier league.

And that, of course, includes women. In astronomy – the science I know best – there was nothing like a “level playing field” for women until the end of the 20th century. The Royal Society didn’t open its doors to women until 1945. There are famous historical cases of women being under-recognised. William Herschel, who mapped the Milky Way and discovered Uranus in the 18th century, had a lot of help from his sister Caroline. A century later William Huggins, who discovered spectroscopically that stars contained the same chemical elements as the Earth (not some mysterious “fifth essence”) and became president of the Royal Society in his old age, was assisted for decades by his wife, Margaret.

In the 1920s Cecilia Payne wrote one of the most important ever PhD theses in astronomy, showing that the sun and stars were mainly made of hydrogen. Her work was received with undue scepticism, but she eventually became the first female full professor at Harvard (in any subject). And, nearer our own day, I knew Geoffrey and Margaret Burbidge – two eminent astronomers. He was a theorist and she was an observer. But in the 1950s women weren’t allowed on the big Californian telescopes so the time had to be allocated to Geoff, with Margaret as his assistant. But she went on to a wonderful career and died, admired by all, six months ago aged 100. And I’m glad to say that among the younger generation the proportion of women is higher in astronomy than in the rest of the physical sciences.

Advice to would-be scientists

When embarking on research you should pick a topic to suit your personality, skills and tastes (for fieldwork? For computer modelling? For high-precision experiments? For handling huge data sets? And so forth). Moreover, it’s especially gratifying to enter a field where things are advancing fast: where you have access to novel techniques, more powerful computers, or bigger data sets – so that the experience of the older generation is at a deep discount. But, of course, there is no need to stick with the same field of science for your whole career – nor indeed to spend all your career as a scientist. The typical field advances through surges, interspersed by periods of relative stagnation. And those who shift their focus mid-career often bring a new perspective – the most vibrant fields often cut across traditional disciplinary boundaries.

And another thing: only geniuses (or cranks) head straight for the grandest and most fundamental problems. You should multiply the importance of the problem by the probability that you’ll solve it, and maximise that product. Aspiring scientists shouldn’t all swarm into, for instance, the unification of cosmos and quantum, even though it’s plainly one of the intellectual peaks we aspire to reach; they should realise that the great challenges in cancer research and in brain science need to be tackled in a piecemeal fashion, rather than head-on.

But what happens when you get old? It’s conventional wisdom that scientists (especially theorists) don’t improve with age, that they “burn out”. The physicist Wolfgang Pauli had a famous put-down for scientists past 30: “still so young, and already so unknown”. But I hope it’s not just wishful thinking – I’m an ageing scientist myself – to be less fatalistic. Many elderly scientists continue to remain productive. But despite some “late-flowering” exceptions, there are few whose last works are their greatest – though that’s the case for many artists, painters and composers. The reason for this contrast, I think, is that artists, though influenced in their youth (like scientists) by the then-prevailing culture and style, can thereafter improve and deepen solely through “internal development”. Scientists, in contrast, need continually to absorb new concepts and new techniques if they want to stay at the frontier – and it’s that flexibility that we lose as we get older. We “seniors” can probably at best aspire to be on a plateau. But this can be a high plateau – and productivity can continue into old age. One thinks, for instance, of John Goodenough, co-inventor of the lithium-ion battery, who is still working at age 97 and last year became the oldest ever winner of a Nobel Prize. We can continue to do what we are competent at, accepting that there may be some new techniques that the young can assimilate more easily than the old.

And older scientists can offer interdisciplinary and societal perspectives. For instance, Freeman Dyson was a world-leading theoretical physicist in his twenties, but right until his recent death aged 96 still offered contrarian stimulus via a flow of articles.

But there’s a pathway that should be avoided, though it’s been followed by some of the greatest scientists: an unwise and overconfident diversification into other fields. Those who follow this route are still, in their own eyes, “doing science” – they want to understand the world and the cosmos – but they no longer get satisfaction from researching in the traditional piecemeal way: they over-reach themselves, sometimes to the embarrassment of their admirers. Fred Hoyle, for 25 years the world’s most productive astrophysicist, in later years “rubbished” Darwinism and claimed that influenza viruses were brought to us via comets. And Bill Shockley, inventor of the transistor, developed in later life theories of racial differences that carried little resonance.

Darwin was probably the last scientist who could present great novel insights in a book accessible to general readers – and indeed in a fine work of literature. The gulf between what is written for specialists and what is accessible to the average reader has widened. Literally millions of scientific papers are published, worldwide, each year. This vast primary literature needs to be sifted and synthesised, otherwise not even the specialists can keep up. Even the refereeing role of journals may one day be trumped by more informal systems of quality rating. Blogs and wikis will play a bigger role in critiquing and codifying science. The legacies of Gutenberg and Oldenburg are not optimal in the age of Zuckerberg.

Moreover, professional scientists are depressingly “lay” outside their specialisms – my own knowledge of modern biology, such as it is, comes largely from excellent “popular” books and journalism. Science writers and broadcasters do an important job, and a difficult one. I know how hard it is to explain in clear language even something I think I understand well. But journalists have the far greater challenge of assimilating and presenting topics quite new to them, often to a tight deadline; some are required to speak at short notice, without hesitation, deviation or repetition, before a microphone or TV camera.

In Britain, there is a strong tradition of science journalism. But science generally only earns a newspaper headline, or a place on TV bulletins, as background to a natural disaster, or health scare, rather than as a story in its own right. Scientists shouldn’t complain about this any more than novelists or composers would complain that their new works don’t make the news bulletins. Indeed, coverage restricted to “newsworthy” items – newly announced results that carry a crisp and easily summarised message – distorts and obscures the way science normally develops.

Among the sciences, astronomy and evolutionary biology are appealing not only because both subjects involve beautiful images and fascinating ideas. Their allure also derives from a certain positive and non-threatening public image. In contrast, genetics and nuclear physics may be equally interesting, but the public is ambivalent about them because they have downsides as well as benefits.

I would derive less satisfaction from my astronomical research if I could discuss it only with professional colleagues. I enjoy sharing ideas, and the mystery and the wonder of the universe, with non-specialists. Moreover, even when we do it badly, attempts at this kind of communication are salutary for scientists themselves, helping us to see our work in perspective. As I mentioned earlier, researchers don’t usually shoot directly for a grand goal: they focus on timely, bite-sized problems because that’s the methodology that pays off. But it does carry an occupational risk – we may forget that we’re wearing blinkers and that our piecemeal efforts are only worthwhile in so far as they’re steps towards some fundamental question.

In 1964, Arno Penzias and Robert Wilson, radio engineers at the Bell Telephone Laboratories in the US, made, quite unexpectedly, one of the great discoveries of the 20th century: they detected weak microwaves, filling all of space, which are actually a relic of the Big Bang. But Wilson afterwards remarked that he was so focused on the technicalities that he didn’t himself appreciate the full import of what he’d done until he read a “popular” description in The New York Times, where the background noise in his radio antenna was described as the “afterglow of creation”.

Misperceptions about Darwin or dinosaurs are an intellectual loss, but no more. In the medical arena, however, they could be a matter of life and death. Hope can be cruelly raised by claims of miracle cures; exaggerated scares can distort healthcare choices, as happened over the MMR vaccine. When reporting a particular viewpoint, journalists should clarify whether it is widely supported, or whether it is contested by 99 per cent of specialists as was the case in the claims about the MMR vaccine. Noisy controversy need not signify evenly balanced arguments. The best scientific journalists and bloggers are plugged into an extensive network that should enable them to calibrate the quality of novel claims and the reliability of sources.

Whenever their work has societal impact, scientists – some at least – need to get out of the lab and engage with the media via campaigning groups, via blogging and journalism, or via NGO or political activity – all of which can catalyse a better-informed debate.

You would be a poor parent if you didn’t care about what happened to your children in adulthood, even though you might have little control over them. Likewise, no scientists should be indifferent to the fruits of their ideas – their creations. They should try to foster benign spinoffs, commercial or otherwise. They should resist, so far as they can, dubious or threatening applications of their work, and alert the public and politicians to perceived dangers.

We have some fine exemplars from the past. Take the atomic scientists who developed the first nuclear weapons during the Second World War. Fate had assigned them a pivotal role in history. Though many of them returned with relief to peacetime academic pursuits, the ivory tower didn’t serve as a sanctuary. They continued not just as academics but as engaged citizens, promoting efforts to control the power they had helped unleash – paving the way for the arms control treaties of the 1960s and ’70s.

Another example comes from the UK, where a dialogue between scientists and parliamentarians – initiated especially by the philosopher Mary Warnock in the 1980s – produced a widely admired legal framework regulating the use of embryos. Similar dialogue led to agreed guidelines for stem cell research. But in the UK and Europe, there have been failures too. For instance, the GM crop debate was left too late – to a time when opinion was already polarised between eco-campaigners on the one side and commercial interests on the other. This has led to an excessive caution in Europe – despite evidence that more than 300 million Americans have eaten GM crops for decades, without manifest harm.

Today, genetics and robotics are advancing apace, confronting us with a range of new contexts where regulation is needed, on ethical or prudential grounds. These are rightly prompting wide public discussion, but professionals have a special obligation to engage. Universities can use their staff’s expertise, and their convening power, to assess which scary scenarios – from eco-threats to misapplied genetics or cybertech – can be dismissed as science fiction, and which deserve more serious attention.

Scientists who have worked in government often end up frustrated. It’s hard to get politicians to prioritise long-term issues – or measures that will mainly benefit people in remote parts of the world – when there’s a pressing agenda at home. Even the best politicians focus mainly on more urgent and parochial matters – and on getting re-elected.

Scientists can often gain more leverage indirectly. Carl Sagan, for instance, was a pre-eminent exemplar of the concerned scientist, and had immense influence through his writings, broadcasts, lectures and campaigns. And this was before the age of social media and tweets. He would have been a leader in our contemporary age of campaigns and marches, electrifying crowds through his passion and his eloquence.

David Attenborough is today’s supreme exemplar in raising awareness of environmental issues. Michael Gove wouldn’t have been motivated to introduce legislation against non-reusable drinking straws had this move not gained popular support via the Blue Planet II images of an albatross returning to its nest, and coughing up not the longed-for nourishment, but bits of plastic. That’s as iconic an image as the polar bear on the melting ice has been for climate change.

It’s imperative to multiply such examples. We need a change in priorities and perspective – and soon – if the world’s people are to benefit from our present knowledge and the further breakthroughs that this century will bring. We need urgently to apply new technologies optimally, and avoid their nightmarish downsides: to stem the risk of environmental degradation; to develop clean energy, and sustainable agriculture; and to ensure that we don’t in 2050 still have a world where billions live in poverty and the benefits of globalisation aren’t fairly shared.

To retain politicians’ attention, these long-term global issues must be prominent in their inboxes, and in the press. It’s encouraging to witness more activists among the young – unsurprising, as they can expect to live to the end of the century. Their commitment gives grounds for hope. Let’s hope that many of them become scientists – and true global citizens.

Martin Rees is a member of the House of Lords and former president of the Royal Society
