Inside Apple’s top secret testing facilities where iPhone defences are forged in temperatures of -40C

UK exclusive: In a never-before-seen look at its privacy work, Andrew Griffin assesses criticism that the tech giant’s data security techniques limit the benefits of its products to the rich

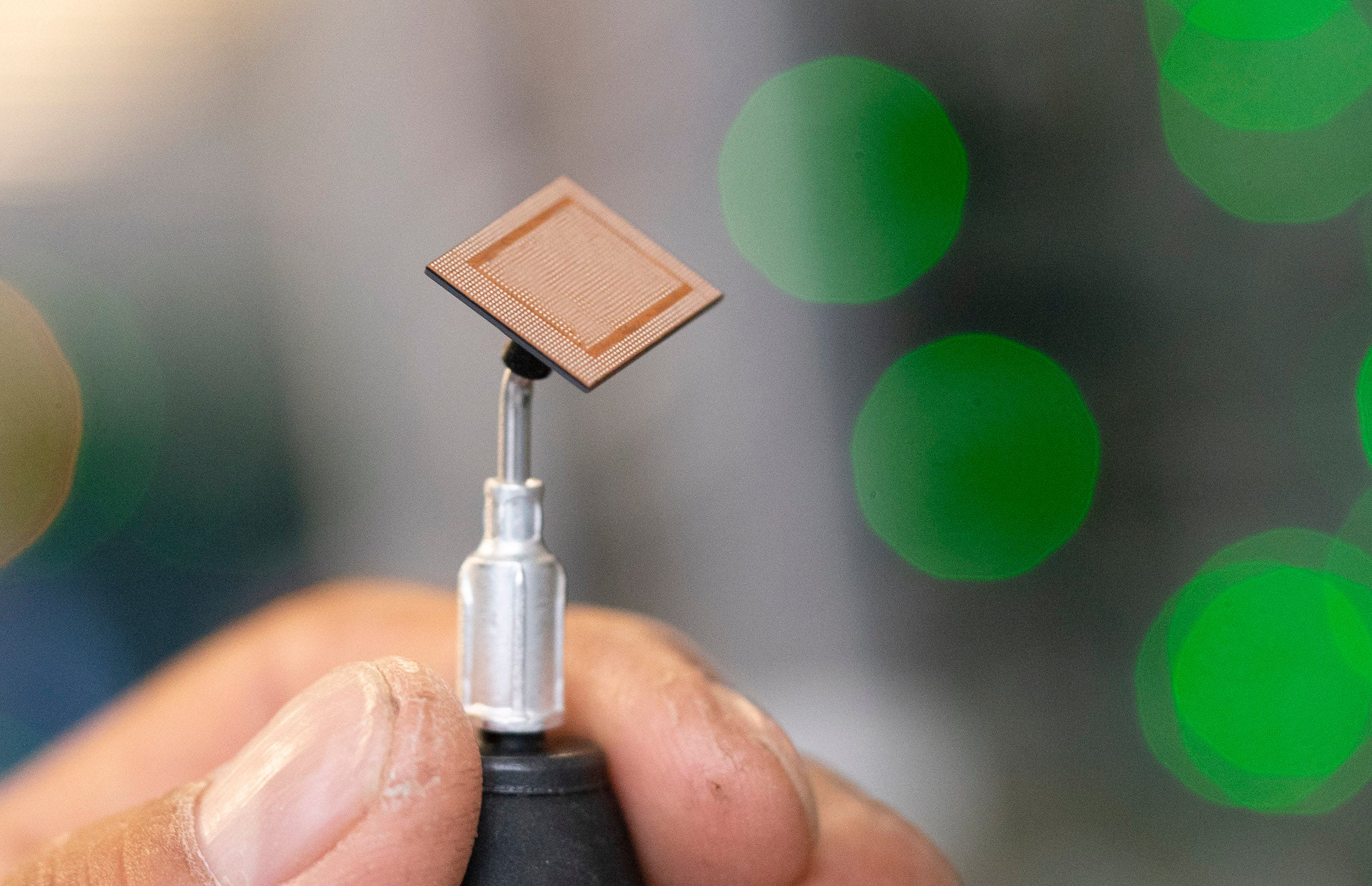

In a huge room somewhere near Apple’s glistening new campus, highly advanced machines are heating, cooling, pushing, shocking and otherwise abusing chips. Those chips – the silicon that will power the iPhones and other Apple products of the future – are being put through the most gruelling and intense work of their young and secretive lives. Throughout the room are hundreds of circuit boards with the chips wired into them, and those boards sit inside hundreds of boxes where the punishing tests take place.

Those chips are here to see whether they can withstand whatever assault anyone might try on them once they make their way out into the world. If they succeed here, they should succeed anywhere; that’s important, because if they failed out in the world, so would Apple. These chips are the great line of defence in a battle that Apple never stops fighting as it tries to keep users’ data private.

It is a battle fought on many fronts: against the governments that want to read users’ personal data; against the hackers who try to break into devices on their behalf; against the other companies who have attacked Apple’s stringent privacy policies. It has meant being taken to task both for refusing to give up information the US government says could aid the fight against terrorism, and for choosing to keep operating in China despite laws that force it to store private data on systems that give the country’s government nearly unlimited access.

Critics have argued that Apple is overly concerned with privacy in a way that limits features. They say its privacy testing is only made possible by its colossal wealth, cash generated by the premium the firm charges for its products, in effect denying the benefits to those who cannot afford to pay.

But the company says that such a fight is necessary, arguing that privacy is a human right that must be upheld even in the face of intense criticism and difficulties.

Privacy, for the company, is both a technical and a policy problem. Apple argues that protecting data privacy is central to what it does, and that it builds products that embody that principle.

This starts at the very beginning of the manufacturing process. Apple employees talk often about the principle of “privacy by design”: that keeping data safe must be considered at every step of the engineering process and encoded into each part of it. Just as important is the idea of “privacy by default”, meaning Apple always assumes data shouldn’t be gathered unless it really and truly needs to be.

“I can tell you that privacy considerations are at the beginning of the process, not the end,” says Craig Federighi, Apple’s senior vice president of software engineering. “When we talk about building the product, among the first questions that come out is: how are we going to manage this customer data?” Federighi, sitting inside the company’s spectacular new Apple Park campus, is talking to The Independent about his firm’s commitment to privacy, explaining why it sits at the core of the company’s values even though many customers regard it with indifference or even downright disdain.

Apple’s principles on privacy are simple: it doesn’t want to know anything about you that it doesn’t need to. It has, Federighi says, no desire to gather data to generate an advertising profile about its users.

“We have no interest in learning all about you. As a company, we don’t want to learn all about you, we think your device should personalise itself to you,” he says. “But that’s in your control – that’s not about Apple learning about you, we have no incentive to do it.

“And morally, we have no desire to do it. And that’s fundamentally a different position than I think many, many other companies are in.”

The chips being buffeted around inside those strange boxes are just one part of that mission. Inside them sits one of Apple’s proudest achievements: the “secure enclave”.

That enclave acts as something of an inner sanctum, the part of the phone that stores its most sensitive information and is fitted out with all the security required to do so.

Introduced with the iPhone 5s and improved every year since, the secure enclave is functionally a separate part of the phone, with strict restrictions on what can access it and when. It holds important pieces of information, such as the keys that lock up the biometric data used to check your fingerprint when you place it on the sensor, and the ones that lock messages so they can only be read by the people sending or receiving them.

Those keys must be kept secure if the phone is to stay safe: biometric data ensures that what’s inside the phone can only be seen by its owner. Apple has had some scares about those protections being compromised, such as a disproven suggestion that its Face ID facial recognition technology could be fooled by mannequins, but security experts say its approach has held up.

“Biometrics aren’t perfect, as the people posting clever workarounds online to supposedly secure logins would attest,” says Chris Boyd, lead malware analyst at Malwarebytes. “However, there’s been no major security scare since the introduction of Apple’s secure enclave and the release of a secure enclave firmware decryption key for the iPhone 5S in 2017 was largely overblown.”

All this high-minded principle is hard to argue with. Who wants to share their information inadvertently? But, as Steve Jobs said, design is how it works: the principles only count if the product is actually safe in practice.

So the aim of the stress tests is to see whether the chips misbehave in extreme scenarios and, if they do, to make sure that happens in this lab rather than inside users’ phones. Any kind of misbehaviour could be fatal to a device.

It might seem unlikely that any normal phone would be subjected to this kind of beating, given how rarely its owner would take it somewhere that chills it to -40C or heats it to 110C. But the fear here is not of normal use. If the chips were found to be insecure under this kind of pressure, bad actors would immediately start putting phones through it, and all the data they store could be boiled out of them.

If such a fault were found after the phones had reached customers, there would be nothing Apple could do: unlike software, which can be updated, chips can’t be changed once they are in people’s hands. So Apple looks instead to find any possible dangers in this room, tweaking and fixing chips to ensure they can cope with anything thrown at them.

The chips arrive here early: the silicon sitting inside the boxes could be years from making it into users’ hands. (Notes indicate which chips they are, but small stickers have been placed over them to stop us reading them.)

Eventually, they will find their way into Apple’s shining new iPhones, Macs, Apple Watches and whatever other luxury computing devices the company brings to market in the future. The cost of those products has drawn criticism from Apple’s rivals, who suggest that privacy is what the premium pays for: Apple can boast about how little data it collects only because of the substantial prices its products command. That was the argument recently made by Google boss Sundar Pichai, in just one of a series of broadsides between tech companies about privacy.

“Privacy cannot be a luxury good offered only to people who can afford to buy premium products and services,” Pichai wrote in an op-ed in The New York Times. He didn’t name Apple, but he didn’t need to.

Pichai argued that the collection of data helps make technology affordable. Having a more lax approach to privacy helps keep the products made by almost all of the biggest technology companies – from Google to Instagram – free, at least at the point of use.

“I don’t buy into the luxury good dig,” says Federighi, giving the impression he was genuinely surprised by the public attack.

“It’s on the one hand gratifying that other companies in this space, over the last few months, seemed to be making a lot of positive noises about caring about privacy. I think it’s a deeper issue than what a couple of months and a couple of press releases would make. I think you’ve got to look fundamentally at company cultures and values and business model. And those don’t change overnight.

“But we certainly seek to both set a great example for the world to show what’s possible, to raise people’s expectations about what they should expect of products, whether they get them from us or from other people. And of course, we love, ultimately, to sell Apple products to everyone we possibly could – certainly not just a luxury.

“We think a great product experience is something everyone should have. So we aspire to develop those.”

A month or so earlier, another of Apple’s Silicon Valley neighbours took a swipe at the company. Facebook, mired in its own privacy scandals, announced that it wouldn’t store data in “countries that have a track record of violating human rights like privacy or freedom of expression”. Though it named no names, the decision was clearly aimed at Apple, which stores its Chinese users’ iCloud data in China.

Staying out of the country to keep data secure and private was “a tradeoff we’re willing to make”, boss Mark Zuckerberg said at the time. “I think it’s important for the future of the internet and privacy that our industry continues to hold firm against storing people’s data in places where it won’t be secure.”

Like many US companies, Facebook has long been blocked in China, where the law requires data to be stored locally and there are fewer restrictions on the state looking at people’s personal information. Apple, however, has opted to try to grow in the country, a policy that has seen it open a data centre there operated by a Chinese company.

Facebook’s former cybersecurity chief, Alex Stamos, called the statement from Zuckerberg “a massive shot across Tim Cook’s bow”. “Expect to hear a lot about iCloud and China every time Cook is sanctimonious,” he said at the time.

The decision to store data in China, made in March of last year, received intense criticism from privacy advocates, including Amnesty International, which called it a “privacy betrayal”. It pointed out that the decision would allow the Chinese state access to the most private parts of people’s digital lives.

“Tim Cook is not being upfront with Apple’s Chinese users when insisting that their private data will always be secure,” said Nicholas Bequelin, Amnesty’s East Asia spokesperson, at the time. “Apple’s pursuit of profits has left Chinese iCloud users facing huge new privacy risks.”

“Apple’s influential ‘1984’ ad challenged a dystopian future but in 2018 the company is now helping to create one. Tim Cook preaches the importance of privacy, but for Apple’s Chinese customers these commitments are meaningless. It is pure doublethink.”

Federighi says the location of stored data matters less when the amount of information collected is minimised. He says any data that is stored is done so in ways that stop people from prying into it.

“Step one, of course, is the extent that all of our data minimisation techniques, keeping data on device and protecting devices from external access – all of these things mean that that data isn’t in any cloud in the first place to be accessed by anyone,” he says. If the data is never collected, Apple argues, officials in China or anywhere else cannot abuse it.

What’s more, Federighi argues that because the data is encrypted, even if it were intercepted (even if someone were holding the disk drives that store it) it couldn’t be read. Only the sender and recipient of an iMessage can read it, for example, so the fact that messages pass through a server in China should be irrelevant if the security works. Anyone else should see only a garbled message that needs a special key to unlock.
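As a loose illustration of why intercepted data is useless without the key, the Python sketch below uses a one-time pad, the simplest encryption scheme there is: each byte of the message is XORed with a byte of a random key that is used only once, so without the key the result is indistinguishable from noise. It is a toy under stated assumptions, not Apple’s method. It assumes the sender and recipient already share a secret key at least as long as the message, whereas iMessage, according to Apple’s security documentation, relies on public-key cryptography so that no key has to be exchanged in advance. The function names are hypothetical.

```python
import secrets


def encrypt(message: str, key: bytes) -> bytes:
    """One-time pad: XOR each message byte with a byte of a random,
    single-use key. Without the key, the output looks like random noise."""
    data = message.encode("utf-8")
    if len(key) < len(data):
        raise ValueError("a one-time pad key must be at least as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))


def decrypt(ciphertext: bytes, key: bytes) -> str:
    """XOR is its own inverse, so applying the same key recovers the message."""
    if len(key) < len(ciphertext):
        raise ValueError("key is too short for this ciphertext")
    return bytes(c ^ k for c, k in zip(ciphertext, key)).decode("utf-8")


if __name__ == "__main__":
    message = "See you at 8"
    # Hypothetical: sender and recipient already share this key. Real
    # messaging systems agree on keys using public-key cryptography instead.
    key = secrets.token_bytes(len(message.encode("utf-8")))
    ciphertext = encrypt(message, key)
    print(ciphertext.hex())          # all a server in the middle would see
    print(decrypt(ciphertext, key))  # what the recipient, holding the key, sees
```

A server that relays or stores only the ciphertext learns nothing about the message beyond its length, wherever that server happens to sit; the security rests entirely on the key never leaving the two ends of the conversation.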

At home, Apple’s commitment to privacy has led it into disputes with the US government, as well as its more traditional competitors. Probably the most famous of those fallouts came after a terrorist attack in San Bernardino, California. The FBI, seeking to find information about the attacker, asked Apple to make a version of its software that would weaken protections and allow it access to his phone; Apple argued that it was not possible to weaken that security only in one given case, and refused.

The FBI eventually resolved the issue, reportedly unlocking the phone using software from an Israeli company. But the argument has continued. Apple has not changed its mind: whatever governments request, it insists that helping them access phones in that way would itself be a threat to national security.

Federighi points out that not all of the sensitive data on phones is purely personal. Some of it matters to the public at large.

“If I’m a worker at a power plant, I might have access to a system that has a very high consequence,” he says. “The protection and security of those devices is actually really critical to public safety.

“We know that there are plenty of highly motivated attackers who want to for profit, or do want to break into these valuable stores of information on our devices.”

Apple has repeatedly argued that creating a master key or back door that lets only governments into secure devices is simply not possible: any entry point that lets law enforcement in will inevitably be exploited by the criminals they are fighting, too. So protecting the owner of the phone as much as possible keeps data private and ensures that devices stay safe, he argues.

He remains optimistic that this argument will be resolved. “I think ultimately we hope that governments will come to embrace the idea that having secure and safe systems in the hands of everyone is the greater good,” he says.

Apple has also had to battle the idea that people simply don’t care about privacy. Users have repeatedly shown that they would rather receive features for free, even if it means giving up ownership of their data. Four of the top 10 apps in the App Store are made by Facebook, which has put that trade-off at the very centre of its business.

It has been easy to conclude that we are living in a post-privacy world. As the internet developed, information became more public, not less, and seemingly every new tech product thrived on giving users new ways of sharing a little more about themselves.

But in recent months that seems to have changed. It is becoming ever clearer to people that the privacy of information is central to a healthy society, argues Federighi.

“You know, I think people got a little fatalistic about, you know, privacy was dead?” he says. “I don’t believe it: I think we as people are realising that privacy is important for the functioning of good societies.

“We’ll be putting more and more energy as a society into this issue. And we’re proud to be working on it.”

Apple still has to contend with the fact that users have been lured into handing over so much data that doing so now seems largely unremarkable. Between government surveillance and private ad-tracking, users have come to believe that everything they do is probably being tracked by someone. Their response has mostly been apathy rather than terror.

That poses a problem for Apple, which is spending a huge amount of time and money protecting privacy. Federighi believes the company will be vindicated as complacency turns into concern.

“Some people care about it a lot,” he says. “And some people don’t think about it at all.”

If Apple continues to create products that don’t invade people’s privacy, it raises the bar, he says. Doing so undermines the idea that getting new features means giving up information.

Federighi continues: “I think to the extent we can set a positive example for what’s possible, we will raise people’s sense of expectations. You know, why does this app do this with my data? Apple doesn’t seem to need to do that. Why should this app do it?

“And I think we’re seeing more and more of that. So leading by example is certainly one element of what we’re trying to do. And we know it’s a long, long road, but we think, ultimately we will prevail, and we think it’s worthwhile in any case.”

In recent months, following a run of controversies, just about every technology company has started stressing privacy. Google does not release products without making clear how they protect the data they generate; even Facebook, which exists to share information, has claimed it is moving towards a privacy-first approach in an apparent attempt to limit the damage of its repeated data abuse scandals.

Privacy is in danger of becoming a marketing term. Like artificial intelligence and machine learning before it, it could become just another word that tech firms feel obliged to promise users they are thinking about, even if what it means in practice remains largely unknown.

There is scepticism around recent statements made by tech firms. Christopher Weatherhead, technology lead at Privacy International, which has repeatedly called on Apple and its competitors to do more about privacy, says: “A number of Silicon Valley companies are currently positioning themselves as offering a more privacy-focused future, but until the basics are tackled, it does for the time being appear to be marketing bluff.”

Federighi is confident that Apple will continue to work on privacy whether or not people are paying attention, and with little regard to how the word is used and abused. But he admits to being worried about what it might mean for the future.

“Whether or not we’re getting credit for it, or people are noticing the difference, we’re going to do it because we’re building these products, the products that we think should exist in the world,” he says. “I think it would be unfortunate if the public ultimately got misled and didn’t realise ... So I have a concern for the world in that sense. But it certainly isn’t going to affect what we do.”

If privacy is not a luxury, Apple’s critics say, then it is at least a compromise. Building products while knowing as little as possible about the people buying them is a trade-off that involves giving up on the best features, they argue.

Many of Google’s products, for instance, collect data not just for advertising but also to personalise apps; Google Maps can work out what kind of restaurant you might like to visit. Netflix hoovers up information about its users (its hugely popular interactive Black Mirror film Bandersnatch seemed in large part to be a data-gathering exercise) and then uses it to decide which shows to make and what to recommend.

Voice assistants provide the example often held up to demonstrate the ways that protecting privacy also means giving up on features. Google’s version draws on information from across the internet to improve itself, allowing it to learn both how to hear people better and to answer their questions more usefully when it does; Apple’s approach means that Siri doesn’t have quite so much data to play with. Critics argue that holds back its performance, making Siri worse at listening and talking.

Apple is insistent that lack of data is not holding its products back.

“I think we’re pretty proud that we are able to deliver the best experiences, we think in the industry without creating this false trade-off that to get a good experience, you need to give up your privacy,” says Federighi. “And so we challenge ourselves to do that. Sometimes that’s extra work, but that’s worth it. It’s a fun problem to solve.”

The company says that instead of hoovering up data relatively indiscriminately, and putting it into a dataset that allows for the sale of ads as well as the improvement of products, it can use alternative technologies to keep its products smart. Perhaps most unusual of all is its reliance on “differential privacy”, a statistical technique that lets it collect a vast trove of data without being able to tell what any individual contributed.

Take the difficult problem of adding new words to the autocorrect keyboard, so that new ways of speaking (the word “belfie”, for example) are reflected in the phone’s internal dictionary. Doing this while gathering vast amounts of data is relatively simple: just harvest everything everyone types, and once a word is used often enough, treat it as a genuine word rather than a mistake. But Apple doesn’t want to read what people are saying.

Instead, it relies on differential privacy. That takes the words people are adding to their dictionaries and fuzzes them up, making the data deliberately less accurate: mixed in with the genuine new words are a whole host of automatically generated inaccurate ones. If a trend is consistent enough, it will still show up in the data, but any individual word might be part of the “fuzz”, protecting the privacy of the people in the dataset. It is a complicated process. But in short it lets Apple learn about its users as a whole without learning anything about any individual one.
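To make the idea concrete, here is a minimal Python sketch of randomized response, a textbook local differential privacy mechanism. It is an illustration under stated assumptions rather than Apple’s actual algorithm, which Apple describes as using more elaborate techniques; the candidate words, the population of users and the privacy parameter epsilon are all hypothetical. Each phone reports either its true word or, with a known probability, a different candidate word chosen at random, so no single report can be trusted. Because the server knows exactly how much noise was injected, it can subtract it out in aggregate, estimate how many people really typed each word, and promote those that clear a usage threshold.

```python
import math
import random
from collections import Counter


def randomize(word, vocabulary, epsilon, rng):
    """Runs on the phone (k-ary randomized response).

    Reports the true word with probability p; otherwise reports one of the
    other candidate words, chosen uniformly at random. Any single report may
    therefore be part of the "fuzz"."""
    k = len(vocabulary)
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p:
        return word
    return rng.choice([w for w in vocabulary if w != word])


def estimate_counts(reports, vocabulary, epsilon):
    """Runs on the server: removes the known noise in aggregate.

    If f users truly typed a word, its expected observed count is
    n*q + f*(p - q), so f is estimated as (observed - n*q) / (p - q)."""
    n, k = len(reports), len(vocabulary)
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    q = 1 / (math.exp(epsilon) + k - 1)  # chance of reporting one specific wrong word
    observed = Counter(reports)
    return {w: (observed[w] - n * q) / (p - q) for w in vocabulary}


def promote(estimates, threshold):
    """Treat words whose estimated usage clears a threshold as genuine."""
    return sorted(w for w, count in estimates.items() if count >= threshold)


if __name__ == "__main__":
    rng = random.Random(0)
    vocabulary = ["belfie", "selfie", "shelfie", "welfie"]  # hypothetical candidates
    # Hypothetical population: what 20,000 users actually typed.
    truth = ["belfie"] * 12000 + ["selfie"] * 6000 + ["shelfie"] * 2000
    reports = [randomize(w, vocabulary, 1.0, rng) for w in truth]
    estimates = estimate_counts(reports, vocabulary, 1.0)
    for word, count in estimates.items():
        print(f"{word}: ~{count:.0f}")
    print(promote(estimates, threshold=4000))
```

Lowering epsilon makes each report noisier and so more private, at the cost of needing more users before a genuine trend stands out from the noise; that is the trade-off the technique is designed to manage.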

In other cases, Apple simply opts to take publicly available information, rather than relying on gathering up private data from the people who use its services.

Google might improve its image recognition tools by trawling the photos of people who use its service, feeding them into computers so that it gets better at recognising what is in them; Apple buys a catalogue of public photos, rather than taking people’s private ones. Federighi says the same thing has happened with voice recognition – the company can listen to audio that’s out in the world, like podcasts. It has also paid people to explore and annotate datasets so that people’s data remains private, not chewed up into training sets for anonymous artificial intelligence services.

Apple also works to ensure that its users and their devices are protected from other parties. The company has been developing a technology called “Intelligent Tracking Prevention”, built into its web browser, Safari. In recent years, advertising companies and other snooping organisations have devised ever newer ways of following people around the web; Apple has been trying to stay one step ahead, hiding its users from view as they browse the internet.

But hidden in all these details is a philosophical difference, too. In many instances, none of this even needs to be sent to Apple in the first place.

This all comes from the fact that Apple simply doesn’t want to know. “Fundamentally, we view the centralisation of personalised information as a threat, whether it’s in Apple’s hands or anyone else’s hands,” says Federighi. “We don’t think that security alone in the server world is an adequate protection for privacy over the long haul.

“And so the way to manage to ultimately defend user privacy is to make sure that you’ve never collected and centralised the data in the first place. And so in every place we possibly can, we build that into our architectures from the outset.”

Instead, the work is done using the powerful computers in your phone. Rather than uploading vast troves of data to server farms and letting staff sift through them, Apple says it would prefer to make the phones smarter and leave all of the data on them, meaning that neither Apple nor anyone else can look at that data, even if they wanted to.

“Last fall, we talked about a big special block in our chips that we put in our iPhones and our latest iPads called the Apple Neural Engine – it’s unbelievably powerful at doing AI inference,” says Federighi.

“And so we can take tasks that previously you would have had to do on big servers, and we can do them on device. And often when it comes to inference around personal information, your device is a perfect place to do that: you have a lot of that local context that should never go off your device, into some other company.”

Eventually, this could come to be seen as a feature in itself. The approach has advantages of its own – and so can improve performance whether or not people are thinking about what it means for privacy.

“I think ultimately the trend will be to move more and more to the device because you want intelligence both to be respecting your privacy, but you also want it to be available all the time. Whether you have a good network connection or not, you want it to be very high performance and low latency.”

If Apple isn’t going to harvest its users’ data, then it needs to get it from somewhere else – and sometimes that means getting information from its own employees.

That’s what happens in its health and fitness lab. It hides in a nondescript building in California: from the outside it is the kind of drab office space found throughout Apple’s hometown, but behind its walls is the key to some of the company’s most popular recent products.

Apple’s commitment to health is most obviously demonstrated in the Apple Watch but has made its way across all of the company’s products. Tim Cook has said that it will be the company’s “greatest contribution to mankind”. Gathering such data has already helped save people’s lives, and the company is clearly optimistic and excited about the new data it is set to collect, the new ways of collecting it and the new treatments it could make possible.

But health data is also among the most sensitive information there is about anyone. The chances are that your phone knows more about how well you are than your doctor does, but your doctor is bound by professional ethics, regulation and norms that ensure they don’t let that information leak out into the world.

Those protections are not just moral requirements, but practical necessities; the flow of information between people and their doctors can only happen if the relationship is protected by a commitment to privacy. What goes for medical professionals and patients works just the same for people and their phones.

To respond to that, Apple created its fitness lab. It is a place devoted to collecting data – but also a monument to the various ways that Apple works to keep that data safe.

Data streams in through the masks wrapped around the faces of the people taking part in the study, through the Apple Watches on their wrists, and from the employees tapping their findings into iPads that serve as high-tech clipboards.

In one room, there is an endless swimming pool where swimmers are analysed by a mask worn across their face. Next door, people are doing yoga wearing the same masks. Another section includes huge rooms that are somewhere between a jail cell and a fridge, where people are cooled down or heated up to see how that changes the data that is gathered.

All of that data will be used to help collect and make sense of even more data from ordinary people’s wrists. The lab’s purpose is to tune up the algorithms that make the Apple Watch work, and in doing so make the information it collects more useful. Apple might learn that there is a more efficient way to work out how many calories people are burning when they run, for instance, and that might lead to software and hardware improvements that make their way on to your wrist in the future.

But even as those vast piles of data are being collected, they are being anonymised and minimised. Apple employees who volunteer to participate in the studies scan themselves into the building and are then immediately disassociated from their ID cards.

Apple, by design, doesn’t even know which of its own employees it is harvesting data about. The employees don’t know why their data is being harvested, only that this work will one day end up in unknown future products.

Critics could argue that all of this is unnecessary: that Apple didn’t need to make its own chips or harvest its own data, subjecting both staff and silicon to strange environments to keep information private. Apple argues that it doesn’t want to buy in that data or those chips, and that doing all this is an attempt to build new features while protecting users’ safety.

If design is how Apple works, data privacy is how it works, too. The company is famously as private about its future products as it aims to be about its users’ information, but it is in those products that all this work and all those principles will be put to the test, and it is a test that could decide the future of both the company and the internet.
