Facebook can now detect ‘the most dangerous crime of the future’ and the AI used to make them

Facebook’s reverse engineering is ‘like recognising the components of a car based on how it sounds, even if this is a new car we’ve never heard of before’

Facebook has developed a model that can tell when a video is a deepfake, and can even identify which algorithm was used to create it.

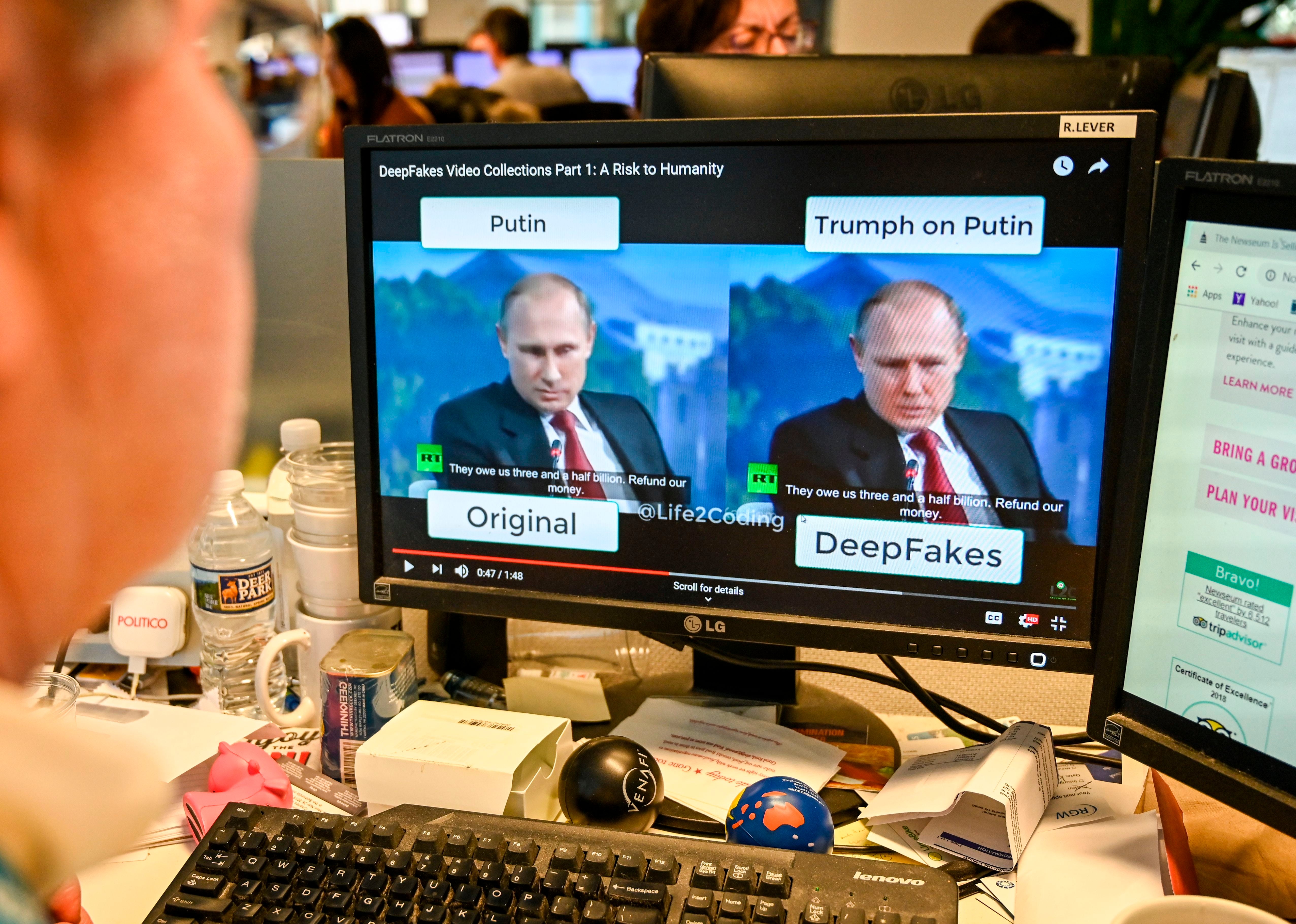

The term “deepfake” refers to a video where artificial intelligence and deep learning – an algorithmic learning method used to train computers – has been used to make a person appear to say something they have not.

Notable examples of deepfakes include a manipulated video of Richard Nixon’s Apollo 11 presidential address and another of Barack Obama insulting Donald Trump. Although such videos are relatively benign now, experts suggest that deepfakes could become the most dangerous crime of the future.

Detecting a deepfake relies on telling whether an image is real, but the information available to researchers for doing so can be limited: existing approaches rely on known input-output pairs or on hardware information that might not be available in the real world.

Facebook’s new process relies on detecting the unique patterns left behind by the AI model used to generate a deepfake. The video or image is run through a network that detects ‘fingerprints’ left on the image: imperfections introduced when the deepfake was made, such as noisy pixels or asymmetrical features. These fingerprints can then be used to estimate the model’s ‘hyperparameters’.
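
The article does not describe how Facebook’s fingerprint estimator works internally, so what follows is only a toy Python sketch of the general idea, under loudly stated assumptions: images are small grids of numbers, a ‘fingerprint’ is the noise residual left after subtracting a blurred copy of each image, averaged over many images from the same source, and a hypothetical generator leaves a faint checkerboard pattern in everything it produces. The function names, the 3x3 blur and the checkerboard artifact are all illustrative; Facebook’s system uses a trained neural network, not a fixed filter.

```python
import random

def box_blur(img):
    """3x3 mean filter with edge clamping; a crude stand-in for a real denoiser."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    total += img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
            out[y][x] = total / 9.0
    return out

def residual(img):
    """Noise residual: what is left after subtracting the smoothed image."""
    blurred = box_blur(img)
    return [[p - b for p, b in zip(row, brow)] for row, brow in zip(img, blurred)]

def estimate_fingerprint(images):
    """Average residuals from one source: scene content tends to cancel out,
    while a pattern the generator leaves in every image accumulates."""
    h, w = len(images[0]), len(images[0][0])
    acc = [[0.0] * w for _ in range(h)]
    for img in images:
        for y, row in enumerate(residual(img)):
            for x, v in enumerate(row):
                acc[y][x] += v / len(images)
    return acc

def correlation(a, b):
    """Pearson correlation between two equally sized 2-D grids."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    n = len(fa)
    ma, mb = sum(fa) / n, sum(fb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    va = sum((x - ma) ** 2 for x in fa)
    vb = sum((y - mb) ** 2 for y in fb)
    return cov / (va * vb) ** 0.5

def checkerboard(size):
    """Hypothetical generator artifact: alternating +1/-1 pixels."""
    return [[1.0 if (x + y) % 2 == 0 else -1.0 for x in range(size)] for y in range(size)]

def toy_image(rng, size=16, artifact=0.0):
    """Random 'content' plus `artifact` times the checkerboard (0.0 means a real photo)."""
    pattern = checkerboard(size)
    return [[rng.random() + artifact * pattern[y][x] for x in range(size)] for y in range(size)]

if __name__ == "__main__":
    rng = random.Random(0)
    fakes = [toy_image(rng, artifact=0.1) for _ in range(100)]
    reals = [toy_image(rng) for _ in range(100)]
    pattern = checkerboard(16)
    print("fake fingerprint vs artifact:", round(correlation(estimate_fingerprint(fakes), pattern), 2))
    print("real fingerprint vs artifact:", round(correlation(estimate_fingerprint(reals), pattern), 2))
```

Averaging is the key design choice here: the random content differs from image to image and largely cancels out, while an artifact the generator repeats in every image survives, so the ‘fake’ fingerprint correlates strongly with the checkerboard and the ‘real’ one does not.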

“To understand hyperparameters better, think of a generative model as a type of car and its hyperparameters as its various specific engine components. Different cars can look similar, but under the hood they can have very different engines with vastly different components”, Facebook says.

“Our reverse engineering technique is somewhat like recognising the components of a car based on how it sounds, even if this is a new car we’ve never heard of before.”

Finding these traits is vital, because deepfake software is easy to customise, which allows malicious actors to hide their tracks. Facebook claims it can establish whether a piece of media is a deepfake from a single still image and, by identifying which neural network was used to create it, the technique could help trace the individual or group responsible.

“Since generative models mostly differ from each other in their network architectures and training loss functions, mapping from the deepfake or generative image to the hyperparameter space allows us to gain critical understanding of the features of the model used to create it”, Facebook says.
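
In Facebook’s system, the mapping from fingerprint to hyperparameters is learned by a trained network. As a much simpler, hypothetical illustration of reading a design choice off a fingerprint, suppose a generator’s upsampling step leaves a spike in its (one-dimensional, for simplicity) fingerprint every `period` pixels. The period can then be recovered from the fingerprint’s autocorrelation, even for a model never seen before. The names `estimate_period` and `toy_fingerprint`, the 0.5 threshold and the spike pattern are all assumptions made for this sketch, not details from Facebook’s research.

```python
import random

def autocorrelation(signal, lag):
    """Normalised autocorrelation of a 1-D signal at the given lag."""
    n = len(signal)
    mean = sum(signal) / n
    dev = [s - mean for s in signal]
    var = sum(d * d for d in dev)
    if var == 0:
        return 0.0  # a constant signal carries no periodic structure
    return sum(dev[i] * dev[i + lag] for i in range(n - lag)) / var

def estimate_period(fingerprint, max_lag=16, threshold=0.5):
    """Return the smallest lag at which the fingerprint strongly repeats, or None."""
    for lag in range(1, max_lag + 1):
        if autocorrelation(fingerprint, lag) >= threshold:
            return lag
    return None

def toy_fingerprint(rng, period, length=256, noise=0.2):
    """Hypothetical fingerprint: a spike every `period` samples plus Gaussian noise."""
    return [(1.0 if i % period == 0 else 0.0) + rng.gauss(0.0, noise) for i in range(length)]

if __name__ == "__main__":
    for period in (2, 3, 4, 8):
        fp = toy_fingerprint(random.Random(period), period)
        print("true period", period, "estimated", estimate_period(fp))
```

Returning the smallest strongly repeating lag, rather than the lag with the highest autocorrelation, avoids mistaking a multiple of the true period (which also repeats) for the period itself.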

This is unlikely to be the end of the battle against deepfakes, as the technology is still evolving, and malicious actors who know about this research can plan around it. The research will, however, help engineers investigate current deepfake incidents and push forward the ways in which such videos and images can be detected.
