Facial recognition has been creeping into British life as the government looks the other way

Analysis: While police forces have been taken to court over technology, private companies have been allowed to implement it covertly, Lizzie Dearden writes

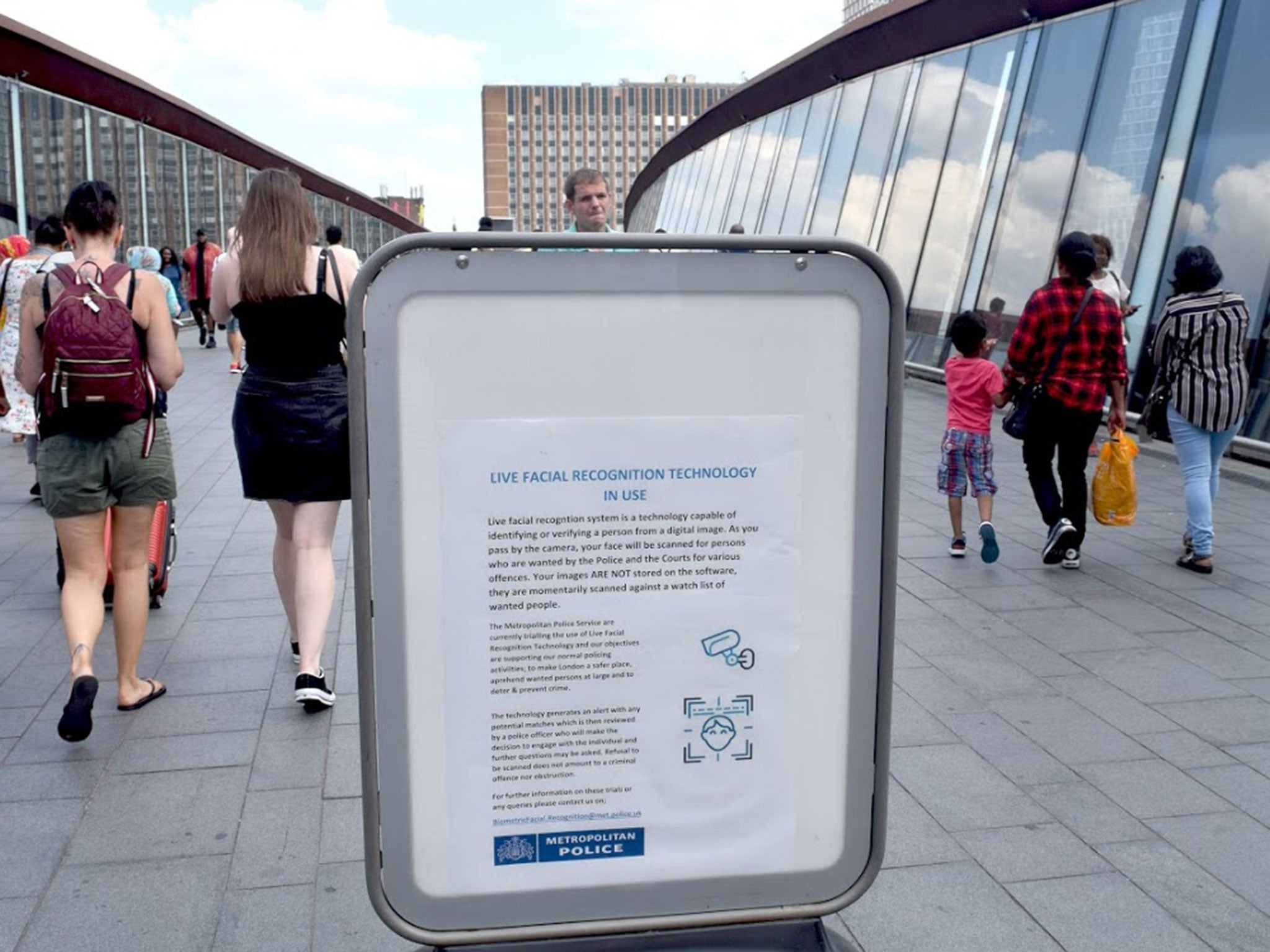

Facial recognition is once again in the spotlight after revelations that the controversial technology has been covertly used in public spaces across England.

The King’s Cross estate in London, Meadowhall shopping centre in Sheffield, the World Museum in Liverpool and the Millennium Point conference centre in Birmingham are among the locations where members of the public have been unwittingly scanned.

While police have been heavily criticised for trials of facial recognition with “staggeringly inaccurate” results, private companies have been able to implement it without public knowledge or consent.

Revelations that the technology has been used in shops, conference centres and museums are seen by privacy campaigners as proof of their warnings that “lawless” facial recognition would spread.

Although its use is governed by data protection and privacy laws, there is no specific legal framework on facial recognition in the UK.

Earlier this year, a court case over trials conducted by South Wales Police was told that the only restrictions on the technology “came from self-restraint”, rather than the law.

The government has faced repeated calls to regulate facial recognition, amid warnings that privacy would be trampled on without a proper legal framework.

This legal gap allows companies like those named this week to deploy it without notifying the authorities or the general public.

Unlike Scotland Yard and South Wales Police, private firms have not had to release results.

While police forces compare scanned people’s faces to a watchlist of wanted criminals on their databases, it is unclear what the companies are checking images against, or what they are looking for.

The owners of the King’s Cross estate have claimed they use facial recognition “in the interest of public safety”, but have demonstrated no benefit from technology that research suggests could be racially discriminatory.

They claimed that cameras in the district “have sophisticated systems in place to protect the privacy of the general public”, but gave no further detail.

Under the Data Protection Act 2018, anyone scanned has the right to be informed about how their image has been collected and used.

But the assurance is meaningless if the public are not aware that their image has been captured.

Proponents of facial recognition see it as a necessary, and inevitable, tool to maximise the effectiveness of CCTV cameras that are already commonplace across the UK.

Some police leaders voice frustration that members of the public will willingly use it to unlock their phone or for other tasks of convenience, but oppose the authorities’ use of the same technology.

Police leaders vehemently deny claims that the remit of facial recognition in Britain could be widened to match the authoritarian excesses seen in China.

But the “nothing to hide, nothing to fear” mantra wears thin for technology that has so far misidentified members of the public as potential criminals in 96 per cent of alerts in the Metropolitan Police trials.

The government has so far resisted calls to regulate facial recognition, but mounting public concern may soon force an intervention.