How the First World War changed the weather for good

Forecasters stopped looking for patterns in the past, and started using numbers to look solidly at the future

Culture has rarely tired of speaking about the weather. Pastoral poems detail the seasonal variations in weather ad nauseam, while the term “pathetic fallacy” is often taken to refer to a Romantic poet’s wilful translation of external phenomena – sun, rain, snow – into aspects of his own mind. Victorian novels, too, use weather as a device to convey a sense of time, place and mood: the fog in Dickens’s Bleak House (1853), for example, or the wind that sweeps through Emily Brontë’s Wuthering Heights (1847).

And yet these same old conversations fundamentally changed tense during the First World War. This was because, during the war, weather forecasting turned from a practice based on looking for repeated patterns in the past into a mathematical model that looked towards an open future.

Needless to say, a great deal depended on accurate weather forecasting in wartime: aeronautics, ballistics, the drift of poison gas. But forecasts at this time were far from reliable.

Although meteorology had developed throughout the Victorian era to the point of producing same-day weather maps and daily weather warnings (thanks to a telegraph service that could literally move faster than the wind), forecasting how the weather would evolve and change over time remained notoriously inadequate.

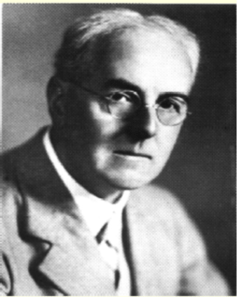

English mathematician Lewis Fry Richardson saw that pre-war weather forecasting was far too archival in nature: it merely matched weather phenomena observable in the present to historical records of previous weather phenomena.

He deemed this a fundamentally unscientific method, as it presupposed that past evolutions of the atmosphere would repeat themselves in the future. For the sake of more accurate prediction, he argued, forecasters had to feel free to disregard the index of the past.

And so, in 1917, while serving with the Friends’ Ambulance Unit on the Western Front, Richardson decided to experiment with making a numerical forecast: one based on scientific laws rather than past trends. He was able to do so because, for 20 May 1910 (also, funnily enough, the date of Edward VII’s funeral in London, the last great gathering of Europe’s royalty before the First World War), Norwegian meteorologist Vilhelm Bjerknes had compiled simultaneous observations of atmospheric conditions across Western Europe. These recorded temperature, air pressure, air density, cloud cover, wind velocity and the valences of the upper atmosphere.

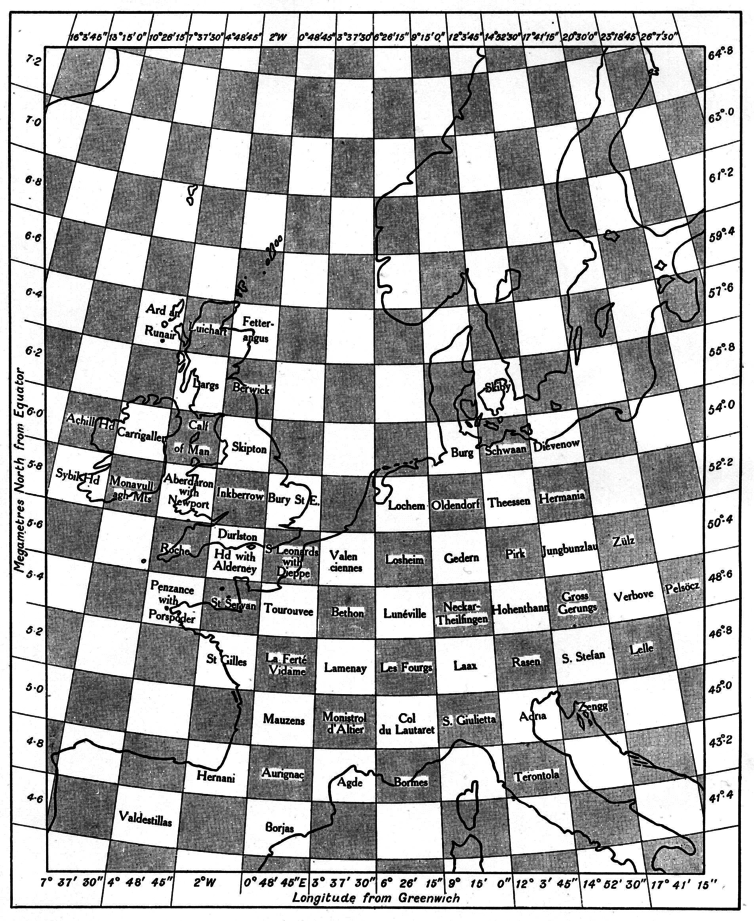

This data allowed Richardson to build a mathematical weather forecast. Of course, he already knew the weather for the day in question (he had Bjerknes’s record to hand, after all); the challenge was to generate from this record a numerical model that could then be applied to the future. So he drew up a grid over Europe, each cell incorporating Bjerknes’s weather data, including local variables such as the extent of open water affecting evaporation, and five vertical divisions in the upper air.

Richardson claimed that it took him six weeks to calculate a six-hour forecast for a single location. Critics have wondered whether even six weeks was enough time. In any case, the first numerical forecast was woefully out of step with what actually happened: it predicted an absurd change in surface pressure of 145 hectopascals over six hours. And Richardson’s forecast took far longer to calculate than the weather it was calculating took to happen.

Yet scientific failures of this magnitude often have important consequences, not least in this case because Richardson’s mathematical approach to weather forecasting was largely vindicated after the invention of the first digital computers, or “probability machines”, in the 1940s. His numerical approach remains the basis of much weather forecasting today. His experiment also contributed to the development of an international field of scientific meteorology.

This “new meteorology”, as it was sometimes called, became culturally pervasive in the years following the First World War. Not only did it lift the metaphors of trench warfare and place them in the air (the “weather front” taking its name directly from the battle fronts of the war), it also insisted that to speak of the weather meant to speak of a global system of energies opening, ever anew, onto different futures.

And it was reflected in the literature of the period. Writing in the 1920s, Austrian novelist Robert Musil opened his masterpiece The Man Without Qualities (1930-43), a book whose protagonist is a mathematician, with the scientific language of meteorology. “The isotherms and isotheres were functioning as they should,” we are told. “The water vapour in the air was at its maximal state of tension … It was a fine day in August 1913.”

What is interesting here is not simply that the everyday language of “a fine day” is determined by a set of newfangled scientific abstractions, but also that a novel written after the war dares to inhabit the virtual outlook of the years before it.

As in Virginia Woolf’s To the Lighthouse (1927), where the pre-war question of whether or not the weather will be “fine” tomorrow takes on a general significance, Musil’s irony depends upon occupying a moment in history when the future was truly exceptional: what was about to happen next was nothing like the past. Musil’s novel, and Woolf’s too, is in one sense a lament for a failed prediction: why couldn’t the war have been predicted?

In 1922, writing in the wake of his own initial failure as a forecaster, Richardson imagined a time in which all weather might be calculable before it takes place. In a passage of dystopian fantasy, he conjured up an image of what he called a “computing theatre”: a huge structure of surveillance through which weather data could be collected and processed, and the future managed.

The disconcerting power of this vision, and its mathematical model, emerged from the idea that weather, encoded as information to be exchanged in advance of its happening, could be finally separable from experience. With the atmosphere of the future mass-managed in this way, we would never again need to feel under the weather.

Today, it has become commonplace to check our phones for the accurate temperature while standing outside in the street, and climate change has forced us to reckon with a meteorological future that we are sure will not be in balance with the past. With this in mind, it is perhaps worth returning once more to the cultural moment of “new meteorology” to contemplate its central paradox: that our demand to know the future in advance goes hand-in-hand with an expectation that the future will be unlike anything we’ve seen before.

Barry Sheils is a lecturer in 20th- and 21st-century literature at Durham University. This article was originally published on The Conversation (www.theconversation.com)