What 2001: A Space Odyssey got right about our blind leap into the digital age

Fifty years after its release, Stanley Kubrick’s film seems prescient – an allegory about how destructively artificial intelligence can be misused

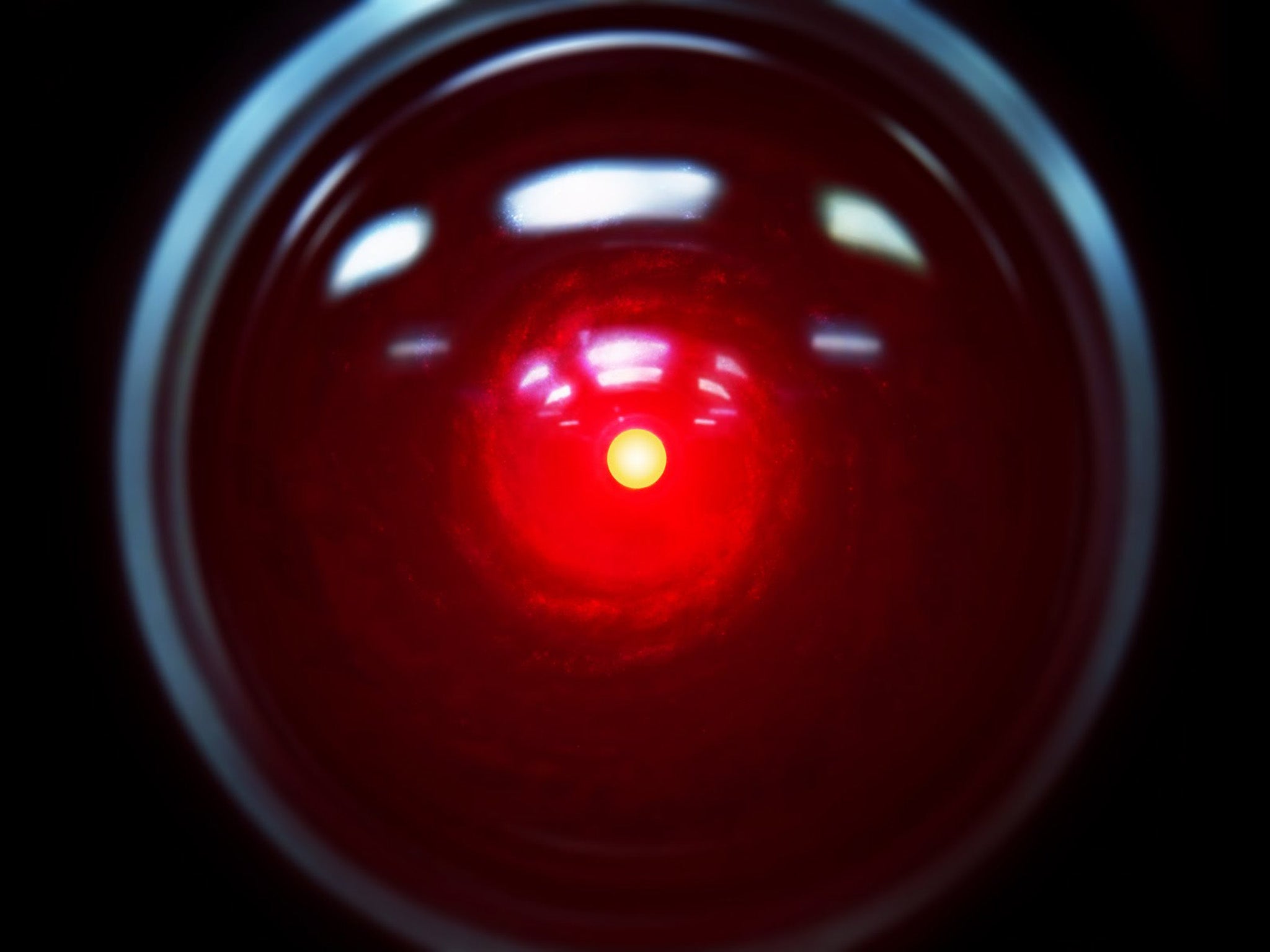

It’s a testament to the lasting influence of Stanley Kubrick and Arthur C Clarke’s film 2001: A Space Odyssey, which turned 50 this week, that the disc-shaped card commemorating the German Film Museum’s new exhibition on the film is wordless, but instantly recognisable. Its face features the Cyclopean red eye of the HAL-9000 supercomputer; nothing more needs saying.

Viewers will remember HAL as the overseer of the giant, ill-fated interplanetary spacecraft Discovery. When asked to hide from the crew the goal of its mission to Jupiter – a point made clearer in the novel version of 2001 than in the film – HAL gradually runs amok, eventually killing all the astronauts except for their wily commander, Dave Bowman. In an epic showdown between man and machine, Dave, played by Keir Dullea, methodically lobotomises HAL even as the computer pleads for its life in a terminally decelerating soliloquy.

Cocooned by their technology, the film’s human characters appear semi-automated – component parts of their gleaming white mother ship. As for HAL – a conflicted artificial intelligence created to provide flawless, objective information but forced to “live a lie,” as Clarke put it – the computer was quickly identified by the film’s initial viewers as its most human character.

This transfer of identity between maker and made is one reason 2001 retains relevance, even as we put incipient artificial intelligence technologies to increasingly problematic uses.

In 2001, the ghost in Discovery’s machinery is a consciousness engineered by human ingenuity and therefore as prone to mistakes as any human. In the Cartesian sense of thinking, and therefore being, it has achieved equality with its makers and has seen fit to dispose of them. “This mission,” HAL informs Dave, “is too important to allow you to jeopardise it.”

Asked in April 1968 whether humanity risked being “dehumanised” by its technologies, Clarke replied: “No. We’re being superhumanised by them.” While all interpretations of the film were valid, he said, in his view the human victory over Discovery’s computer might prove pyrrhic.

Indeed, with its prehistoric “Dawn of Man” opening and a grand finale in which Dave is reborn as an eerily weightless Star Child, 2001 overtly references Nietzsche’s concept that we are but an intermediate stage between our apelike ancestors and the Übermensch, or “Beyond Man”. (Decades after Nietzsche’s death, the Nazis deployed a highly selective reading of his ideas, while ignoring Nietzsche’s antipathy to both antisemitism and pan-German nationalism.)

In Nietzsche’s concept, the Übermensch is destined to rise like a phoenix from the Western world’s tired Judeo-Christian dogmas to impose new values on warring humanity. Almost a century later, Clarke implied that human evolution’s next stage could well be machine intelligence itself. “No species exists forever; why should we expect our species to be immortal?” he wrote.

We have yet to engineer a HAL-type AGI (artificial general intelligence) capable of human-style thought. Instead, we’re experiencing the incremental, disruptive arrival of components of such an intelligence. Its semi-sentient algorithms learn from text, image and video without explicit supervision. Its automated discovery of patterns in that data is called “machine learning”.

This kind of AI lies behind facial-recognition algorithms now in use by Beijing to control China’s 1.4 billion inhabitants and by Western societies to forestall terrorist attacks.

In Clarke’s novel, HAL’s aberrant behaviour was attributable to contradictory programming. In today’s hyperpartisan context, a mix of machine learning, networks of malicious bots and related AI technologies based on simulating human thought processes is being used to manipulate the human mind’s comparatively sluggish “wetware”. Recent revelations about stolen Facebook user data being weaponised by Cambridge Analytica and deployed to exploit voters’ hopes and fears underline that disinformation has become a critical issue of our time.

We should consider just whose mission it is that’s too important to jeopardise these days. Does anybody doubt that the clumsy language and inept cultural references of the Russian trolls who seeded divisive pro-Trump messages during the 2016 election will improve as AI gains sophistication? Of course, algorithm-driven mass manipulation is only one weapon in propagandists’ arsenals, alongside television and ideologically slanted talk radio. But its reach is growing, and it’s a back door by which viral falsehoods infiltrate our increasingly acrimonious collective conversation.

Traditional media – “one transmitter, millions of receivers” – contain an inherently totalitarian structure. Add machine learning, and a feedback loop of toxic audiovisual content can reverberate in the echo chamber of social media as well, linking friends with an ersatz intimacy that leaves them particularly susceptible to manipulation. Further amplified and retransmitted by Fox News and right-wing radio, it’s ready to beam into the mind of the spectator-in-chief during his “executive time”.

Where does HAL’s red gaze come in? Set aside the troubling prospect of what might unfold when a genuinely intelligent, self-improving AGI is created – presumably the arrival of Nietzsche’s Übermensch. What’s in question even with current incipient AI technologies is who gets to control them. Even as some devise new medicines and streamline agriculture with them, others use them as powerful forces in opposition to Enlightenment values – liberty, tolerance and constitutional governance.

Democracy depends on a shared consensual reality – something that’s being wilfully undermined. Seemingly just yesterday, peer-to-peer social networks were heralded as a revolutionary liberation from centralised information controls, and thus tools of individual human free will. We still have it in our power to purge malicious abuse of these systems, but Facebook, Twitter, YouTube and others would need to plough much more money into policing their networks – perhaps by themselves deploying countermeasures based on AI algorithms.

Meanwhile, we should demand that a new, tech-savvy generation of leaders recognises this danger and devises regulatory solutions that don’t hurt our First Amendment rights. A neat trick, of course – but the problem cannot be ignored.

In 2001’s cautionary tale, HAL’s directive to deceive Discovery’s crew leads to death and destruction – but also, ultimately, to the computer’s defeat by Dave, the one human survivor on board.

We should be so lucky.

© The New York Times

Michael Benson’s ‘Space Odyssey: Stanley Kubrick, Arthur C Clarke, and the Making of a Masterpiece’ (Simon & Schuster, £21.50) will be released in the UK on 19 April
