Robot wars: The rise of artificial intelligence

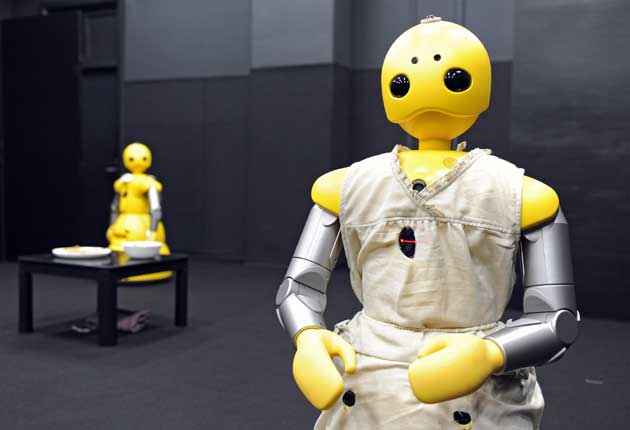

They can entertain children, feed the elderly, care for the sick. But warnings are being sounded about the march of robots

The robots are not so much coming; they have arrived. But instead of dominating humanity with superior logic and strength, they threaten to create an underclass of people who are left without human contact.

The rise of robots in the home, in the workplace and in warfare needs to be supervised and controlled by ethical guidelines which limit how they can be used in sensitive scenarios such as baby-sitting, caring for the elderly, and combat, a leading scientist warns today.

Sales of professional and personal service robots worldwide were estimated to have reached about 5.5 million this year – and are expected to more than double to 11.5 million by 2011 – yet there is little or no control over how these machines are used. Some help busy professionals entertain children; other machines feed and bathe the elderly and incapacitated.

Professor Noel Sharkey, an expert on artificial intelligence based at the University of Sheffield, warns that robots are being introduced into potentially sensitive situations that could leave people isolated and deprived of human contact, because of the tendency to leave robots alone with their charges for long periods.

"We need to look at guidelines for a cut-off so we have a limit to the contact with robots," Professor Sharkey said. "Some robots designed to look after children now are so safe that parents can leave their children with them for hours, or even days."

More than a dozen companies based in Japan and South Korea manufacture robot "companions" and carers for children. For example, NEC has tested its cute-looking personal robot PaPeRo on children: the device lives at home with a family, recognises their faces, can mimic their behaviour and be programmed to tell jokes, all the while exploring the house. Many robots are designed as toys, but they can also take on childcare roles by monitoring the movements of a child and communicating with a parent in another room, or even another building, through wireless computer connection or mobile phone.

"Research into service robots has demonstrated a close bonding and attachment by children, who, in most cases, prefer a robot to a teddy bear," Professor Sharkey said. "Short-term exposure can provide an enjoyable and entertaining experience that creates interest and curiosity. But because of the physical safety that robot minders provide, children could be left without human contact for many hours a day or perhaps several days, and the possible psychological impact of the varying degrees of social isolation on development is unknown."

Less playful robots are being developed to look after elderly people. Secom makes a feeding robot called My Spoon which helps disabled people to eat food from a table. Sanyo has built an electric bathtub robot that automatically washes and rinses people with limited mobility.

"At the other end of the age spectrum [to child care], the relative increase in many countries in the population of the elderly relative to available younger care-givers has spurred the development of elder-care robots," Professor Sharkey said.

"These robots can help the elderly to maintain independence in their own homes, but their presence could lead to the risk of leaving the elderly in the exclusive care of machines without sufficient human contact. The elderly need the human contact that is often provided only by caregivers and people performing day-to-day tasks for them."

In the journal Science, Professor Sharkey calls for ethical guidelines to cover all aspects of robotic technology, not just in the home and workplace but also on the battlefield, where armed robots such as the missile-carrying Predator drones used in Iraq and Afghanistan are already deployed with lethal effect. The US Future Combat Systems project aims to use robots as "force multipliers", with a single soldier initiating large-scale ground and aerial attacks by an army of robots.

"Robots for care and for war represent just two of many ethically problematic areas that will soon arise from the rapid increase and spreading diversity of robotics applications," Professor Sharkey said. "Scientists and engineers working in robotics must be mindful of the potential dangers of their work, and public and international discussion is vital in order to set policy guidelines for ethical and safe application before the guidelines set themselves."

The call for controls over robots goes back to the 1940s, when the science-fiction author Isaac Asimov drew up his famous three laws of robotics. The first law stated that robots must not harm people; the second that they must obey the commands of people, provided these do not conflict with the first law; and the third that robots must protect their own existence, provided this does not conflict with the other two laws.

Asimov wrote a collection of science fiction stories called I, Robot which explored the relationship between machines and morality. He wanted to counter the long history of fictional accounts of dangerous automatons – from the Jewish Golem to Mary Shelley's Frankenstein – and used his three laws as a literary device to explore the ethical issues arising from human interaction with non-human, intelligent beings. But late 20th-century predictions about the rise of machines endowed with superior artificial intelligence have not been realised, although robot scientists have given their mechanical protégés quasi-intelligent traits such as simple speech recognition, emotional expression and face recognition.

Professor Sharkey believes that even dumb robots need to be controlled. "I'm not suggesting, like Asimov, to put ethical rules into robots, but just to have guidelines on how robots are used," he said. "Current robots are not bright enough even to be called stupid. If I even thought they would be superior in intelligence, I would not have these concerns. They are dumb machines not much brighter than the average washing machine, and that's the problem."

Isaac Asimov: The three laws of robotics

The science fiction author Isaac Asimov, who died in 1992, coined the word "robotics" to describe the study of robots. In 1940, Asimov drew up his three laws of robotics, partly as a literary device to explore the ethical issues arising from interaction with intelligent machines.

* First Law: a robot must not harm a human being or, through inaction, allow a human being to be harmed.

* Second Law: a robot must obey the commands of human beings, except where the orders conflict with the first law.

* Third Law: a robot must protect its own existence so long as this does not conflict with the first two laws.

Later on, Asimov amended the laws by adding two more. The "zeroth" law stated that a robot must not harm humanity. It addresses the ethical problem that obeying the first law to protect one person could, in the process, put other human beings at risk.

Asimov also added a final "law of procreation" stating that robots must not make other robots that do not follow the laws of robotics.
