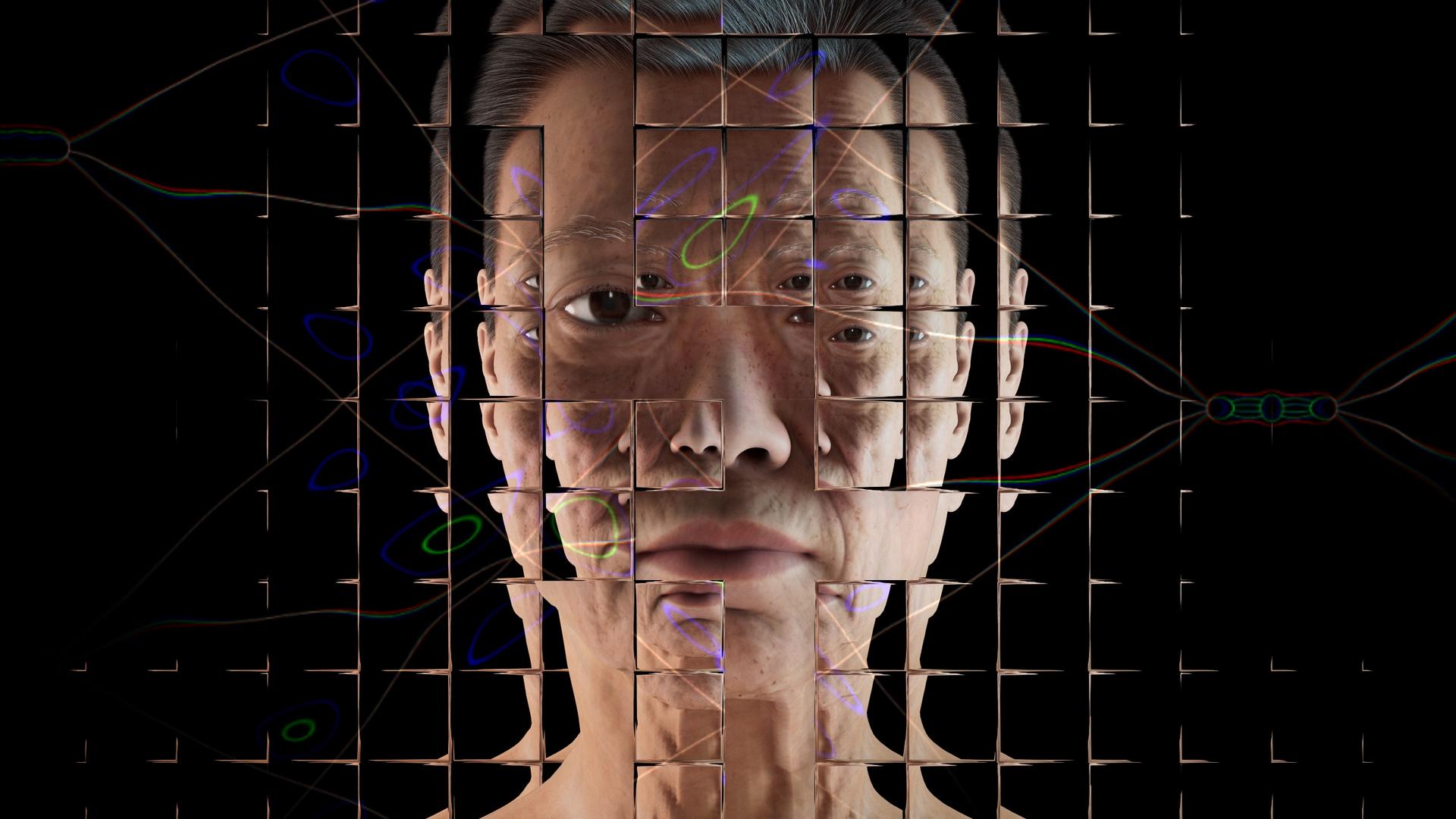

Google’s former head says AI is as dangerous as nuclear weapons

Eric Schmidt said that he was ‘naive about the impact of what we were doing’ but that ‘arming’ AI could ‘trigger the other side’

Google’s former chief executive Eric Schmidt has said that artificial intelligence is as dangerous as nuclear weapons.

Speaking at the Aspen Security Forum earlier this week, Mr Schmidt said that he was “naive about the impact of what we were doing”, but that information is “incredibly powerful” and “government and other institutions should put more pressure on tech to put these things consistent with our values.”

“The leverage that tech has is very, very real. If you think about, how will we negotiate an AI agreement? First you have to have technologists that understand what’s going to happen, and then you have awareness on the other side.

“Let’s say we want to have a chat with China on some kind of treaty around AI surprises. Very reasonable. How would we do it? Who in the US government would work with us? And it’s even worse on the Chinese side. Who do we call? … we’re not ready for the negotiations we need.

“In the 50s and 60s, we eventually worked out a world where there was a ‘no surprise’ rule about nuclear tests and eventually they were banned. It’s an example of a balance of trust, or lack of trust, it’s a ‘no surprises’ rule.

“I’m very concerned that the U.S. view of China as corrupt or Communist or whatever, and the Chinese view of America as failing…will allow people to say ‘Oh my god, they’re up to something,’ and then begin some kind of conundrum … because you’re arming or getting ready, you then trigger the other side.”

The capabilities of artificial intelligence have been stated – and overstated – numerous times over the years. Tesla chief executive Elon Musk has often said that AI is highly likely to be a threat to humans, and recently Google fired a software engineer who claimed its artificial intelligence had become self-aware and sentient.

However, experts have often reminded people that the real issue with AI is what it is trained to do and how humans use it. If these systems are trained on flawed, racist, or sexist data, their results will reflect that.