Is Apple Intelligence safe? How an Elon Musk tweet raised the big question about the future of the iPhone

New Apple AI tools include limitations and safeguards – even if the Tesla and X boss is not satisfied with them. But does the unpredictable nature of artificial intelligence mean the curbs will really work? Andrew Griffin explores what lies beneath...

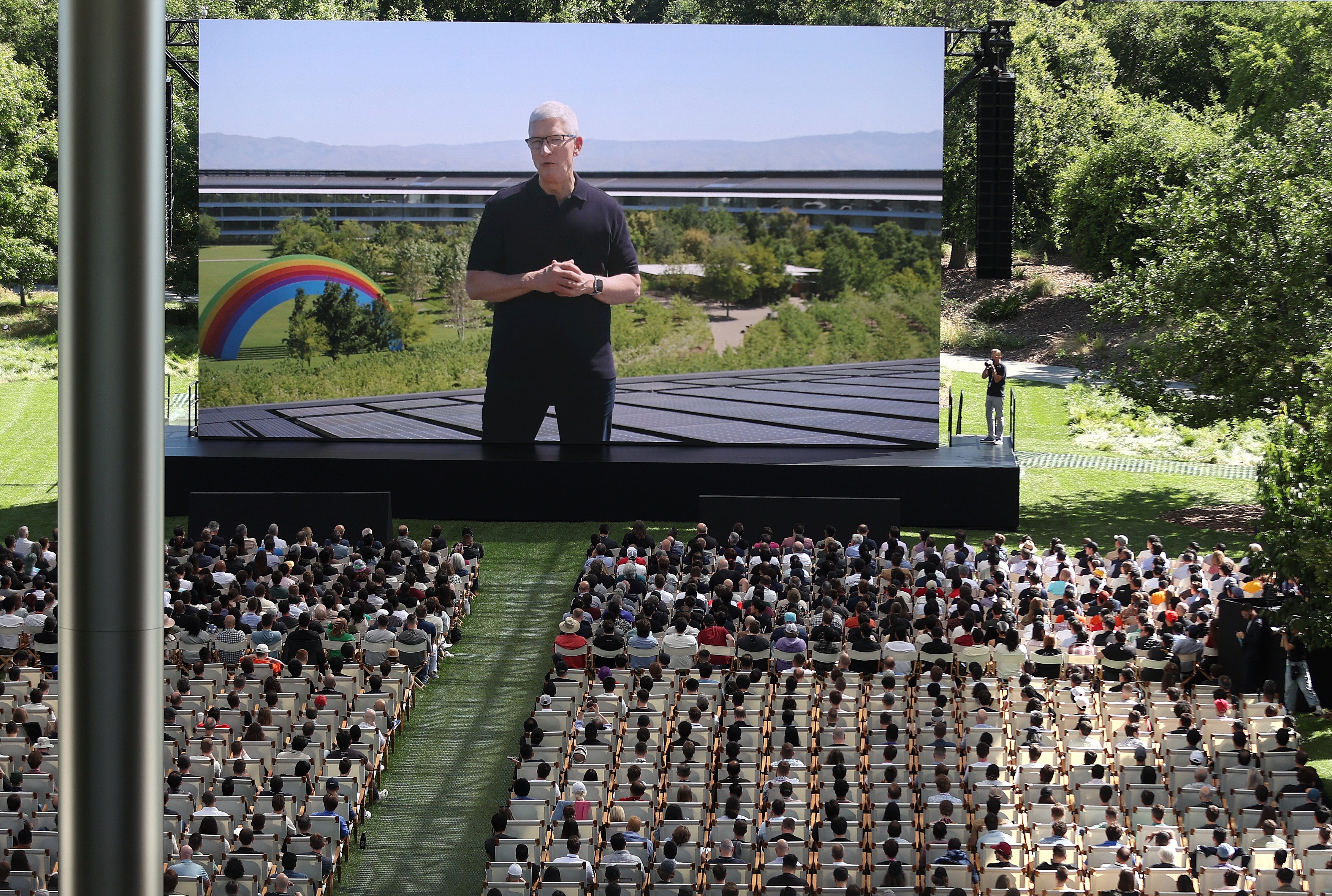

It wasn’t the reaction that Apple was hoping for – though nobody can ever really plan for an intervention from Elon Musk. Apple had just spent almost two hours enumerating its new AI features and the protections that had been added to ensure that they were private and secure. Then the tweet dropped.

“If Apple integrates OpenAI at the OS level, then Apple devices will be banned at my companies. That is an unacceptable security violation,” Musk wrote. “And visitors will have to check their Apple devices at the door, where they will be stored in a Faraday cage.”

There was no “if” about it: during its event, Apple had mostly talked about its own new “Apple Intelligence”, but it had also revealed that it would integrate OpenAI’s ChatGPT with Siri, so that the assistant could deal with questions it can’t answer on its own. It was this that so frustrated the Tesla and SpaceX boss, prompting an outburst that overshadowed Apple’s announcement.

Moments after Musk’s tweet, Apple chief executive Tim Cook was on stage talking to journalists about what they could expect from Apple Intelligence. It “combines the power of generative models with personal context to deliver intelligence that's incredibly useful and relevant to users”, he said, adding that it does so “while setting a new standard for privacy and AI”.

He didn’t address Musk’s tweets explicitly. But even if he had not read them, he was certainly addressing the broader concern that now hangs over the whole tech industry: is this thing safe?

The anxieties around the safety of AI are so multifarious that they sometimes become hard to follow. But they largely boil down to a few things: that AI represents an assault on privacy, since it often relies on heavy and indiscriminate data collection and analysis; that it will make it too easy to create synthetic media, leading to the destruction of creativity, the flooding of the internet with low-quality text and images, or both; and the big fear that it will become so smart that it will wipe us all out, usually through a process whose workings go unexplained.

Apple has responded to the first two problems with a range of features, principles and commitments. Its relative lack of response to the last might be an important indication of the current state of artificial intelligence.

One of the more remarkable things about Musk’s attack is that Apple has leaned so heavily on privacy, both historically and in the announcement of its new AI tools. If anything, the charge made against Apple has been that it collects so little data that its AI tools are hobbled. At its launch, the company spent a long time explaining the privacy protections that had been built into its new AI tools, including those that arise from its partnership with OpenAI.

In his complaint, Musk appeared to misread the update, whether intentionally or not. Apple says that users will be asked for permission each time a request is to be handed to ChatGPT.

Even when ChatGPT is used, data collection is minimised: users’ IP addresses will be obscured, and OpenAI has committed not to store requests. The feature also comes with controls, so that a parent can switch it off for a child, or a company can do so on an employee’s device.

When Apple’s own AI tools are used, the safeguards are even stronger. Most of the work will be done on the device, so that nobody else can see it. When data does have to be sent to one of Apple’s servers, a new technology called “Private Cloud Compute” means it will not be personally identifiable and will be deleted as soon as the request has been completed.

But if Musk misrepresented the details of Apple’s updates, he accurately grasped the public mood. After a run of high-profile and embarrassing scandals at Microsoft, OpenAI and Google, public feeling is shifting against the companies making AI, if not the technology itself. Musk would have known that even a little insinuation of suspect behaviour would be enough to raise concerns.

It’s not clear exactly why Musk chose to raise those concerns, however. Some part of it may be his history with OpenAI: he helped found the company, but has since lost control of it. Seemingly partly as a response, last year he launched his own AI research company named xAI.

In addition to his attempt to damage a company that is both competitor and legal opponent, he may also have had more high-minded concerns. Musk was one of the founders of OpenAI who set it up out of a seemingly genuine fear that AI would pose a danger, and a belief that the only way to combat that danger was to research the technology in a transparent and free way.

Those founding principles have been largely abandoned by the OpenAI of today, under Sam Altman, who has been increasingly focused on turning the technology into a product that can be sold to companies such as Microsoft and now Apple. Altman’s success in doing so might have stirred professional jealousy in Musk, but that is not to say there isn’t a more philosophical worry.

Even before Musk’s tweet, Apple knew that it was stepping into a difficult position: investors, commentators and users were urging it to release new AI features, but would also punish any misstep it might make. The announcement came after a few weeks that saw Google’s AI recommend that people eat rocks, Microsoft criticised for recording everything people do on PCs so that AI can analyse it, and OpenAI accused of stealing Scarlett Johansson’s voice.

Apple’s response to these broader anxieties has largely been to limit both the promises it makes about the technology and the scope the technology has to act on people’s devices and data. Asked after the event how Apple planned to deal with hallucinations, its executives first pointed to the obvious, though not necessarily common, safeguards: the systems were trained on carefully chosen data, designed cautiously, applied in responsible ways and then tested extensively and rigorously to ensure any problems were spotted.

But then Apple’s software boss, Craig Federighi, said that one of the main restrictions was to “reliably curate the experiences that play to the strengths of the model, not to their weaknesses”. Setting today’s AI loose as a chatbot that is required to answer potentially critical problems is like “taking this teenager and telling him to go fly an aeroplane”, he suggested. “We have this entity and we’re like, ‘no, no, here’s what you're good at – you can flip burgers’.”

This was part of the reason for the integration of ChatGPT that had so spooked Musk. Federighi said that there were certain questions that were best answered by a large language model of the kind that OpenAI has made, and suggested that similar integrations with other models – Google’s Gemini, for instance, but also perhaps more niche systems like a medical chatbot – could come later.

There are other kinds of limitations, too. Apple’s image generation technologies are subject to constraints: they can generate illustrations in a limited range of styles, none of which are intended to be lifelike or realistic. And generally, the AI may make proactive suggestions, but it does not act on its own initiative: users will still have to generate and send the images it can make, or choose the automatically generated messages it can produce.

Still, Apple has admitted that there are likely to be problems. It has added safeguards, but the fundamentally unpredictable nature of AI means that it cannot know for sure whether they will work all the time. As such, those safeguards will be accompanied by what Apple said will be an easy way of reporting problems and a commitment to respond to those reports as quickly as possible.

There was one safety fear that was not raised during Apple’s event, or even in any of the responses to it, despite being perhaps the most popular point of discussion since the current AI hype cycle began. There was no discussion at all of the danger, so often proposed, that the system would become simply too clever and take over the world.

That might seem a slightly ridiculous proposition to anyone who has spent any significant time with Siri, a voice assistant whose misbehaviour is so renowned that it got a starring role in Curb Your Enthusiasm. And even as Apple introduced transformative changes to Siri, it made very clear that this was a long journey that it did not feel had ended.

Throughout its presentation, Apple spoke about getting closer to the aim it set when it released Siri in 2011: closer, but still a long way off. Even as Siri gains the ability to control the phone and piece together various bits of information, we are still far from the sci-fi possibilities of Her or Hal.

Apple, the most cautious of technology companies, did not feel compelled to warn about or place safeguards against that kind of more existential danger. It may be missing the big safety threat, or be so far behind that it does not need to have the same worries as its competitors, but just as likely it has no incentive to make up flattering fictions about how powerful its technology is. (Apple is in the constraining business of selling products that have to actually exist, unlike OpenAI, which mostly sells dreams and, just as often, nightmares.)

As we panic about artificial intelligence, it's worth remembering what Apple felt the real concern was: not that AI is too smart, but that it can occasionally be very stupid.
