Stephen Hawking right about dangers of AI... but for the wrong reasons, says eminent computer expert

Professor Mark Bishop says key human abilities, such as understanding and consciousness, are fundamentally lacking in so-called 'intelligent' computers

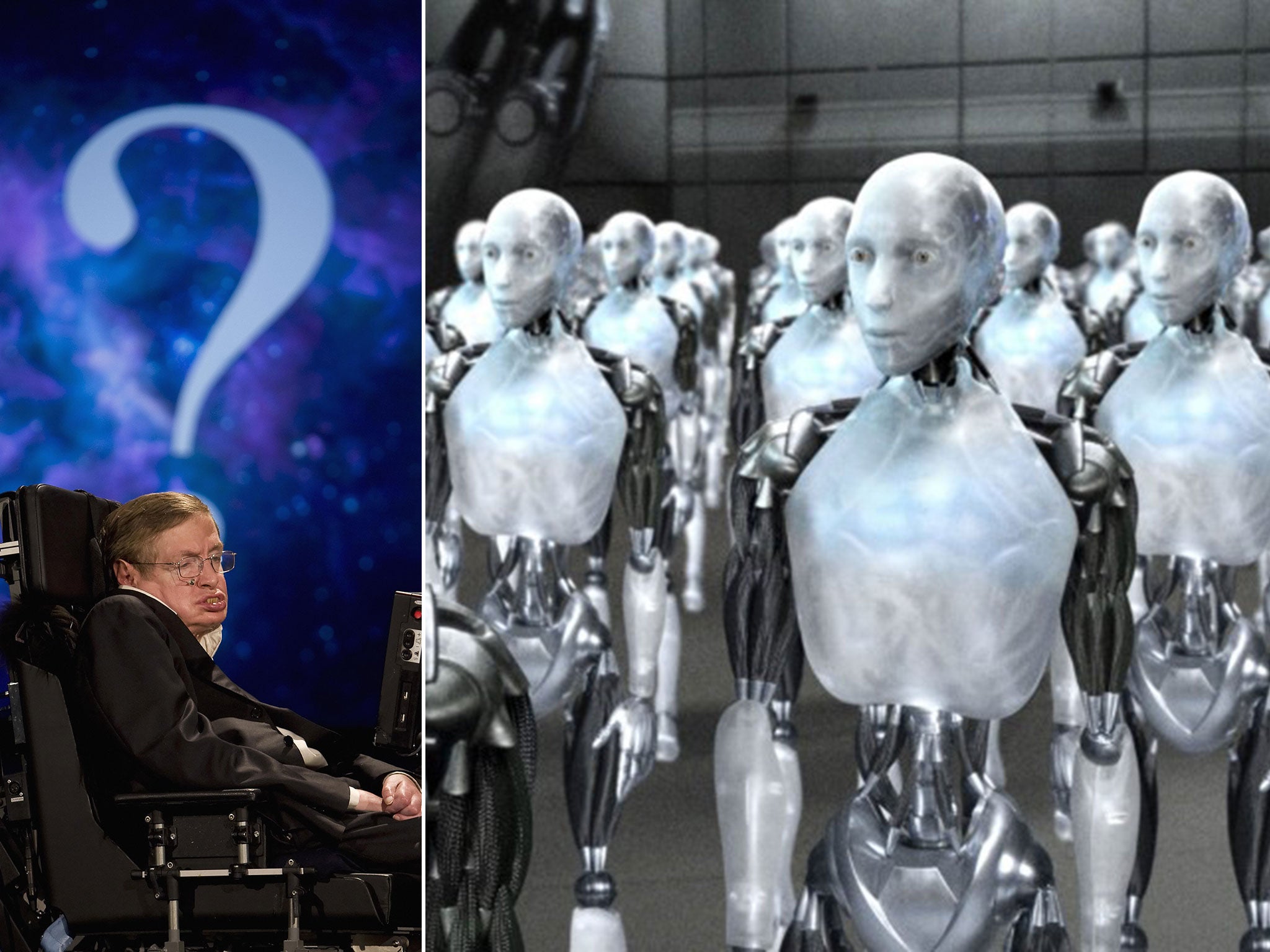

It is one of our biggest existential threats, something so powerful and dangerous that it could put an end to the human race by replacing us with an army of intelligent robots.

This may sound like the plot of a bad film, but in fact the warning came from Stephen Hawking, the world's most famous cosmologist, who told the BBC last week that he worries deeply about artificial intelligence and machines that can outsmart humanity.

"The development of full artificial intelligence could spell the end of the human race. It would take off on its own, and re-design itself at an ever-increasing rate," Professor Hawking said. "Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded."

His apocalyptic vision is not matched, however, by the views of one expert in artificial intelligence.

"It is not often that you are obliged to proclaim a much-loved international genius wrong, but in the alarming prediction regarding artificial intelligence and the future of humankind, I believe Professor Stephen Hawking is," said Mark Bishop, professor of cognitive computing at Goldsmiths, University of London.

Professor Hawking is not alone in being worried about the growing power of artificial intelligence (AI) to imbue robots with the ability both to replicate themselves and to increase the rate at which they get smarter, leading to a tipping point or "singularity" when they can outsmart humans.

The mathematician John von Neumann first talked of an AI singularity in the 1950s, and Ray Kurzweil, the futurologist, popularised the idea a few decades later.

Professor Kevin Warwick of Reading University said something similar in 1997 when promoting his book March of the Machines. More recently, Elon Musk, the PayPal entrepreneur, warned that AI could be our biggest existential threat and called for regulatory oversight.

But they are misguided, according to Professor Bishop, because there are some key human abilities, such as understanding and consciousness, which are fundamentally lacking in so-called "intelligent" computers.

"This lack means that there will always be a 'humanity gap' between any artificial intelligence and a real human mind. Because of this gap a human working in conjunction with any given AI machine will always be more powerful than that AI working on its own," Professor Bishop said.

"It is precisely this that prevents the runaway explosion of AI that Hawking refers to – AI building better AI until machine intelligence is better than the human mind, leading to the singularity point where the AI exceeds human performance across all domains," he said.

Fear of clever automatons goes back many decades. Nearly a hundred years ago, the Czech playwright Karel Čapek coined the word "robot", meaning "slave", in his 1920 play R.U.R., which depicts a machine takeover of humanity.

"The history of the subject is littered with researchers who claimed a breakthrough in AI as a result of their research, only for it later to be judged harshly against the weight of society's expectations," Professor Bishop said. But what does worry him about AI is the increasing reliance being placed on so-called intelligent machines.

"I am particularly concerned by the potential military deployment of robotic weapons systems – systems that can take a decision to militarily engage without human intervention – precisely because current AI is not very good and can all too easily force situations to escalate with potentially terrifying consequences," Professor Bishop said.

"So it is easy to concur that AI may pose a very real 'existential threat' to humanity without having to imagine that it will ever reach the level of superhuman intelligence," he said.

We should be worried about AI, he explained, but for the opposite reasons to those given by Professor Hawking.
