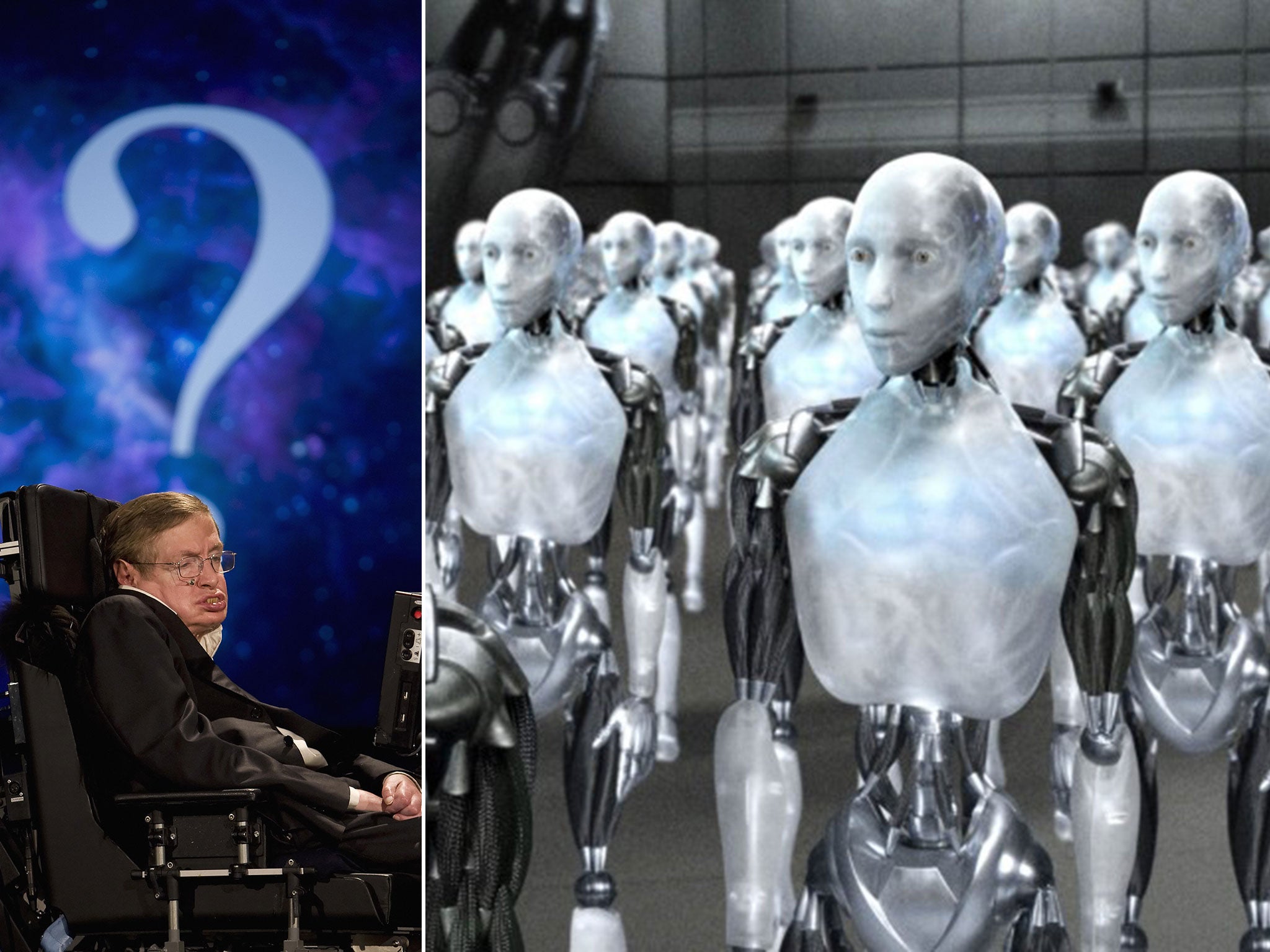

Stephen Hawking, Elon Musk and others call for research to avoid dangers of artificial intelligence

Document also signed by prominent employees of companies involved in AI, including Google, DeepMind and Vicarious

Hundreds of scientists and technologists have signed an open letter calling for research into the problems of artificial intelligence in an attempt to combat the dangers of the technology.

Signatories to the letter, which was created by the Future of Life Institute, include Elon Musk and Stephen Hawking, who has warned that AI could be the end of humanity. Anyone can sign the letter, which now has hundreds of signatures.

It warns that “it is important to research how to reap its benefits while avoiding potential pitfalls”. It says that “our AI systems must do what we want them to do” and lays out research objectives that will “help maximize the societal benefit of AI”.

That project will involve more than scientists and technology experts, the signatories say. Because it concerns society as well as AI, it will also require help from experts in fields ranging from “economics, law and philosophy to computer security, formal methods and, of course, various branches of AI itself”.

A document laying out those research priorities points out concerns about autonomous vehicles — which people are already “horrified” by — as well as machine ethics, autonomous weapons, privacy and professional ethics.

Elon Musk has also repeatedly voiced concerns about artificial intelligence, describing it as “summoning the demon” and the “biggest existential threat there is”.

The document is signed by many representatives of Google and of the artificial intelligence companies DeepMind and Vicarious. Academics from many of the world’s biggest universities have also signed it, including Cambridge, Oxford, Harvard, Stanford and MIT.
