Self-driving cars may have to be programmed to kill you

It's all a matter of ethics

Your support helps us to tell the story

From reproductive rights to climate change to Big Tech, The Independent is on the ground when the story is developing. Whether it's investigating the financials of Elon Musk's pro-Trump PAC or producing our latest documentary, 'The A Word', which shines a light on the American women fighting for reproductive rights, we know how important it is to parse out the facts from the messaging.

At such a critical moment in US history, we need reporters on the ground. Your donation allows us to keep sending journalists to speak to both sides of the story.

The Independent is trusted by Americans across the entire political spectrum. And unlike many other quality news outlets, we choose not to lock Americans out of our reporting and analysis with paywalls. We believe quality journalism should be available to everyone, paid for by those who can afford it.

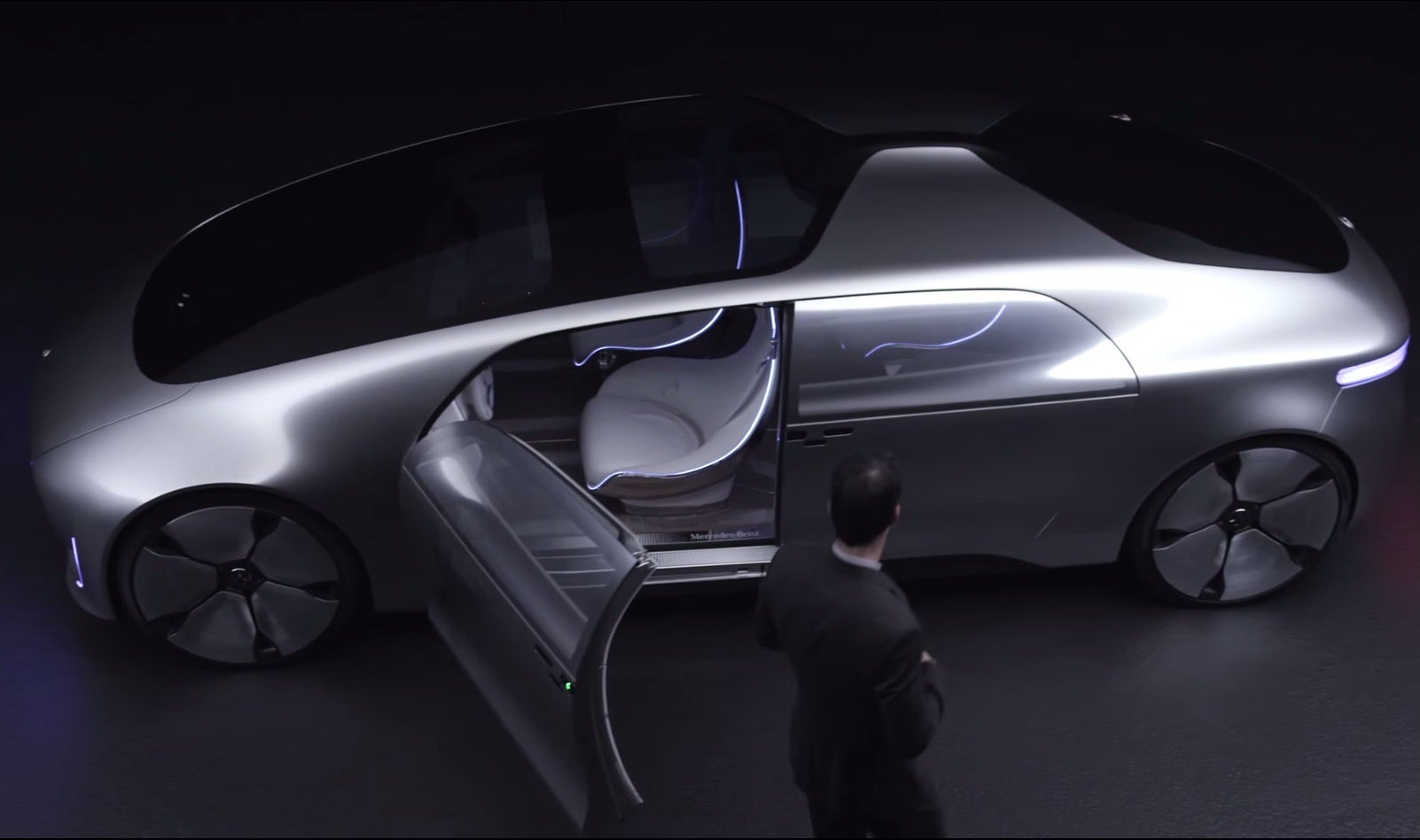

The self-driving cars that could soon dominate our roads, perhaps even making human-driven ones illegal some day, could end up being programmed to kill you if it means saving a larger number of lives.

This is rooted in a classic philosophical thought experiment, the Trolley Problem.

Consider this:

'There is a runaway trolley barrelling down the railway tracks. Ahead, on the tracks, there are five people tied up and unable to move. The trolley is headed straight for them. You are standing some distance off in the train yard, next to a lever. If you pull this lever, the trolley will switch to a different set of tracks. However, you notice that there is one person on the side track. You have two options: 1. Do nothing, and the trolley kills the five people on the main track or 2. Pull the lever, diverting the trolley onto the side track where it will kill one person. Which is the correct choice?'

Though the whole point of driverless cars is that they are better at avoiding accidents than humans, there will still be times when a collision is unavoidable. In those cases, it is conceivable that whoever programmes them will decide that ploughing you into a road barrier is better than continuing on course into a bus packed with schoolchildren.

"Utilitarianism tells us that we should always do what will produce the greatest happiness for the greatest number of people," said Ameen Barghi, a UAB and Oxford University scholar and bioethics expert. Under this approach, changing course to reduce the loss of life is the right thing to do.

Deontologists, however, argue that "some values are simply categorically always true.

"For example, murder is always wrong, and we should never do it," Barghi continued. So "even if shifting the trolley will save five lives, we shouldn't do it because we would be actively killing one," and the same reasoning applies to a self-driving car.

As technology and AI advance, these unsolved philosophical and ethical problems will only become more urgent and more political. As it stands, the technology appears to be advancing much faster than any useful debate about how best to implement it.
