Facebook unveils tool to let users see if they interacted with Russian trolls

More than 120 million people were exposed to posts by Russian-linked actors

Did you “like” a Russian troll? Facebook has rolled out a new tool to let you find out.

The social media titan unveiled a mechanism for users to see if they interacted with pages created by the Internet Research Agency, identified by American intelligence as a Kremlin-linked troll farm.

The move towards transparency comes after Facebook revealed that some 126 million Americans were exposed to posts promulgated by Russian-linked actors, who disseminated divisive and false posts as part of an effort to sow discord ahead of the 2016 presidential election.

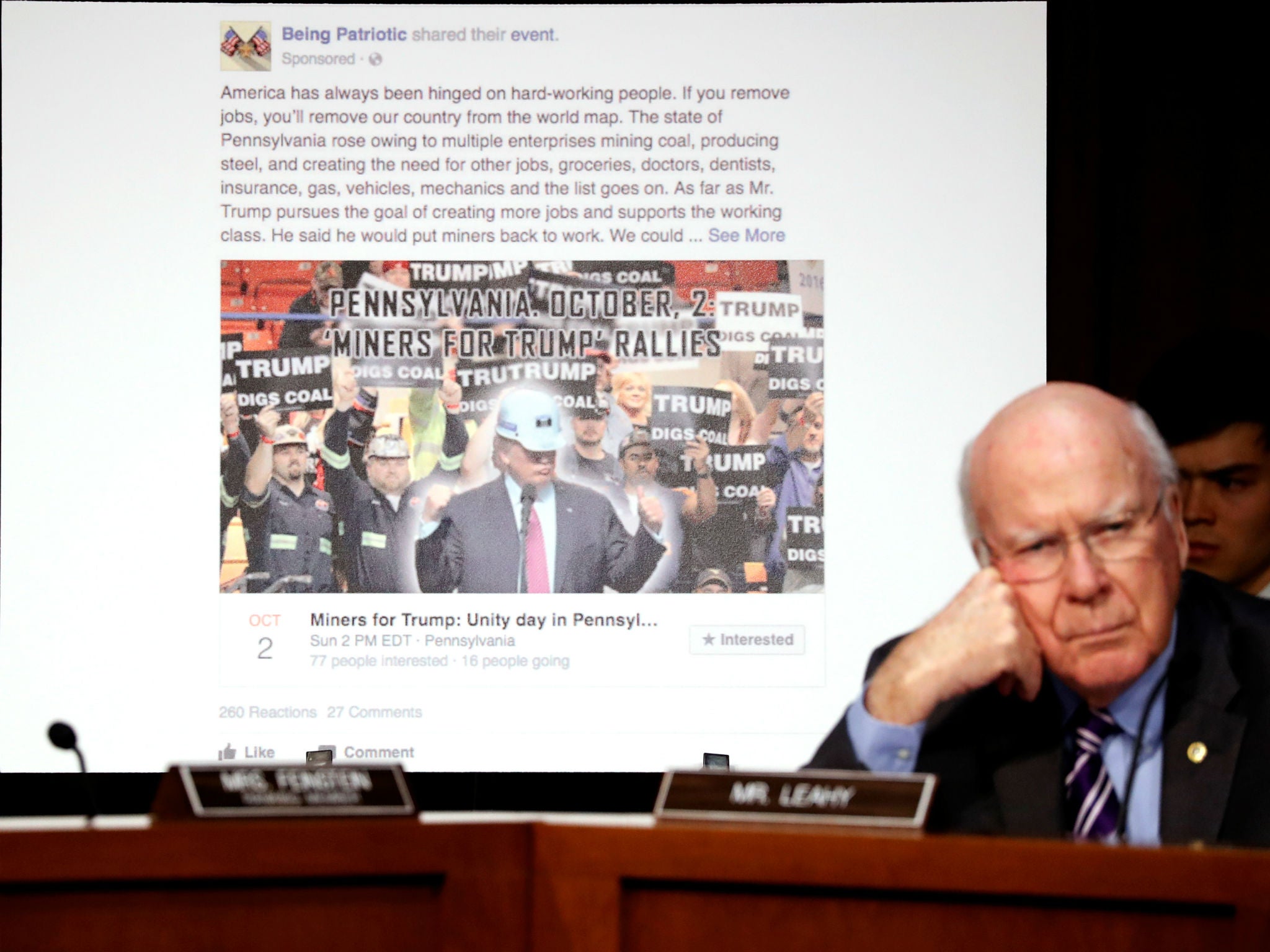

Examples released by Congress show Russian-linked posts promoting Donald Trump and Bernie Sanders, assailing Hillary Clinton and weighing in on various sides of issues like immigration and gun control.

Many pages were designed to resemble grassroots organisations that did not exist.

Since American intelligence officials concluded that the Kremlin mounted a concerted effort to disrupt the 2016 presidential election, Facebook and other tech giants have faced questions about how their platforms enabled the spread of disinformation.

Since disclosing the vast reach of the propaganda effort, Facebook has faced a barrage of questions about how thoroughly it vets content and whether it adequately shields users from misleading material.

Among changes the site has instituted, it has pledged to start releasing more information about who pays for political content.

It also announced that it would hire an additional 10,000 people to monitor content, an effort to “identify and remove content violations and fake accounts”, and moved to block content flagged as false by fact-checking organisations.
