'We're blind to our blindness. We have very little idea of how little we know. We're not designed to know how little we know'

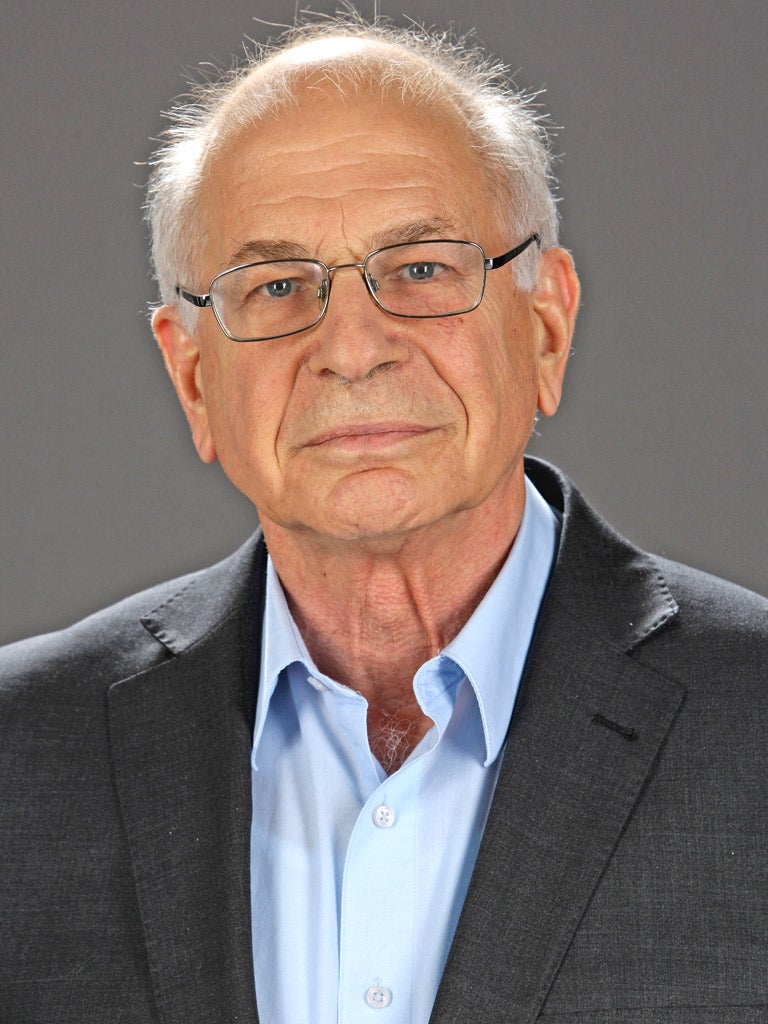

Insight: Daniel Kahneman, psychologist

Daniel Kahneman, 77, is the Eugene Higgins Professor of Psychology Emeritus at Princeton University. In 2002, he was awarded the Nobel Prize in Economics for his analyses of decision-making and uncertainty, developed with the late Amos Tversky. His work has influenced not only psychology and economics, but also medicine, philosophy, politics and the law. In his new book, Thinking, Fast and Slow, Kahneman explains the ideas that have driven his career over the past five decades, providing an unrivalled insight into the workings of our own minds. Nassim Nicholas Taleb has called it "a landmark book in social thought".

"Fast" and "Slow" thinking is a distinction recognised in psychology under various names, such as system one [intuitive thought] and system two [deliberate thought]. The subtitle for my talks on the subject is: "The marvels and the flaws of intuitive thinking." We act intuitively most of the time. System one learns how to navigate the world, and mostly it does so very well. But when system one doesn't have the answer to a question, it answers another, related question.

A study was done after there were terror incidents in Europe. It asked people how much they would be willing to pay for an insurance policy that covered them against death, for any reason, during a trip abroad. Another group of people were asked how much they would pay for a policy that covered them for death in a terrorist incident during the trip. People paid substantially more for the second than for the first, which is absurd. But the reason is that we're more afraid when we think of dying in a terrorist incident, than we are when we think simply of dying. You're asked how much you're willing to pay, and you answer something much simpler, which is: "How afraid am I?"

Some students were asked two questions: "How happy are you?" and "How many dates did you go on last month?" If you ask the questions in that order, the answers are completely uncorrelated. But if you reverse the order, the correlation is very high. When you ask people how many dates they had last month, they have an emotional reaction: if they went on dates, then they're happier than if they went on none. So if you then ask them how happy they are, that emotional reaction is going on already, and they use it as a substitute for the answer to the question.

On the most elementary level, what we feel is a story. System one generates interpretations, which are like stories. They tend to be as coherent as possible, and they tend to suppress alternatives, so that our interpretation of the world is simpler than the world really is. And that breeds overconfidence.

What do I mean by "story"? There is a drawing in the book, with a line of letters: ABC, and a line of numbers: 12 13 14. The B and the 13 are actually physically identical. You perceive them differently because of the context. In the context of letters, it becomes a B. In the context of numbers, the same shape becomes a 13. You're not aware of the ambiguity, you just see it as a 13. That's what I mean by a very simple story.

We also tell more complex stories, but these tend to be very simplified, too. They exaggerate the role of agents and systematically underplay the role of luck. This is inevitable, because anything that didn't happen but could have happened is not part of the story. For example, the story of the success of Google: suppose that somebody else had developed a search engine as capable as the page-rank algorithm that [Sergey Brin and Larry Page] invented. Then Google might not exist today. So we don't realise how lucky they were. We say they were ahead of their time, but we don't know by how much. Because as soon as they were successful, that discouraged others from working along the same lines.

Leaders and entrepreneurs are particularly optimistic. There's plenty of evidence for that. They wouldn't be doing what they're doing if they didn't have a sense that they could control their environment, and if they were not quite sanguine about their chances of success. Leaders are selected for their optimism. I have no interest in my financial adviser or in my surgeon being an optimist. On the other hand, if you have a football team that believes they can win, they are going to do better.

Political columnists and sports pundits are rewarded for being overconfident. Most successful pundits are selected for being opinionated, because it's interesting, and the penalties for incorrect predictions are negligible. You can make predictions and a year later people won't remember them. There was a study by Phil Tetlock about the ability of pundits, CIA analysts and academic experts to make long-term strategic predictions, looking five to 10 years ahead. They couldn't do it, but believed they could. And the people who were most overconfident, and had the strongest theory? They're the ones who were on TV.

We're blind to our blindness. We have very little idea of how little we know. We're not designed to know how little we know. Most of the time, [trying to judge the validity of our own judgements] is not worth doing. But when the stakes are high, my guess is that asking for the advice of other people is better than criticising yourself, because other people are more likely – if they're intelligent and knowledgeable – to understand your motives and your needs.

I'm not a great believer in self-help. The role of my book is to educate gossip, to make people more sophisticated in the way they think about the decisions and judgments of other people, which is easy and pleasant to do. If we had a society in which people had a richer language in which to talk about these issues, I think it would have an indirect effect on people's decisions, because we constantly anticipate the gossip of others.

"Thinking, Fast and Slow" by Daniel Kahneman, out now (Allen Lane, £25)
